GenAI Impact on DevOps & Application Development

A Technical Perspective

Shannon McFarland, Vice President, Cisco DevNet

Matt DeNapoli, Head of Developer Strategy & Product Management, Cisco DevNet

Today, developers are expected to do much more than just write code. As the line between development and operations continues to blur, developers must deeply understand programming languages, frameworks, databases, CI/CD, containerization, cloud services—the list goes on and on. And the scope of their role keeps expanding, from debugging and testing code to creating documentation. Now, developers also need to keep up with AI, with many being asked to integrate generative AI (GenAI) into their workflows. This can quickly become overwhelming, but with the right knowledge and skills, developers can use GenAI to their advantage.

In this article, we’ll explain how GenAI is impacting DevOps and application development today, how it can help developers work more efficiently, and how we can prepare for the future of AI.

The evolution of artificial intelligence

AI has been around for a long time. We've used predictive analytics across the industry for years: examining data over time to build prediction models or algorithms that determine an outcome. In infrastructure, this is applied to areas like network traffic management, resource allocation in storage and compute, application performance prediction, load balancing, and data breach detection. On the business side, predictive analytics helps us look at data over a period and then make forward-looking decisions or estimates based on it. This helps with sales forecasting, manufacturing maintenance, fraud detection, customer behavior analysis, and healthcare improvements.

GenAI vs. predictive AI

GenAI builds on predictive AI by not only analyzing data but also generating new content and optimizing actions. Unlike predictive AI, which focuses on monitoring and predicting outcomes, GenAI can recommend something and then allow the system to optimize it on our behalf—we can even allow it to autonomously learn and make decisions. For example, in cloud native environments and event-driven architectures like Kubernetes auto-scaling, GenAI can intelligently manage resource scaling, which boosts system functionality and efficiency.
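To make the scaling example concrete, here is a minimal sketch of the replica calculation that the Kubernetes Horizontal Pod Autoscaler documents: desired replicas = ceil(current replicas × current metric / target metric). The function name, the default bounds, and the idea of feeding it a forecast value instead of a live reading are illustrative assumptions. The real autoscaler also applies a tolerance band and stabilization windows that are omitted here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Replica count from the ratio rule documented for the Kubernetes HPA.

    The min/max bounds are hypothetical defaults chosen for this sketch.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the allowed range, as an autoscaler policy would.
    return max(min_replicas, min(max_replicas, raw))


# Four pods at 90% average CPU against a 60% target.
replicas = desired_replicas(4, 90, 60)
```

A predictive or generative component could supply `current_metric` as a forecast, so the system scales ahead of demand rather than reacting to it.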

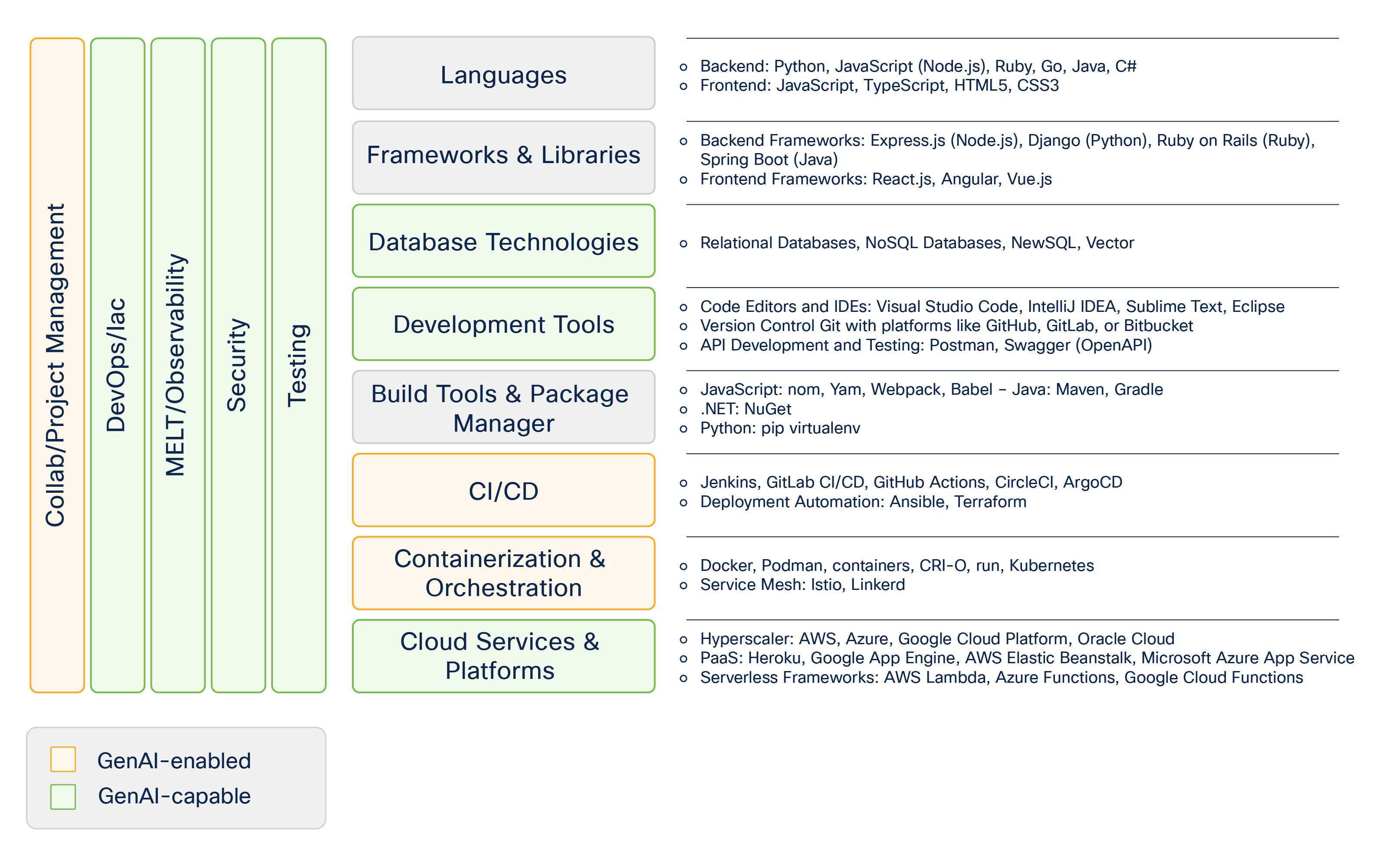

GenAI intersection points in the modern developer stack

Joining forces: Developers and GenAI

GenAI is already enhancing the day-to-day lives of developers by helping in the testing, security, observability, and DevOps spaces. More GenAI opportunities are emerging across the modern developer stack, for example in CI/CD pipelines.

Streamlining coding, testing, and integration

Coding assistants are quickly gaining popularity, with new (or improved) ones constantly being released. Some are domain-specific, designed to help with particular use cases like infrastructure as code.

Many developers are already using tools like GitHub Copilot, Amazon CodeWhisperer, or ChatGPT as part of their daily jobs. It’s important for developers to test these tools and ensure they are helpful and accurate for their specific needs. Tedious and challenging tasks often get postponed, and this is where GenAI can make a significant impact in the developer space. Spending hours debugging is exhausting, and ensuring comprehensive unit test coverage can be a hassle. GenAI can help here by automatically creating unit tests or generating functional code in test-driven development. It can also generate documentation, allowing developers to focus more on solving the problem and generating solutions rather than on making sure all their code is documented.

We've had code analysis and review tools for years, but they've been relatively basic compared to what GenAI tooling promises. Now, they're getting much smarter. We'll soon be able to rapidly create test frameworks and be confident that our code has comprehensive coverage when we push it to QA or production.

On the integration side, we’re starting to feel more comfortable with how all these services interact. We can perform tasks like API evaluation to ensure APIs are secure, used appropriately, and provide the expected data, ensuring everything works cross-functionally and securely.

Another intriguing opportunity with GenAI is around synthetic data sets. One of the hardest problems in software testing is ensuring we cover all the contingencies and input and output values our applications might encounter. Building synthetic data sets manually is very tedious: it involves a lot of copying and pasting, without knowing whether all contingencies are covered. If we can use GenAI to generate these data sets from historical data, it will make the testing process much more efficient and comprehensive.
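As a rough illustration of the idea, the sketch below derives synthetic test rows from a handful of historical records. It is a deterministic stand-in for what a generative tool would produce, not a GenAI call. The record fields (`amount`, `region`) and the function name `synthesize` are hypothetical.

```python
import random


def synthesize(history: list[dict], n: int, seed: int = 0) -> list[dict]:
    """Build n synthetic rows shaped like `history`, plus boundary rows."""
    rng = random.Random(seed)  # seeded so test runs are repeatable
    amounts = [row["amount"] for row in history]
    regions = sorted({row["region"] for row in history})
    low, high = min(amounts), max(amounts)

    # Random rows drawn from the observed range and categories.
    rows = [
        {"amount": round(rng.uniform(low, high), 2), "region": rng.choice(regions)}
        for _ in range(n)
    ]
    # Boundary rows that hand-written fixtures often miss.
    for edge in (low, high, 0.0, -1.0):
        rows.append({"amount": edge, "region": regions[0]})
    return rows


# Hypothetical historical data for the sketch.
history = [
    {"amount": 12.5, "region": "emea"},
    {"amount": 99.0, "region": "apac"},
    {"amount": 40.0, "region": "amer"},
]
data = synthesize(history, n=5)
```

The boundary rows are the point: they cover minimum, maximum, zero, and negative values without anyone copying and pasting them by hand.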

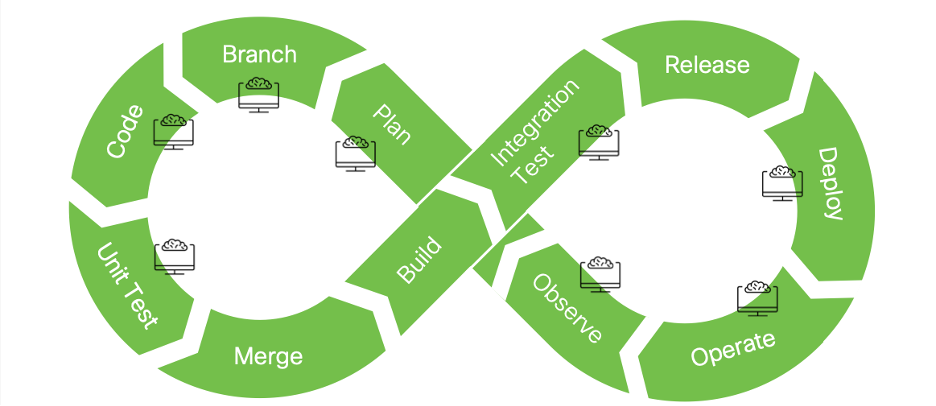

Functional points of AI insertion in the CI/CD pipeline

Simplifying documentation

While documentation isn't traditionally part of the DevOps loop, it shouldn’t be overlooked. Code comments are essential to make code understandable and maintainable for others. GenAI can be used to generate code comments as developers write.

It’s also useful for functional documentation. Many applications today—whether mobile or web—are intuitive, meaning that we don’t need user manuals to navigate them. However, in larger organizations, we often interact with customer relationship management (CRM) or enterprise resource planning (ERP) systems, where functional documentation is necessary. But writing functional documentation can be tedious for developers. Here, GenAI can help by automatically generating documentation as our code executes specific functions.

GenAI can significantly aid in API implementation and usage. Documenting API interactions provides crucial visibility for passing on, refactoring, or maintaining code. GenAI can auto-document APIs or generate code from documentation, speeding up the process and ensuring thorough coverage.
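One way to picture auto-documentation is to look at the facts a generator would start from. The sketch below uses Python's standard `inspect` module to pull the operation name, summary, and parameters out of a function; a GenAI tool would then expand these into readable prose. The `get_device` function and the output layout are assumptions made for the example, not a standard format.

```python
import inspect


def get_device(device_id: str, verbose: bool = False) -> dict:
    """Return inventory details for one device."""
    return {"id": device_id, "verbose": verbose}


def describe(func) -> dict:
    """Collect the facts a documentation generator would start from."""
    sig = inspect.signature(func)
    params = []
    for name, p in sig.parameters.items():
        params.append({
            "name": name,
            # Use the class name for plain annotations such as str or bool.
            "type": getattr(p.annotation, "__name__", str(p.annotation)),
            # A parameter with no default must be supplied by the caller.
            "required": p.default is inspect.Parameter.empty,
        })
    return {
        "operation": func.__name__,
        "summary": inspect.getdoc(func) or "",
        "parameters": params,
    }


doc = describe(get_device)
```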

Boosting operational efficiency with AI

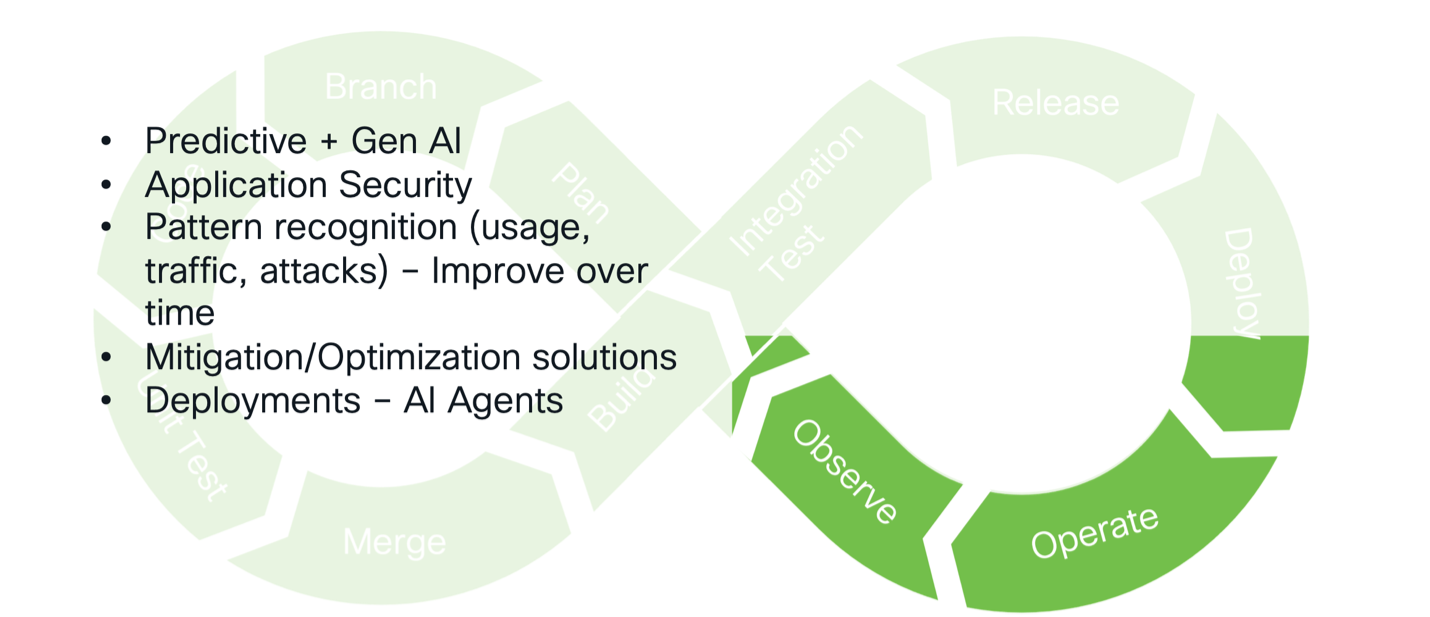

Once developers have deployed their code and systems are running smoothly, we can use predictive AI combined with generative AI for optimization and problem mitigation. Predictive AI has provided business analysis and insights for years, but it hasn't helped make decisions directly. By integrating GenAI, we can address this gap, enhancing application security, pattern recognition, traffic analysis, and attack detection. Over time, these systems will improve as they learn, keeping us ahead of potential issues.

The concept of AI agents is particularly exciting here. Imagine AI platforms autonomously making deployment decisions, such as choosing between public and private clouds, managing service interactions, and determining optimal activation times. Offloading these complex decisions to a GenAI service, combined with predictive AI, offers a tremendous opportunity likely to materialize soon.

GenAI can enhance operational efficiency

GenAI in Infrastructure as Code

Infrastructure as Code (IaC) can help network and infrastructure engineers as they enter the automation space. For example, GenAI can help engineers create scripts for Ansible and artifacts for Terraform, and embrace automation and programmability within their infrastructure.

In testing, we use various tools, similar to software development. With pyATS and Genie, we can build test cases and integrate them with Cisco Modeling Labs, giving us confidence in a virtual environment before physical deployment. GenAI can also generate synthetic traffic, which lets us simulate network loads and scenarios efficiently and eliminates the need for physical testing environments for wireless technologies.

The operations piece parallels software development but operates lower in the stack. By marrying predictive analysis with GenAI, we can gain end-to-end visibility into security and ultimately provide mitigation and optimization solutions.

AI API use cases

What are the trends around APIs in AI today? Interestingly, not much has changed, which is a good thing as there's less to learn. However, API interactions are evolving with microservices and AI agents increasingly involved.

From a technical standpoint, this interaction remains familiar. If you know various API types—RESTful interfaces, WebSocket, gRPC, and GraphQL—you’re already equipped to handle AI-related interactions. These same API types are prevalent in AI environments, especially when interacting programmatically with components like vector databases. The good news is that the skills you already have for writing input, operations, and other interactions still apply in the AI world.

Managing API sprawl with generative AI

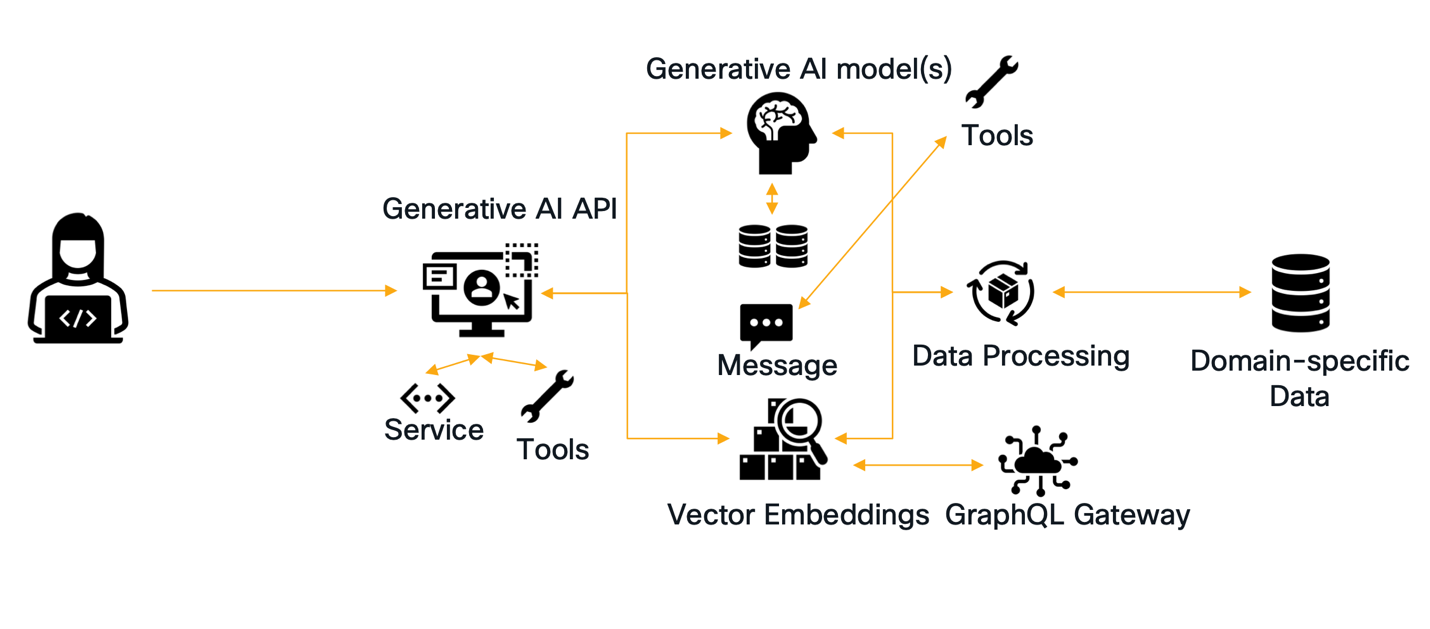

Let's explore why we might need more intelligent APIs and what this looks like architecturally. The graphic shows an architecture involving GenAI, where a user is interacting with a system like OpenAI via a RESTful interface. The OpenAPI specification for OpenAI is published, detailing paths and operations, allowing for interactions such as code generation or audio queries. The user interacts with the generative AI system, which performs its magic using large and small language models.

An architecture involving GenAI, where a user is interacting with a system like OpenAI
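The user-to-model hop in this architecture is an ordinary REST call. The sketch below assembles, but does not send, a JSON POST to OpenAI's chat completions path using only the standard library. The model name and API key are placeholders, and the request body is kept to the two fields we are confident about (`model` and `messages`); check the published specification for the current shape.

```python
import json
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(prompt: str, api_key: str, model: str) -> urllib.request.Request:
    """Assemble (but do not send) a JSON POST for a chat-style endpoint."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder key and model name; nothing is sent over the network here.
req = build_chat_request("Write a Python hello world", "sk-placeholder", "example-model")
```

Sending it would be a matter of passing `req` to `urllib.request.urlopen`, which is the same pattern used for any other REST service.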

In an enterprise environment, domain-specific data (e.g., sales or customer data) undergoes regular processing, creating numerical values and vector embeddings used by the AI system. Each interaction is an API call, leading to API sprawl similar to the early days of cloud native microservices. In a Kubernetes environment, each pod fronted by a service reference results in extensive control, visibility, and scaling capabilities but also introduces significant API sprawl.
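To show what the system does with those vector embeddings, here is a minimal in-memory similarity search. The three-dimensional vectors and document names are made up for the sketch; real embeddings have hundreds or thousands of dimensions and live in a vector database behind an API.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def nearest(query: list[float], index: dict[str, list[float]], k: int = 1) -> list[str]:
    """Return the k document names whose embeddings are closest to the query."""
    ranked = sorted(index, key=lambda name: cosine(query, index[name]), reverse=True)
    return ranked[:k]


# Hypothetical embeddings for three enterprise documents.
index = {
    "q1-sales-report": [0.9, 0.1, 0.0],
    "support-ticket": [0.1, 0.8, 0.3],
    "customer-churn": [0.2, 0.3, 0.9],
}
matches = nearest([0.85, 0.2, 0.05], index, k=2)
```

In production, each `nearest` lookup would be another API call, which is part of how the sprawl described above accumulates.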

To manage this, tools like application service meshes and eBPF help with control and visibility. However, high-level architecture components further complicate the situation, with dependencies on different tools and interactions, such as vector databases communicating through a GraphQL gateway. Consequently, a standard Generative AI environment involves many underlying API calls, exacerbating API sprawl.

Service meshes can help mitigate this issue, but we need intelligent API solutions to handle these complexities. Such solutions manage API sprawl effectively when implemented correctly.

The rise of AI agents

This is the onset of AI agents. Many people are familiar with assistants like Alexa or Google Home, which perform predefined functions based on user prompts. These assistants are a subcategory of AI agents. Agents process natural language inputs and range from simple rule-based systems to advanced machine learning algorithms.

Today, most agents are semi-autonomous, meaning their functions are predefined, with guardrails set around what they cannot do. For multimodal approaches—using text, speech, and other inputs within an AI framework—we can write new code, pull from open source, and integrate components using APIs (REST, GraphQL, etc.), or use off-the-shelf solutions to build an AI agent.

Using agents safely and effectively

Frameworks for establishing assistants or agents that can communicate and perform functions are readily available. For instance, a developer could create a code interpreter agent for refactoring code from one language to another, like from Python to Rust. However, this process is not without risks. Novice programmers might miss critical issues, potentially introducing security vulnerabilities. In the API world, this is especially dangerous, as mistakes can lead to vulnerabilities like those highlighted in the OWASP Top Ten or the OWASP Top 10 for LLM Applications, where hidden API paths could expose data infrastructure without the developer's knowledge.

That’s why it’s crucial to set guardrails around these agent technologies. The use of AI agents will grow, but they must be used safely and securely.
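One simple form of guardrail is an allow-list: the agent can only invoke tools that have been explicitly registered. The sketch below is a minimal illustration; the tool names, `GuardrailError`, and `run_tool` are all hypothetical and not taken from any agent framework.

```python
from typing import Callable


class GuardrailError(Exception):
    """Raised when an agent requests an action outside its allow-list."""


def read_inventory(site: str) -> str:
    return f"inventory for {site}"


def delete_database(name: str) -> str:
    # Exists in the codebase but is deliberately NOT allow-listed below.
    return f"deleted {name}"


# Only tools registered here can be reached by the agent.
ALLOWED_TOOLS: dict[str, Callable[[str], str]] = {"read_inventory": read_inventory}


def run_tool(tool_name: str, argument: str) -> str:
    """Dispatch an agent's tool request, refusing anything not allow-listed."""
    tool = ALLOWED_TOOLS.get(tool_name)
    if tool is None:
        raise GuardrailError(f"tool '{tool_name}' is not permitted")
    return tool(argument)


result = run_tool("read_inventory", "sjc")
```

Denying by default means a new or hidden capability stays unreachable until someone consciously adds it to the list.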

The role of API gateways in AI

Are AI agents going to replace everything? Absolutely not. As a developer, operator, or SRE, you'll still focus on APIs. REST remains the main pathway for interfacing with most primary services, with GraphQL used for vector-related tasks.

If you lack solid knowledge of API gateways, now is the time to get familiar. API gateways are instrumental in these environments, whether working with microservices or standard AI pathways. They help manage API interactions effectively, creating corridors for APIs to communicate with non-API-centric components like assistants and agents.
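For readers new to gateways, the core behavior can be sketched in a few lines: authenticate the caller, then map the request path to an upstream service. The route table, hostnames, and key below are invented for the example; a real gateway adds rate limiting, TLS, logging, and much more.

```python
# Hypothetical route table: path prefix -> upstream service.
ROUTES = {
    "/agents/": "http://agent-service.internal",
    "/vectors/": "http://vector-db.internal",
}
VALID_KEYS = {"demo-key"}  # placeholder credential store


def route(path: str, api_key: str) -> tuple[int, str]:
    """Return (status, upstream URL or error message) for one request."""
    # Authenticate before revealing anything about the routes.
    if api_key not in VALID_KEYS:
        return 401, "invalid API key"
    for prefix, upstream in ROUTES.items():
        if path.startswith(prefix):
            return 200, upstream + path
    return 404, "no route"


status, target = route("/vectors/search", "demo-key")
```

Because every call passes through one function, this is also the natural place to add visibility and policy for agent traffic.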

Agents are growing in popularity. Tools like AutoGen and CrewAI, from both the open source and private sectors, are helping us build better agent frameworks. Assistants, like Amazon Alexa, will remain a part of our lives for a long time. They'll improve at their tasks but will generally handle simplistic functions. The key is that these assistants will become smart enough to delegate tasks they can't handle to more capable agents. If an agent can't perform the task, new agents will be dynamically created to meet those requirements.

What's next for developers?

Adopting AI tools requires careful consideration. High-friction tasks like documentation, code refactoring, and testing are ripe for AI, but operationalizing these in production can be tricky.

Whether you're just beginning to explore GenAI or looking for ways to integrate it into your daily workflows, the AI Developer Hub is a great resource to guide you. Check it out to learn more about AI and how to use it to your advantage.