What are the Golden Signals?

Discover the importance of the four golden signals – latency, traffic, errors, and saturation – in providing a comprehensive view of system health.


It is no secret that downtime is expensive: it can cost an estimated $5,600 per minute. Outages not only cause monetary damage; they also significantly harm a brand’s reputation. A reliable IT system therefore matters for both the costs and the credibility of a business.

To detect outages, every software system should measure key performance indicators (KPIs) and business metrics, including availability, resource utilization, and user experience (UX). However, keeping an eye on a large number of metrics in a distributed system can overwhelm an operator or an on-call site reliability engineer (SRE).

To organize this complex maze of metrics, Google’s SRE teams observed and defined four essential signals that can be used to detect and analyze issues in any user-facing system. These metrics are referred to as “golden signals.”

Before understanding the golden signals, it is important to first understand the concepts of observability and monitoring.

Observability vs. monitoring

Observability and monitoring are two related but distinct concepts in the field of SRE. While both are crucial for the effective management of IT systems, they serve different purposes and offer unique benefits.

Observability enables organizations to gather insights into their system. It focuses on understanding and answering questions about what is happening inside the system via analysis of its output. Observability is achieved through the collection, aggregation, and analysis of various data points, including metrics, events, logs, and traces (MELT). By adopting observability practices, SRE teams aim to gain a holistic understanding of system performance, identify anomalies, troubleshoot issues, and make informed decisions to improve reliability.

Monitoring, on the other hand, is the practice of systematically tracking and measuring the health and performance of a system in real time. It involves setting up predefined metrics, thresholds, and alerts to detect deviations or abnormal behaviors. Monitoring typically entails collecting metrics related to resource utilization, response times, error rates, and other relevant indicators. The primary objective of monitoring is to proactively detect and promptly respond to issues, minimizing their impact on system availability and user experience.

Importance to site reliability engineers

Observability and monitoring differ mainly in their focus and approach, but both are crucial for SRE practices for the following reasons.

Early detection of issues

Observability and monitoring enable SRE teams to identify and respond to potential issues before they impact system performance or user experience. They allow for proactive intervention and troubleshooting.

Faster incident response

By having comprehensive observability and monitoring systems in place, SRE teams can rapidly identify and address incidents, reducing mean time to detect (MTTD) and mean time to resolve (MTTR).
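To make the two metrics concrete, here is a minimal Python sketch that computes MTTD and MTTR from incident records. The `Incident` fields and sample timestamps are hypothetical, and the sketch measures MTTR from incident start to resolution; some teams measure it from detection instead, so adjust it to your own definition.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Incident:
    # Hypothetical incident record; field names are illustrative.
    started: datetime
    detected: datetime
    resolved: datetime


def mean_duration(durations: list[timedelta]) -> timedelta:
    """Average a non-empty list of timedeltas."""
    return sum(durations, timedelta()) / len(durations)


def mttd(incidents: list[Incident]) -> timedelta:
    # Mean time to detect: from incident start until it was noticed.
    return mean_duration([i.detected - i.started for i in incidents])


def mttr(incidents: list[Incident]) -> timedelta:
    # Mean time to resolve, measured here from incident start to resolution.
    return mean_duration([i.resolved - i.started for i in incidents])


incidents = [
    Incident(datetime(2024, 1, 1, 10, 0), datetime(2024, 1, 1, 10, 5), datetime(2024, 1, 1, 10, 45)),
    Incident(datetime(2024, 1, 2, 14, 0), datetime(2024, 1, 2, 14, 15), datetime(2024, 1, 2, 15, 15)),
]
print(mttd(incidents))  # 0:10:00
print(mttr(incidents))  # 1:00:00
```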

Root cause analysis

Observability provides the necessary insights and data to perform thorough root cause analysis for incidents and system failures. It helps in understanding the underlying causes and implementing preventive measures.

Capacity planning and performance optimization

Observability and monitoring data help SRE teams understand resource utilization, bottlenecks, and performance patterns, enabling them to optimize systems, plan for future growth, and ensure efficient resource allocation.

Iterative improvements

Both observability and monitoring promote a culture of continuous improvement by providing feedback loops and data-driven insights. SRE teams can leverage these insights to iterate on system design, architecture, and operational practices.

Ultimately, observability focuses on understanding complex systems by exploring their internal behaviors, and monitoring concentrates on detecting deviations and maintaining system stability. Both observability and monitoring are essential components of an effective SRE strategy, enabling proactive incident management, rapid response, and continuous improvement.

SLI vs. SLO vs. SLA

To measure KPIs in the context of business agreements, organizations use concepts such as service-level indicators (SLIs), service-level objectives (SLOs), and service-level agreements (SLAs). These concepts provide a framework to measure and manage business and customer expectations.

These terms can be understood by using a web application as an example.

- An SLI refers to the “actual” numbers or metrics for the health of a system. SLIs are typically measured over a period of time, such as days, months, or quarters. How long a given web app feature takes to deliver a result would be an SLI.

- An SLO is an internal target set by the SRE team for keeping the system available and meeting expectations. An SLO should be carefully defined so that it is achievable rather than overly ambitious. A company might set an SLO for its web application that requires the average response time to be 100 milliseconds or less.

- An SLA, meanwhile, is a legal commitment to customers outlining a system's availability and response time. An SLA is usually less strict than the internally defined SLO, so that the team can react to a missed SLO before the contractual promise is at risk. A good SLA is clear and concise. For example, an SLA for a web application might state that if more than 5% of requests take longer than 150 milliseconds to respond, the customer will receive a refund.
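The web application example can be sketched in a few lines of Python. This is a hedged illustration, not a standard: it reuses the 100 ms average-latency SLO and interprets the SLA as “no more than 5% of requests may take longer than 150 ms.” The function names and the sample latencies are hypothetical.

```python
from __future__ import annotations

from statistics import mean

# Thresholds taken from the web application example in the text.
SLO_AVG_LATENCY_MS = 100.0    # internal target: average response time <= 100 ms
SLA_LATENCY_MS = 150.0        # contractual latency bound
SLA_MAX_SLOW_FRACTION = 0.05  # at most 5% of requests may exceed the bound


def average_latency_sli(latencies_ms: list[float]) -> float:
    """SLI: measured average response time over the window."""
    return mean(latencies_ms)


def slow_fraction_sli(latencies_ms: list[float], bound_ms: float) -> float:
    """SLI: fraction of requests slower than bound_ms."""
    return sum(1 for x in latencies_ms if x > bound_ms) / len(latencies_ms)


def evaluate(latencies_ms: list[float]) -> dict[str, bool]:
    avg = average_latency_sli(latencies_ms)
    slow = slow_fraction_sli(latencies_ms, SLA_LATENCY_MS)
    return {
        "slo_met": avg <= SLO_AVG_LATENCY_MS,
        "sla_breached": slow > SLA_MAX_SLOW_FRACTION,
    }


# Hypothetical window of 20 requests: 19 at 110 ms and one at 160 ms.
window = [110.0] * 19 + [160.0]
print(evaluate(window))  # {'slo_met': False, 'sla_breached': False}
```

In this sample the SLO is missed (average 112.5 ms) while the SLA is not yet breached (exactly 5% of requests are slow). That gap is the buffer a stricter internal SLO is meant to provide.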

SLIs, SLOs, and SLAs can be defined for numerous KPIs. Of these, the four golden signals are essential for indicating a system’s health, as discussed in the next section.

Golden Signals

The term “Golden Signals” was introduced by Google in 2016 in its book Site Reliability Engineering: How Google Runs Production Systems. The book defines the four golden signals as latency, traffic, errors, and saturation. We discuss each in turn below.

Latency

Latency is the time it takes to service a request; how long a server takes to respond to an HTTP request is one example. High latency leads to longer wait times and thus a poor user experience. It is important to distinguish between the latency of successful requests and that of failed requests: a failed request can return very quickly, so mixing the two can make a slow service look fast.

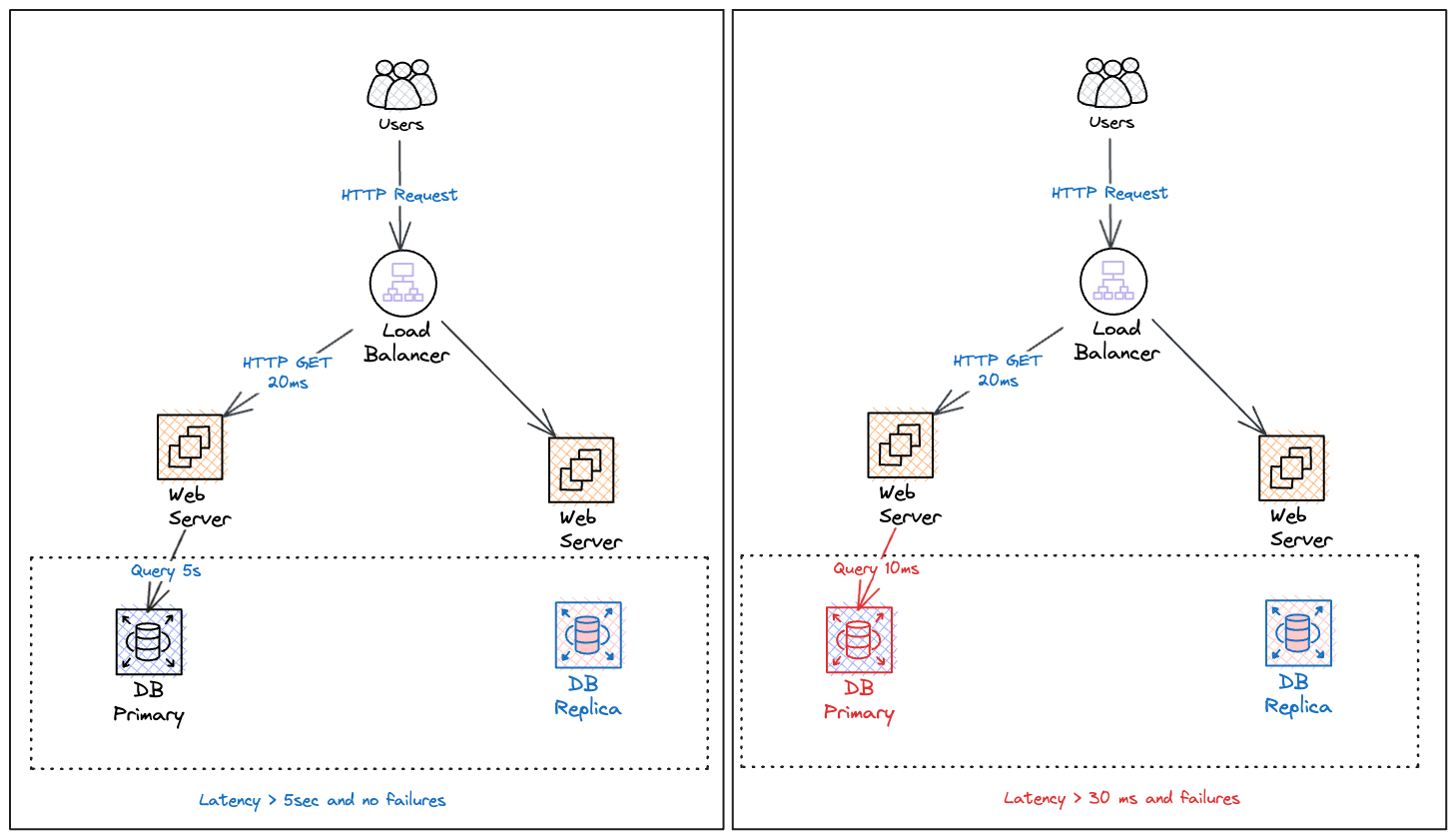

Figure 1: A typical architecture of a web application showing a successful and unsuccessful response along with latency.


In the above diagram, we can see two scenarios:

- A request took more than 5 seconds to complete, but the response was successful (HTTP 200).

- The response time is much quicker, but the request failed with an HTTP 500 error due to a failure in the database connection.

Companies need to track latency over time for both successful and unsuccessful responses and look for deviations and anomalies within a sliding window. The exact alerting thresholds are derived from the SLO defined for each metric. Additionally, as the diagram above shows, combining latency with the error rate can help reveal the root cause of a failure faster.
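A minimal sketch of tracking latency in a sliding window, keeping successful and failed requests apart so that fast failures (like the HTTP 500 in Figure 1) do not mask slow successes. The 60-second window, the nearest-rank p95, the 300 ms threshold, and all class and function names are hypothetical; in practice the threshold would come from your SLO, and a metrics system would usually compute percentiles from histograms.

```python
from __future__ import annotations

import math
from collections import deque
from dataclasses import dataclass


@dataclass
class Request:
    timestamp_s: float  # completion time in seconds
    latency_ms: float
    status: int         # HTTP status code


class LatencyWindow:
    """Sliding window of recent requests; assumes requests arrive in timestamp order."""

    def __init__(self, window_s: float) -> None:
        self.window_s = window_s
        self.requests: deque[Request] = deque()

    def add(self, req: Request) -> None:
        self.requests.append(req)
        # Evict requests older than the window, relative to the newest request.
        cutoff = req.timestamp_s - self.window_s
        while self.requests and self.requests[0].timestamp_s <= cutoff:
            self.requests.popleft()

    def percentile(self, pct: float, success: bool) -> float | None:
        """Nearest-rank latency percentile; 'success' here means status < 400."""
        values = sorted(r.latency_ms for r in self.requests if (r.status < 400) == success)
        if not values:
            return None
        rank = max(1, math.ceil(pct / 100 * len(values)))
        return values[rank - 1]


SLO_P95_MS = 300.0  # hypothetical SLO: p95 latency of successful requests

window = LatencyWindow(window_s=60.0)
# The two scenarios from Figure 1, plus two ordinary requests.
for t, latency, status in [(0.0, 120.0, 200), (5.0, 5200.0, 200), (8.0, 15.0, 500), (12.0, 140.0, 200)]:
    window.add(Request(t, latency, status))

p95_ok = window.percentile(95, success=True)
p95_failed = window.percentile(95, success=False)
print(p95_ok, p95_failed)  # 5200.0 15.0
if p95_ok is not None and p95_ok > SLO_P95_MS:
    print("alert: successful-request latency exceeds SLO")
```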

Traffic

Traffic is the number of requests that are made to a system over a period of time. High traffic volume can put a strain on system resources and result in performance degradation.

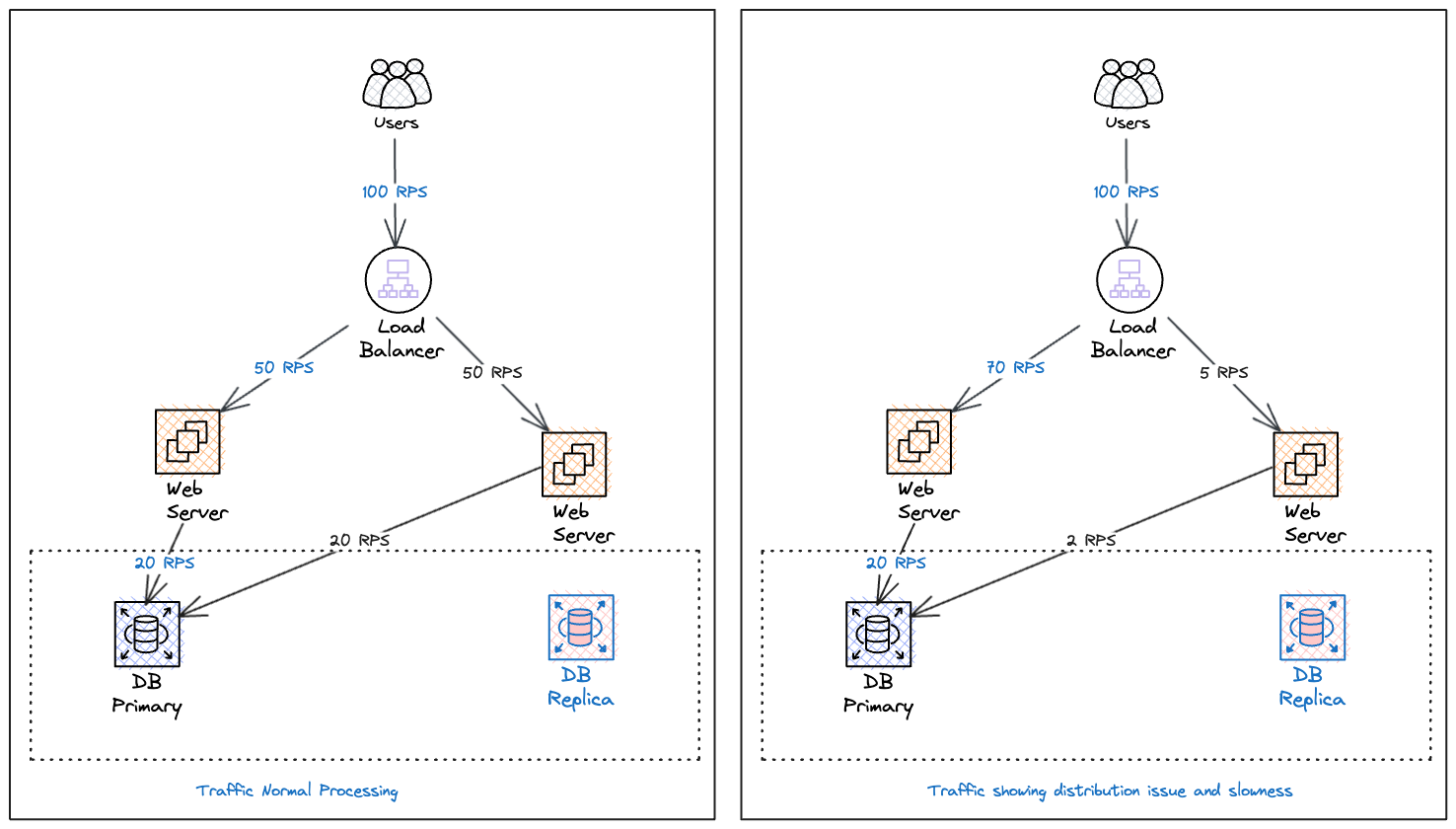

Figure 2: A typical architecture of a web application showing traffic for each component


Continuing with the example of the web application, traffic in this case is the number of HTTP requests to the load balancer and web server, as well as the number of queries or transactions to the database. This is measured as a rate, e.g., requests per second or queries per second.
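Measuring traffic as a rate amounts to counting events per component over a window and dividing by the window length. The sketch below assumes a hypothetical in-memory list of `(timestamp, component)` events; a real deployment would more likely read counters from its metrics pipeline.

```python
from __future__ import annotations

from collections import Counter


def rates_per_second(
    events: list[tuple[float, str]], window_start: float, window_end: float
) -> dict[str, float]:
    """Count events per component in [window_start, window_end) and divide by the window length."""
    duration = window_end - window_start
    counts = Counter(component for ts, component in events if window_start <= ts < window_end)
    return {component: n / duration for component, n in counts.items()}


# Hypothetical 10-second sample: HTTP requests at the load balancer and web server,
# and queries at the database.
events = (
    [(i * 0.5, "load_balancer") for i in range(20)]
    + [(i * 0.5, "web_server") for i in range(20)]
    + [(i * 0.2, "database") for i in range(50)]
)
print(rates_per_second(events, 0.0, 10.0))
# {'load_balancer': 2.0, 'web_server': 2.0, 'database': 5.0}
```

In this made-up sample the database sees 2.5 queries per web request, the kind of per-component ratio that helps show where load actually lands.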

A change in traffic pattern can tell us about load, and following a given trend can be quite useful in future analysis and capacity planning. Monitoring traffic also shows the distribution of requests in each component of a distributed system, which can help identify problems like performance issues or saturation in the component.
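One simple way to notice a change in traffic pattern is to compare the current rate against a baseline from earlier windows. This sketch uses the plain mean of previous windows and a hypothetical ±50% threshold; the numbers are illustrative, and production systems often use seasonality-aware baselines (for example, the same hour last week) instead.

```python
from __future__ import annotations

from statistics import mean

CHANGE_THRESHOLD = 0.5  # hypothetical: flag changes larger than +/-50%


def traffic_change(history_rps: list[float], current_rps: float) -> float:
    """Relative change of the current rate against the mean of previous windows."""
    baseline = mean(history_rps)
    return (current_rps - baseline) / baseline


history = [200.0, 210.0, 190.0, 200.0]  # requests per second in earlier windows
current = 320.0
change = traffic_change(history, current)
print(f"{change:+.0%}")  # +60%
if abs(change) > CHANGE_THRESHOLD:
    print("traffic pattern changed; check load and capacity")
```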

Errors

Errors are requests that fail. A high error rate can indicate a problem with the system, such as a bug in the code or a hardware failure.

Examples of errors include failed HTTP requests (e.g., HTTP 500 or HTTP 400 responses) and failed database queries. Organizations need to watch for spikes in errors in every component of a distributed system. Alerting on such spikes lets teams respond before a problem leads to further issues or cascading failures. In some self-healing systems, and in deployment strategies such as canary releases, an error spike can trigger automatic remediation, for example rolling back a faulty canary.
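A hedged sketch of computing an error rate per component and deciding which components should alert. The 1% threshold, the component names, and the choice to count only 5xx responses by default are assumptions; 4xx responses are often caused by bad client input rather than the service, so the sketch makes including them optional.

```python
from __future__ import annotations

ERROR_RATE_THRESHOLD = 0.01  # hypothetical alert threshold: 1% of requests


def error_rate(status_codes: list[int], include_client_errors: bool = False) -> float:
    """Fraction of failed requests; 5xx always counts, 4xx only when requested."""
    if not status_codes:
        return 0.0
    lowest_error = 400 if include_client_errors else 500
    failed = sum(1 for code in status_codes if code >= lowest_error)
    return failed / len(status_codes)


def components_to_alert(codes_by_component: dict[str, list[int]]) -> list[str]:
    """Names of components whose error rate exceeds the threshold."""
    return [
        name
        for name, codes in codes_by_component.items()
        if error_rate(codes) > ERROR_RATE_THRESHOLD
    ]


# Hypothetical window of status codes per component.
window = {
    "web_server": [200] * 97 + [500, 503, 404],
    "api_gateway": [200] * 99 + [404],
}
print(components_to_alert(window))  # ['web_server']
```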

Saturation

Saturation measures how “full” a system is, that is, how much of its most constrained resources (such as CPU, memory, disk I/O, or connections) is in use. A high saturation level can indicate that the system is nearing its capacity and may not be able to handle additional requests.

In the web application architecture above, saturation could be a web server or database running at 100% CPU utilization, or a query blocked on a database lock that causes other queries to take longer. Every computing resource and software component in a distributed system has limits beyond which it either stops working or can no longer process additional data.
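Saturation is often expressed as the fraction of a resource’s capacity in use, compared against a warning level set below the hard limit. The resource names, figures, and the 80% warning level below are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass

WARN_AT = 0.8  # hypothetical: warn well before the hard limit is reached


@dataclass
class Resource:
    name: str
    used: float
    capacity: float

    @property
    def saturation(self) -> float:
        """Fraction of capacity in use (1.0 means fully saturated)."""
        return self.used / self.capacity


def saturated(resources: list[Resource], warn_at: float = WARN_AT) -> list[str]:
    """Names of resources at or above the warning level."""
    return [r.name for r in resources if r.saturation >= warn_at]


resources = [
    Resource("web_server_cpu", used=3.6, capacity=4.0),         # CPU cores busy
    Resource("db_connection_pool", used=45.0, capacity=100.0),  # open connections
    Resource("db_memory_gib", used=12.0, capacity=16.0),        # memory in GiB
]
print(saturated(resources))  # ['web_server_cpu']
```

Lock contention, like the blocking query mentioned above, does not fit a simple used/capacity ratio; it is usually tracked through wait times or the length of the queue of blocked queries.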

The golden signals are important because they provide a comprehensive view of the health of a system. By monitoring these four metrics, organizations can uncover any potential issues early and address them to avoid outages or degraded performance.
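To illustrate the combined view, the sketch below holds one snapshot of all four signals and reports which ones fall outside their thresholds. The field names and every threshold value are hypothetical, and treating high traffic as a breach assumes a known provisioned capacity.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class GoldenSignals:
    p95_latency_ms: float  # latency of successful requests
    requests_per_s: float  # traffic
    error_rate: float      # fraction of failed requests
    saturation: float      # fraction of the most constrained resource in use


@dataclass
class Thresholds:
    max_p95_latency_ms: float
    max_requests_per_s: float  # e.g. the load the system was provisioned for
    max_error_rate: float
    max_saturation: float


def breached(s: GoldenSignals, t: Thresholds) -> list[str]:
    """Names of the signals that are outside their thresholds."""
    checks = {
        "latency": s.p95_latency_ms > t.max_p95_latency_ms,
        "traffic": s.requests_per_s > t.max_requests_per_s,
        "errors": s.error_rate > t.max_error_rate,
        "saturation": s.saturation > t.max_saturation,
    }
    return [name for name, bad in checks.items() if bad]


thresholds = Thresholds(300.0, 500.0, 0.01, 0.8)
now = GoldenSignals(p95_latency_ms=450.0, requests_per_s=520.0, error_rate=0.004, saturation=0.92)
print(breached(now, thresholds))  # ['latency', 'traffic', 'saturation']
```

Several signals breaching together, as in this made-up snapshot, can point to a capacity problem: more traffic pushes saturation up, which in turn raises latency.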

Importance of golden signals in observability

One of the goals of SRE is to reduce the time it takes to resolve a user-facing issue by monitoring the right things and responding quickly to alerts. The golden signals help achieve this goal, and in most use cases they are sufficient because they provide a concise and actionable view of system health.

By monitoring these key metrics, teams can quickly identify deviations from normal behavior; prioritize troubleshooting efforts; and take proactive measures to ensure system reliability, performance, and user satisfaction. The golden signals facilitate effective observability and help maintain and improve the overall quality of a system.

Before adopting the golden signals, organizations must establish proper observability and effective collaboration. This can be accomplished using tools and processes that onboard users and create a clear path to the root cause of problems. A mature platform that embeds the lessons learned by various teams is especially useful and productive.

Implementing observability in organizations

Organizations must create a culture and adopt practices that enable teams to gain insights into the internal workings and behaviors of complex systems.

Many tools and techniques can help achieve observability, but adopting them piecemeal leads to tool sprawl and the lack of a coherent view of the system. A better way is to centralize all the information gathered, along with its business context, and provide visibility across domains and business verticals. By sending all metrics, events, logs, and traces (MELT) to a single platform, together with metadata and tags that add business context, you can improve workflows and collaboration.
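To make the idea of attaching business context concrete, here is a hypothetical metric record serialized as JSON. The metric name, tag keys, and record shape are illustrative assumptions, not the schema of any particular platform; tools such as OpenTelemetry define their own attribute conventions.

```python
from __future__ import annotations

import json
import time
from dataclasses import asdict, dataclass, field


@dataclass
class MetricPoint:
    name: str
    value: float
    timestamp_s: float
    # Infrastructure metadata plus business-context tags (all keys are hypothetical).
    tags: dict[str, str] = field(default_factory=dict)


point = MetricPoint(
    name="http.server.errors",
    value=3.0,
    timestamp_s=time.time(),
    tags={
        "service": "checkout",
        "region": "eu-west-1",
        "business_unit": "retail",
        "customer_tier": "premium",
    },
)
print(json.dumps(asdict(point)))
```

With tags like these, the same error count can be sliced by service or by business unit, which is what lets one platform answer both operational and business questions.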


Traditional monitoring and/or siloed visibility does not work in a world driven by hybrid or cloud-native deployments, as application components have become smaller, more distributed, shorter-lived, and increasingly ephemeral. – Cisco at AWS re:Invent 2022

Golden signals are part of the FSO equation

Many organizations are recognizing the limitations of traditional monitoring approaches and shifting towards full-stack observability (FSO). Traditional monitoring typically focuses on metrics, dashboards, views, and sampling, providing insights primarily on availability and performance within individual operational domains. However, FSO takes a broader perspective by emphasizing an understanding of the user experience across cross-functional domains.

To achieve FSO, organizations must leverage the concept of golden signals and incorporate site reliability engineering (SRE) principles. The golden signals — latency, traffic, errors, and saturation — help capture critical aspects of system behavior. SRE principles emphasize reliability, scalability, and operability, promoting observability as a core pillar of system design and operation.

The increasing digitization of people and processes has raised expectations for better user experiences. Consequently, organizations require improved observability platforms that go beyond traditional approaches. These enhanced platforms facilitate the use of distributed tracing, allowing the visualization of requests as they traverse complex systems. High-cardinality time-series data, such as user-specific or context-rich information, can be efficiently handled to provide granular insights.

Furthermore, incorporating business context into the observability platform enables a deeper understanding of how system behavior impacts business outcomes. By streaming telemetry data in real time, organizations gain immediate insights into the performance and health of their systems. Additionally, leveraging artificial intelligence (AI) and machine learning (ML) algorithms can uncover patterns and anomalies that may go unnoticed by human eyes, leading to more proactive and predictive insights.

By embracing these advancements, organizations can effectively monitor the four golden signals and optimize their systems to deliver a superior experience in the digital age.