CML 2.5 Release Notes

Cisco Modeling Labs (CML) is a network simulation platform. CML 2.5 is the latest feature release of CML and introduces support for graphical annotations in the topology, resource limits on multi-user CML installations, and further improvements for cluster deployments.

New and Changed Information

This table shows any published changes to this CML 2.5 release notes document.

| Date | Changes |
|---|---|
| March 3, 2023 | Initial version for the CML 2.5.0 release. |
| March 30, 2023 | Added links to documentation. Added info about snap-to-grid. |
| April 17, 2023 | CML 2.5.1 release. Updated bug fixes and known issues. |

Installing or Migrating to CML 2.5

Upgrading to CML 2.5

If your CML instance is already running CML 2.3.0 or later, you can upgrade it to CML 2.5.1. See the In-Place Upgrade page for upgrade instructions. In-place upgrades from CML version 2.2.3 or earlier are not supported.

Migrating from an Existing CML 2.2 Installation

It is not possible to upgrade a CML 2.2.x installation to CML 2.5. You must migrate to a new CML 2.5 installation. If you have an existing CML 2.2.x installation, the migration tool will help you migrate your data from your existing CML installation to the new CML 2.5 installation. If your existing CML installation is CML 2.0.x or 2.1.x, we recommend first following the CML 2.2.x documentation to perform an in-place upgrade to CML 2.2.3 before migrating to a new CML 2.5 installation. The following video demonstrates the migration process from CML 2.2 to CML 2.3, and you can use the same steps to migrate from CML 2.2.3 to CML 2.5.
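The choice between an in-place upgrade and a migration depends only on the installed version. The rules in this section can be written as a small Python function. `upgrade_path` is a hypothetical helper, not part of the CML tooling, and it assumes plain dotted version strings such as "2.2.3".

```python
def upgrade_path(installed_version: str) -> str:
    """Return how to move from an installed CML 2.x version to CML 2.5.

    Hypothetical helper encoding the rules above; versions are assumed
    to be dotted integers such as "2.2.3".
    """
    parts = [int(p) for p in installed_version.split(".")]
    if len(parts) < 2 or parts[0] != 2:
        raise ValueError(f"unsupported CML version: {installed_version}")
    minor = parts[1]
    if minor >= 3:
        # CML 2.3.0 or later: in-place upgrade is supported.
        return "in-place upgrade"
    if minor == 2:
        # CML 2.2.x: install CML 2.5 fresh, then use the migration tool.
        return "migrate"
    # CML 2.0.x or 2.1.x: upgrade in place to 2.2.3 first, then migrate.
    return "upgrade to 2.2.3, then migrate"
```

For example, `upgrade_path("2.2.3")` returns `"migrate"`, and `upgrade_path("2.4.1")` returns `"in-place upgrade"`.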

Note: If you choose to migrate an existing, licensed CML 2.2.x installation to a new installation of CML 2.5, you must deregister the old CML instance before decommissioning it. See Licensing - Deregistration for instructions on deregistering a CML instance.

- Deregister your existing CML 2.2.x instance.

- Install CML 2.5 (see below).

  - Since you are migrating from an existing CML 2.2.x installation, mounting the refplat ISO before running the CML 2.5 installation is optional.

  - If the reference platform ISO is mounted before starting the CML 2.5 installation, the setup script will copy reference platform images from a mounted refplat ISO to the local disk.

  - You can skip the copying of reference platform images during installation because the migration tool will copy all of the reference platform images from your CML 2.2.x installation to the new CML 2.5 installation.

  - Only mount the new refplat ISO if you want to add the new versions of the reference platforms in addition to the existing versions from your CML 2.2.x installation.

- Follow the instructions in the migration tool's README.md to migrate data from your existing CML 2.2 installation to your new CML 2.5 installation.

- License your new CML 2.5 installation.

The migration tool can be found on your CML 2.5 installation at /usr/local/bin/virl2-migrate-data.sh. You can also download the latest version of the migration tool from https://github.com/CiscoDevNet/cml-community/tree/master/scripts/migration-tool.

The migration tool also supports offline migration. If you want to install CML 2.5 on the same server that is currently running CML 2.2.x, you can use the Offline Version Migration instructions to back up the data to a .tar file. That way, you can transfer the .tar file to a separate datastore temporarily while you reinstall with CML 2.5. As always, make sure that you deregister your old CML 2.2.x instance before decommissioning it and starting the CML 2.5 installation.

Installing CML 2.5

The CML Installation Guide provides detailed steps for installing your new CML 2.5 instance.

- In CML 2.5, all of the reference platform VM images must be copied to the local disk of the CML instance. Be sure to provision enough disk space for your new CML instance to accommodate the reference platforms.

- Downloading the refplat ISO file is optional if you are migrating from an existing CML 2.2.x instance. The migration tool will migrate all of your existing image definitions and VM images from your old CML 2.2.x instance.

- Be sure to check the System Requirements for updated system requirements.

- Important: After the reference platforms are copied to disk during the Initial Set-Up, wait a couple of minutes for the CML services to finish restarting before clicking OK to continue. If you click OK too soon, the initial set-up can fail. (See the Known Issues below.)

Summary of Changes

This section details new and changed features in the new release. Bug fixes are listed in the subsequent section.

UI Changes

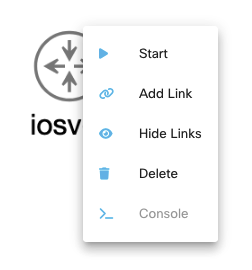

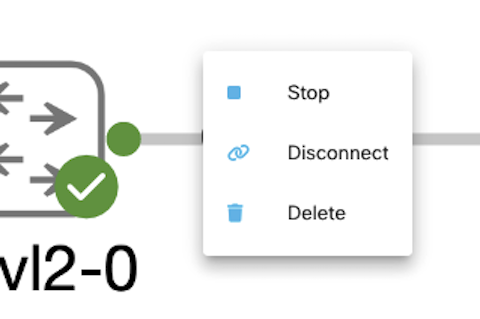

Right-Click Menu Replaces Hover Menu on Nodes

CML 2.5 moves away from the old hover menu in favor of a more traditional right-click context menu. In previous CML releases, when you hovered your mouse over a node in the Workbench, a ring-shaped action menu or hover menu appeared. This menu permitted you to start a node or open the console for a running node.

In CML 2.5.0+, if you hover the mouse over a node, nothing will happen. To open the context menu for a node in the Workbench, right-click on that node in the topology canvas.

| Menu | CML Versions | Screenshot |
|---|---|---|
| Right-click on a node to open the node's context menu. | CML 2.5.0+ |  |
| Right-click on a link to open the link's context menu. | CML 2.5.0+ |  |
| Obsolete. Hovering the mouse over a node will not open this menu in CML 2.5+. Right-click the node to open the context menu instead. | CML 2.0 - CML 2.4.1 |  |

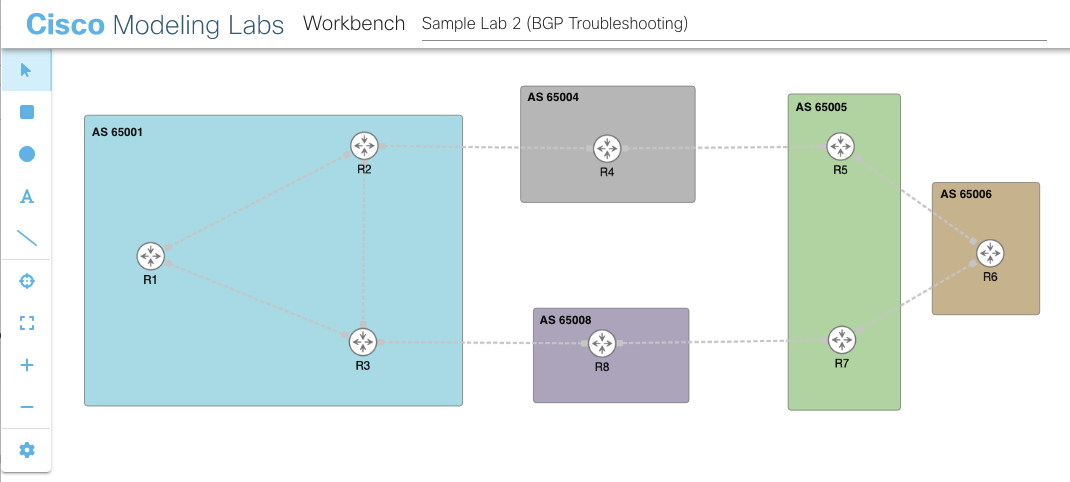

Annotations

CML 2.5 introduces the ability to add graphical annotations to a CML lab. You may use the tools in the Workbench's toolbar to add rectangles, ellipses, lines, arrows, and text annotations.

For more information, see Annotations in the CML User Guide.

Grid Lines and Snap-To-Grid

The Workbench canvas now incorporates a background grid and snap-to-grid for positioning elements in the canvas. Snap-to-grid makes it easy to align the elements in your lab. Snap-to-grid is documented in the Workbench page.
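Snap-to-grid works by rounding each coordinate to the nearest multiple of the grid spacing. The sketch below uses a 40-unit spacing purely for illustration. The actual Workbench grid size is not stated in these notes.

```python
from __future__ import annotations


def snap_to_grid(x: float, y: float, spacing: float = 40.0) -> tuple[float, float]:
    """Snap a canvas position to the nearest grid intersection.

    The 40-unit default spacing is a placeholder; the Workbench's actual
    grid size is not documented here.
    """
    if spacing <= 0:
        raise ValueError("spacing must be positive")
    # Round each coordinate to the nearest multiple of the grid spacing.
    # Note: Python's round() sends exact halves to the nearest even multiple.
    return (round(x / spacing) * spacing, round(y / spacing) * spacing)
```

With the default spacing, an element dropped at (53, 118) lands on (40.0, 120.0).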

Resource Limits

Resource limits are a new feature in Cisco Modeling Labs (CML) 2.5. This feature lets the administrator of a multi-user system limit the resources available to individual users or sets of users. The resources that can be limited are:

- Node licenses: How many licenses from the overall available licenses can be used by a user or a set of users?

- Memory usage: How much memory in megabytes can be used?

- CPU usage: How much CPU capacity can be used by a user or set of users?

- External Connectors: Which external connectors can be used by a user or set of users?

Resource limits are configured through resource pools.

Each user can be associated with at most one resource pool. If a user is not associated with any resource pool, that user is not restricted by any resource limits.

If exactly one user is associated with a resource pool, it is called an individual pool. If more than one user is associated with a resource pool, it is called a shared pool.

Because resource limits affect the operation of a multi-user system, it does not make sense to apply them to a single-user deployment such as CML-Personal or CML-Personal Plus.

For details on using resource limits, see Resource Limits in the CML Admin Guide.
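The following hypothetical in-memory model illustrates the pool rules:

- Each user belongs to at most one pool.
- A pool is individual or shared depending on how many users it has.
- Users without a pool are not restricted.

The class and field names are illustrative and are not the CML API schema. The license check assumes that each started node consumes one node license.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ResourcePool:
    """Hypothetical resource pool model; None means 'no limit'."""
    pool_id: str
    licenses: Optional[int] = None        # max node licenses
    ram_mb: Optional[int] = None          # max memory in megabytes
    cpus: Optional[int] = None            # max CPU capacity (vCPUs assumed)
    external_connectors: Optional[set[str]] = None  # allowed connectors
    users: set[str] = field(default_factory=set)

    @property
    def kind(self) -> str:
        # "unassigned" for a pool with no users is this sketch's own label.
        if len(self.users) == 1:
            return "individual"
        return "shared" if len(self.users) > 1 else "unassigned"


@dataclass
class Usage:
    """Combined current usage of every user in one pool."""
    licenses: int = 0
    ram_mb: int = 0
    cpus: int = 0


def assign(user: str, pool: ResourcePool, assignments: dict[str, ResourcePool]) -> None:
    """Associate a user with a pool, enforcing 'at most one pool per user'."""
    if user in assignments:
        raise ValueError(f"{user} already belongs to {assignments[user].pool_id}")
    pool.users.add(user)
    assignments[user] = pool


def can_start_node(pool: Optional[ResourcePool], usage: Usage, ram_mb: int, cpus: int) -> bool:
    """Check whether one more node (assumed to use one license) fits the pool."""
    if pool is None:
        return True  # users without a pool are not restricted
    checks = [
        (pool.licenses, usage.licenses + 1),
        (pool.ram_mb, usage.ram_mb + ram_mb),
        (pool.cpus, usage.cpus + cpus),
    ]
    return all(limit is None or needed <= limit for limit, needed in checks)


def can_use_connector(pool: Optional[ResourcePool], connector: str) -> bool:
    """Check whether an external connector is allowed by the pool."""
    if pool is None or pool.external_connectors is None:
        return True
    return connector in pool.external_connectors
```

In a shared pool, `usage` must be the sum across all of the pool's users. That is what makes the limit shared rather than per user.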

Node Definitions and Image Definitions

Changing or deleting a node or image definition can have system-wide impacts on your CML server. These changes affect all users whose labs use the affected node or image definition. To help CML administrators provide a stable network simulation platform, CML 2.5 no longer permits non-admin users to create, modify, or delete node or image definitions. If you are a non-admin user, contact one of the users with administrator privileges on your CML instance for assistance with creating, modifying, or deleting a node or image definition.

CML 2.5 increases the IOS XRv 9000 node definition's boot.timeout parameter from 1000 seconds (about 16 minutes) to 3600 seconds (1 hour). If you provide a day-0 configuration with your IOS XRv 9000 nodes, ensure that it enables console logging. If console logging is disabled, CML will not be able to transition your IOS XRv 9000 nodes to BOOTED state until the 1-hour timeout has elapsed.

CML normally transitions a node to BOOTED state as soon as the node outputs one of the Boot Line messages listed in the node definition's Boot section. Those are the messages that indicate that the node has fully booted and loaded the day-0 configuration. If the node does not output any of those messages, CML uses the Timeout to transition the node to the BOOTED state. The old IOS XRv 9000 timeout value was too small, and CML was prematurely transitioning the node to BOOTED state on some CML instances. The longer timeout should prevent that from happening, but if your CML instance was relying on the boot timeout to transition the IOS XRv 9000 nodes to BOOTED state, it may appear that your IOS XRv 9000 nodes are taking much longer to boot.
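The BOOTED transition described above can be sketched as a simplified model. It assumes that boot lines are matched as plain substrings of console output; the node definition may support other matching. The `SYSTEM READY` marker in the example below is made up.

```python
from typing import Iterable


def booted_at(console: Iterable[tuple[float, str]], boot_lines: list[str], timeout: float) -> float:
    """Return the time (seconds after start) at which the node is marked BOOTED.

    `console` yields (seconds_since_start, line) pairs in time order. Boot
    lines are matched as plain substrings here, which is an assumption.
    """
    for seconds, line in console:
        if seconds > timeout:
            break  # the timeout fired before this line was printed
        if any(marker in line for marker in boot_lines):
            return seconds  # a boot line was seen: node is BOOTED now
    return timeout  # no boot line before the timeout: BOOTED by timeout
```

Suppose a node prints its boot line at 1500 seconds:

- `booted_at(console, ["SYSTEM READY"], timeout=3600)` returns 1500.0.
- With `timeout=1000`, the call returns 1000. The old timeout would have marked the node BOOTED before it had finished booting.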

Clustering (Phase 2)

CML 2.4.0 introduced support for CML clusters. This release builds on the initial clustering capabilities, improving the handling of disconnected cluster compute members.

- a disconnected compute host puts nodes that were deployed on that compute host into a DISCONNECTED state

- a node in DISCONNECTED state may be abandoned by wiping the node and restarting it anew on another available compute

- compute host API to retrieve state and to modify admission state from READY (fully operational) to ONLINE (does not allow node starts) to UNREGISTERED (slated for removal; not available for the controller host)

- compute host API to remove an UNREGISTERED compute host from a cluster

- improved node state consistency in the face of unexpected transitions

- administration of cluster computes from the controller's Cockpit page, available only to the initial system administrator user, whose username (sysadmin by default) must be the same on all members of the cluster
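The admission-state rules above can be modeled with a small state holder. This is a hypothetical local sketch of the documented behavior, not the compute host API itself. It encodes these rules:

- Only READY, connected hosts accept node starts.
- Only UNREGISTERED hosts can be removed.
- The controller can never be UNREGISTERED.
- Nodes on a disconnected host show as DISCONNECTED.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class Admission(Enum):
    READY = "READY"                # fully operational
    ONLINE = "ONLINE"              # connected, but node starts are not allowed
    UNREGISTERED = "UNREGISTERED"  # slated for removal


@dataclass
class ComputeHost:
    name: str
    is_controller: bool = False
    admission: Admission = Admission.READY
    connected: bool = True

    def set_admission(self, state: Admission) -> None:
        if state is Admission.UNREGISTERED and self.is_controller:
            raise ValueError("the controller host cannot be unregistered")
        self.admission = state

    def accepts_node_starts(self) -> bool:
        return self.connected and self.admission is Admission.READY


def remove_host(cluster: dict[str, ComputeHost], name: str) -> None:
    """Remove a host from the cluster; only UNREGISTERED hosts may be removed."""
    if cluster[name].admission is not Admission.UNREGISTERED:
        raise ValueError(f"{name} must be UNREGISTERED before removal")
    del cluster[name]


def visible_node_state(host: ComputeHost, last_state: str) -> str:
    """Nodes deployed on a disconnected host are shown as DISCONNECTED."""
    return last_state if host.connected else "DISCONNECTED"
```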

API Changes

CML 2.5 introduces several changes to the REST-based web service APIs. For details about a specific API, log in to the CML UI and click Tools > API Documentation to view the CML API documentation page.

APIs Removed in CML 2.5

This table summarizes the API endpoints that have been removed in CML 2.5.

| Removed API Endpoint | Use this API Endpoint Instead |
|---|---|
| GET /telemetry_data | GET /telemetry |
| PUT /lab/{lab_id}/undo | No endpoint (the feature was removed) |

APIs Deprecated in CML 2.5

This table summarizes the API endpoints that have been deprecated in CML 2.5.

| Deprecated API Endpoint | Use this API Endpoint Instead |
|---|---|
| GET /labs/{lab_id}/external_connections | GET /system/external_connections |
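When auditing client scripts for these changes, a simple lookup over method and path templates is enough to flag affected calls. The sketch below takes its mapping verbatim from the removed and deprecated endpoint tables. The `matches` and `audit` helpers are hypothetical.

```python
from __future__ import annotations

from typing import Optional

# Replacement endpoints from the tables above; None means no replacement.
REPLACEMENTS: dict[str, Optional[str]] = {
    "GET /telemetry_data": "GET /telemetry",
    "PUT /lab/{lab_id}/undo": None,
    "GET /labs/{lab_id}/external_connections": "GET /system/external_connections",
}
REMOVED = {"GET /telemetry_data", "PUT /lab/{lab_id}/undo"}


def matches(template: str, call: str) -> bool:
    """True if a concrete call such as 'GET /labs/abc/x' fits a path template."""
    t_method, t_path = template.split(" ", 1)
    c_method, c_path = call.split(" ", 1)
    t_parts = t_path.strip("/").split("/")
    c_parts = c_path.split("?", 1)[0].strip("/").split("/")  # ignore query string
    if t_method != c_method or len(t_parts) != len(c_parts):
        return False
    # A {placeholder} segment matches any non-empty segment.
    return all(
        t == c or (t.startswith("{") and t.endswith("}") and c != "")
        for t, c in zip(t_parts, c_parts)
    )


def audit(calls: list[str]) -> list[str]:
    """Return one warning per call that hits a removed or deprecated endpoint."""
    warnings = []
    for call in calls:
        for template, replacement in REPLACEMENTS.items():
            if matches(template, call):
                status = "removed" if template in REMOVED else "deprecated"
                hint = f"use {replacement}" if replacement else "no replacement"
                warnings.append(f"{call}: {status}, {hint}")
                break
    return warnings
```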

New APIs in CML 2.5

This table summarizes the API endpoints that are new in CML 2.5.

| API Endpoint | Description |
|---|---|
| GET /labs/{lab_id}/annotations | Get a list of all annotations for the specified lab. |
| POST /labs/{lab_id}/annotations | Add an annotation to the specified lab. |
| GET /labs/{lab_id}/annotations/{annotation_id} | Get the details for the specified annotation. |
| DELETE /labs/{lab_id}/annotations/{annotation_id} | Delete the specified annotation. |
| PATCH /labs/{lab_id}/annotations/{annotation_id} | Update details for the specified annotation. |
| GET /system/compute_hosts | Get a list of all compute hosts in this cluster. |
| GET /system/compute_hosts/{compute_id} | Get the details for the specified compute host. |
| DELETE /system/compute_hosts/{compute_id} | Remove the specified unregistered compute host from the cluster. |
| PATCH /system/compute_hosts/{compute_id} | Update the administrative state of the specified compute host. |
| GET /system/external_connectors | Get a list of valid external connectors. |
| GET /resource_pools | Get a list of all of the resource pool IDs or resource pool objects. |
| POST /resource_pools | Add a resource pool or template, or multiple pools for each supplied user. |
| GET /resource_pools/{resource_pool_id} | Get the details for the specified resource pool. |
| DELETE /resource_pools/{resource_pool_id} | Delete the specified resource pool. |
| PATCH /resource_pools/{resource_pool_id} | Update details for the specified resource pool. |
| GET /resource_pool_usage | Get a list of all resource pool usage information. |
| GET /resource_pool_usage/{resource_pool_id} | Get usage information for the specified resource pool. |
| GET /labs/{lab_id}/resource_pools | Get a list of resource pools used by nodes in the specified lab. |
| GET /telemetry/events | Get a list of telemetry events. |
| GET /telemetry | Get the current state of the telemetry setting. |
| PUT /telemetry | Set the state of the telemetry setting. |
| POST /feedback | Submit feedback to the dev team. |

APIs Changed in CML 2.5

This table summarizes the APIs that have been changed in CML 2.5.

| API Endpoint | Summary of Change |
|---|---|
| GET /labs/{lab_id}/topology, GET /nodes, POST /import | The structure of the response has changed to accommodate the dropped compute_id attribute. |
| POST /labs/{lab_id}/nodes | The structure of the request has changed to accommodate the dropped boot_progress attribute. |
| GET /labs/{lab_id}/nodes/{node_id} | The structure of the response has changed to accommodate additional node properties. |
| GET /system/auth/config, PUT /system/auth/config | The structure of the response has changed to accommodate improvements in LDAP server regex and certificate max size. |
| GET /node_definitions, PUT /node_definitions, POST /node_definitions, GET /node_definitions/{def_id}, GET /node_definitions/{def_id}/image_definitions, GET /simplified_node_definitions, GET /node_definition_schema, GET /image_definition_schema, GET /image_definitions, PUT /image_definitions, GET /image_definitions/{def_id} | The structure of the response has changed to accommodate improvements in node and image ID validation. |
| PUT /node_definitions, POST /node_definitions, DELETE /node_definitions/{def_id}, PUT /image_definitions, DELETE /image_definitions/{def_id} | Only users with admin privileges can call these APIs. Non-admin users may not create, modify, or delete node definitions or image definitions. |
| GET /nodes, GET /labs/{lab_id}/nodes, GET /labs/{lab_id}/nodes/{node_id}, GET /labs/{lab_id}/nodes/{node_id}/interfaces, GET /labs/{lab_id}/interfaces/{interface_id} | We added a new, optional query parameter, operational. Specify operational=true to request that the response include the operational data instead of just configuration. |
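As an illustration of the new `operational` query parameter, the helper below builds the URL for GET /labs/{lab_id}/nodes/{node_id}. The `/api/v0` prefix in the example base URL is an assumption about how the API is mounted. Confirm the exact path on the Tools > API Documentation page.

```python
from urllib.parse import quote, urlencode


def node_url(api_base: str, lab_id: str, node_id: str, operational: bool = False) -> str:
    """Build the URL for GET /labs/{lab_id}/nodes/{node_id}.

    `api_base` (for example "https://cml.example.com/api/v0") is an assumption
    about where the API is mounted on your server.
    """
    url = f"{api_base.rstrip('/')}/labs/{quote(lab_id, safe='')}/nodes/{quote(node_id, safe='')}"
    if operational:
        # Ask for operational data in addition to the configuration.
        url += "?" + urlencode({"operational": "true"})
    return url
```

For example, `node_url("https://cml.example.com/api/v0", "lab1", "n0", operational=True)` returns `https://cml.example.com/api/v0/labs/lab1/nodes/n0?operational=true`. Send the request with your HTTP client of choice, together with your authentication token.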

Other Changes

| Summary | Release Notes |
|---|---|
| Do not allow non-admin users to modify node or image definitions. | CML 2.5.0+ restricts the creation/import, modification, and deletion of node definitions and image definitions to CML administrators. |
| Cluster controller cockpit setup for computes | In a cluster controller's Cockpit UI, all registered compute hosts are now available through a drop-down selector. For this to work seamlessly, you must log in with the system admin account configured during initial setup, and that account name must be the same on all hosts. |
| Remove deprecated CoreOS | Dropped the deprecated CoreOS from the refplat ISO. It can be obtained from previously released refplat ISOs if needed. |
| Common passwords database is outdated | The common-password dictionary was updated to contain the 100,000 most commonly used passwords. |
| Fix types in client library | Improved client library type hints. |
| Support link labels in client | Added a read-only link label in the client library. |
| Validate and reject VM image's content after uploading if it's not a qcow2 | Fixed a bug where CML would create an unusable image definition with an associated disk image in an unsupported format. Only qcow2 and qcow images are supported. |
| Unify request calling code for virl2_client | The requests module has been replaced with the httpx module in the client library. |
| Permit larger certificate chains in the LDAP authentication settings | Increased the maximum certificate chain size in the LDAP authentication settings from 4096 to 32768. |
| Reset stats for disconnected compute | Reset compute statistics of disconnected computes and node statistics for nodes deployed on a disconnected compute. |
| Improve the name of downloaded console log | Replaced the lab ID with the lab title in the downloaded console log filename. Added the lab title to the downloaded pcap filename. |
| Cockpit cleanup | In a cluster deployment, relevant Cockpit functions in the CML Maintenance page now work from the controller's page and apply to all computes. For this to function, you must log in to Cockpit with the same system admin user that was set up during initial configuration, and the same user must be configured on all compute hosts. |
| Make required columns always enabled | Improved the system administration page in the UI to always display the actions columns. |
| Support migration via IPv6 | Version migration is now supported via IPv6. |
| Remove obsolete buttons from CML Cockpit page on computes | Buttons for cluster features were removed from the CML2 page in Cockpit on compute members. These features are now run from the controller. |
| Cleanup all compute members at once | Cockpit 'Clean Up' action is now cluster-wide. |
| Upgrade all compute members at once | Cockpit 'Software Upgrade' action is now cluster-wide. |
| Download all log files via controller | Cockpit 'Log File Download' action is now cluster-wide. |
| API to list, disable, and remove a compute | Implemented an API to put computes into the maintenance (ONLINE) and decommissioned (UNREGISTERED) administrative states, and to remove unregistered computes. |
| Add L2 filters to external bridge | Implemented a Cockpit feature, "Bridge Protection", that ensures that no L2 / BPDU / STP traffic is forwarded on the external bridge(s). The feature is enabled by default and ensures that external devices won't ERR_DISABLE ports because of unwanted L2 traffic. |
| Improve generic messaging for failed node actions | Improved notifications for failed lab actions. |
| Use PyATS credentials in ANK | Improved the node configuration generator to include PyATS credentials configured in node/image definitions. |
| PyATS credentials in an image definition (minimal) | Added support for PyATS credentials and configuration in the image definition schema. |
| Allow for disconnected nodes | Nodes residing on compute hosts that are not connected for any reason are now marked as DISCONNECTED; the state is reset once the connection to the compute host is re-established. Users may wipe such a node and then deploy it on another viable compute host in the cluster. |
| Improve compute installation robustness | Various robustness improvements were made to the CML compute installation process. |
| Refactor initial LLD consistency check | Refactored the compute consistency check and diagnostics data. |
| External Connector Bridge selection in UI should use API | Improved the UI/Workbench configuration tab for external connectors to display only existing and valid external connectors. |
| Restrict image uploads to qcow in Client Lib | Restricted extensions of uploaded files to .qcow and .qcow2 in the client library. |
| Expose lvm-maximize-root-volume script to expand disk space in CML | Implemented the Cockpit 'Maximize disk' cluster-wide action. |
| Stop using deprecated property in pyats_testbed API | Replaced the deprecated 'series' key with the 'platform' key to prevent deprecation warnings and possible future compatibility issues in PyATS calls. |
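Two of the changes above restrict disk images to qcow and qcow2. The sketch below shows both kinds of check:

- An extension check, like the one described for the client library.
- A content check based on the qcow header. Per the QEMU image format, the header starts with the magic bytes `QFI\xfb`, followed by a big-endian version number: 1 for qcow, and 2 or 3 for qcow2.

This is an illustration only, not CML's actual validation code.

```python
from __future__ import annotations

import struct
from pathlib import Path
from typing import Optional

QCOW_MAGIC = b"QFI\xfb"  # first four bytes of every qcow/qcow2 image
ALLOWED_SUFFIXES = {".qcow", ".qcow2"}


def qcow_format(header: bytes) -> Optional[str]:
    """Identify a qcow/qcow2 image from its first 8 bytes, or return None."""
    if len(header) < 8 or header[:4] != QCOW_MAGIC:
        return None
    (version,) = struct.unpack(">I", header[4:8])  # big-endian version field
    if version == 1:
        return "qcow"
    if version in (2, 3):
        return "qcow2"
    return None


def check_upload(filename: str, header: bytes) -> str:
    """Reject an upload by extension first, then by content."""
    suffix = Path(filename).suffix.lower()
    if suffix not in ALLOWED_SUFFIXES:
        raise ValueError(f"unsupported file extension: {suffix or '(none)'}")
    fmt = qcow_format(header)
    if fmt is None:
        raise ValueError(f"{filename} is not a qcow or qcow2 image")
    return fmt
```

To check a local file, pass its first 8 bytes, for example `with open(path, "rb") as f: check_upload(path, f.read(8))`.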

Bug Fixes

| Bug Description | Resolution |
|---|---|
| Some sample labs had invalid interface slot numbering for Ubuntu nodes. | Fixed in 2.5.1. All labs in Tools > Sample Labs now use the correct interface slot number for Ubuntu nodes. |
| The controller service was not syncing up correctly after a service restart if a running or stopped lab had a node with invalid interface slot numbering. | Fixed in 2.5.1. |
| Typos in the CML UI. | Fixed in 2.5.1. Fixed some typos and other inconsistencies in UI elements associated with new CML 2.5.0 features. For example, "Draw elipse" was corrected to "Draw ellipse". |
| The Tools > Resource Limits page showed incorrect behavior if you had no resource pool assigned and vertically scrolled the page. | Fixed in 2.5.1. Fixed resource pool overflow on the resource limits page. When you scroll vertically, the resource pool table no longer overflows the top menu bar. |
| When configured to use LDAP authentication, some CML instances are affected by CVE-2023-20154. See the page for advisory ID cisco-sa-cml-auth-bypass-4fUCCeG5. | Fixed in 2.5.1. When CML is configured to use LDAP authentication, it now ignores reference entities (i.e., entries with type "searchResRef") in the LDAP search results. CML also now requires that the User Filter and Admin Filter return exactly one matching searchResEntry to be considered a successful match. |
| Test whether Verify TLS check box in LDAP config works as expected | Fixed a scenario in which an LDAP server returned no attributes. |
| Handle invalid LDAP server | CML now raises a proper notification when an invalid LDAP server is configured, instead of returning a 500 Internal Server Error. |
| LDAP rebind failures are causing 5-minute outages in CML login | Fixed a bug that interpreted a soft error as a hard error and blocked the LDAP server from even being considered as a valid server for a couple of minutes. |
| LDAP auth requires that users have read-only access to LDAP tree | Fixed a bug affecting locked-down OpenLDAP servers, where users could bind to the LDAP server but could not see any memberOf attributes. |
| OVA and ISO install files for CML did not include the latest grub-efi packages. | Fixed in 2.5.1. Resolved an issue that prevented the latest grub-efi packages from being included in the CML builds. |
| A script to fix grub caused some CML in-place upgrades to fail. | Fixed in 2.5.1. Expected failures are now ignored when using the .pkg file to perform a CML in-place upgrade with specific environment types. |
| ASAv sub-interfaces do not work. | Changed the ASAv driver to vmxnet3 to make sub-interfaces work. |
| fix lab import validation | Loosened the schema to allow empty image definitions in node data. |
| Controller may fail to start | Fixed a bug where the controller failed to start if the connection to a registered compute was terminated during initialization. |
| Invalid logs are downloaded when CML services are not running | Fixed a bug that prevented up-to-date logs from being downloaded when CML services are unavailable. |
| Computes do not attempt to resync | Fixed a bug where a failed compute consistency check was not retried unless the compute was disconnected and reconnected. |
| Allow null configuration on import | Configuration for lab nodes may now be null instead of an empty string; nodes whose definitions do not support configuration provisioning always use this value. Nodes that support configuration provisioning use any default configuration specified in the node definition. |
| Logs are not refreshed when downloaded from Cockpit | Fixed a cache issue when downloading logs multiple times via a single Cockpit session in Firefox. |
| Diagnostics must not contain node configurations | The diagnostics API now reports, for each deployed node, a description of the node's initial configuration rather than the configuration itself. |
| iosxr9kv may take longer to boot than 1000 seconds | Increased the IOS XRv 9000 boot timeout to 1 hour to prevent such nodes from moving into BOOTED state prematurely. |
| Invalid boot line for iosxr9kv | Fixed a bug that moved IOS XRv 9000 nodes to BOOTED state prematurely, resulting in improperly configured nodes for which configuration extraction and other features were unavailable. Now, nodes are moved to BOOTED when they have actually booted and their console can be accessed. |
| Event storages are not regulated | Implemented a limit on events stored in memory. |
| Do not log traceback when config extraction fails | Extensive tracebacks are no longer logged when configuration extraction fails for a node. |
| A partially imported lab is not removed when import fails | During lab import, the controller now rejects some common causes of import failure before the import is attempted. After a major import failure, no partially created lab is left on the controller. |
| Missing node defs breaking UI | Fixed a bug in the Workbench display of labs that have nodes whose node definitions are missing. |
| grub-efi-amd-signed package fails software updates | Fixed an issue where Ubuntu software upgrades failed on updating the grub-efi-amd-signed package due to an inconsistency in the grub-efi Debian package configuration, resulting in an incompletely upgraded system. The issue is fixed by an automatic upgrade using the CML 2.5.0 .pkg file. |
| CML does not let you use Standard VGA video model | Replaced invalid KVM video drivers to fix VNC not working with specific video models. |
| Security issues in curl 7.83.1 | Updated curl and other libraries to the latest available versions to fix all known vulnerabilities. |
| A node cannot be wiped if a node definition is missing | Fixed a bug that prevented a stopped node from being started or wiped when its node definition was missing. |
| Allow IPv6 IP address for Licensing > Transport Settings > Proxy host | Allowed IPv6 in licensing transport proxy server settings. |
| Do not require stop VMs for licensing reset | Running VMs no longer need to be stopped before resetting licensing via Cockpit. |
| ASAv node definition is missing interfaces | Added two missing interfaces to the ASAv node definition. |
| *.orig and *.rej files in node definition directory may cause failures during a migration | Invalid node definitions are now ignored during a migration. |
| vCPU and RAM limits are inconsistent across CML | Unified maximum node resource limits across the product to 128 vCPUs, 1 TB memory, and 4 TB boot and data disks. |
| Links in node info popup are not reachable | Fixed the popup window with node information in the Workbench. You can now click links in the popup, and it no longer disappears while the mouse is inside it. |
| Initial setup hostname check is not anchored | Fixed a bug where initial setup allowed invalid hostnames to be configured. |
| Remove orphaned VMs doesn't work in a cluster deployment | Cockpit 'Remove Orphaned VMs' action is now cluster-wide. |
| IPv6 licensing transport is not allowed | Allowed IPv6 in licensing transport gateway server settings. |
| Resolve.sh must force IPv6 address | Fixed a bug where cluster hosts failed to resolve the link-local IPv6 address of remote computes' cluster interfaces in nonstandard network environments. |
| Lab events do not contain timestamp | Timestamps for lab events are now properly displayed in the UI (Diagnostics page). |
| User settings page does not load properly when system is not licensed | Improved loading of data on the user settings page in the UI on an unlicensed VM. |
| Build initial configuration error in started labs | Build initial configuration now runs only over undefined nodes, to prevent unwanted errors. |
| Default image definition may be removed while in use | A default image definition that is in use can no longer be removed. |
| Node definition IDs must be at most 250 bytes long | Node and image definition IDs are now validated for use in the filenames that store these definitions before the definitions are added. |
| Possible memory leak from eventBus | Fixed a possible memory leak in the browser. |
| Node Create API ignores most parameters | Fixed a bug where configuration, link hiding, and simulation attributes were ignored when creating a new node through the API. Fixed a bug where the node update API call allowed setting simulation attributes that the node definition prohibited from being set. Fixed a bug where the node create and topology import API calls silently skipped simulation attributes that the node definition prohibited from being set per node; as with the node update API call, attempts to set these values for a node are now rejected. Fixed a bug where, for simulated nodes, the simulation attributes returned by the node get API call and exported to a topology reflected the values used when starting the node. Now, the values match exactly what was set for that specific node regardless of the node's simulation state, which means they are null if not set per node. For compatibility with previously exported topologies, the topology import API ignores zero-valued simulation attributes (zero is normally prohibited by the schema for each of these values) and treats them as null; if a simulation property is prohibited by the node definition schema, its value in the imported topology must be omitted, zero, null, or exactly the same as the value in the node definition. Fixed a bug where the Node API specification did not match the set of node attributes actually supported for node create, update, get, and export in topology. The Node.config property and the associated config parameter of Lab.create_node were both renamed to configuration. The config property is still present but deprecated; the parameter can no longer be passed under its original name. The client Node.update function has a new push_to_server parameter; if set to True, the update values are first pushed to the server instance. The client Node.hide_links property was added. |
| Sometimes elements are not loading in user settings page | Fixed a blank settings page on unlicensed CML. |
| It was possible to create an image definition where the Boot Disk Size was too small for the corresponding VM image. CML could never start a node that used such an image definition. | Fixed a bug where an unusable image definition could be added even though its own boot disk size, or its node definition's, was lower than its virtual size. Fixed a bug where a node whose boot disk size was lower than its disk image's virtual size was not prevented from starting, and then failed to start. Fixed a bug where a node's effective boot disk size was applied to all disks of the image definition instead of only the first. |
| Handle unreadable image definitions properly | Improved validation around image definitions. Invalid image definitions are now properly rejected. |
| Fix incorrect info in node definition descriptions | Updated incorrect descriptions in the Alpine and Ubuntu node definitions. |
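The topology import compatibility rules for simulation attributes, described in the Node Create API row above, can be expressed as a normalization step. This sketch is illustrative. The attribute names are assumptions, and so is storing an accepted prohibited value as null; neither is documented behavior.

```python
from __future__ import annotations

from typing import Any, Optional

# Illustrative attribute names; check the node schema in the API documentation.
SIM_ATTRS = ("cpus", "ram", "cpu_limit", "data_volume", "boot_disk_size")


def normalize_sim_attrs(
    node: dict[str, Any],
    definition_values: dict[str, Optional[int]],
    prohibited: set[str],
) -> dict[str, Optional[int]]:
    """Normalize per-node simulation attributes from an imported topology."""
    result: dict[str, Optional[int]] = {}
    for name in SIM_ATTRS:
        value = node.get(name)
        if value == 0:
            value = None  # zero from older exports is treated as null
        if name in prohibited:
            # Only omitted, zero, null, or the node definition's own value is accepted.
            if value is not None and value != definition_values.get(name):
                raise ValueError(f"{name} cannot be set per node for this node definition")
            value = None  # assumption: stored as "not set per node"
        result[name] = value
    return result
```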

Known Issues and Caveats for CML 2.5

| Bug Description | Notes |

|---|---|

| CVE-2023-20154 affects some CML instances that use LDAP authentication. | We recommend for all customers who are using LDAP authentication with CML to upgrade to CML 2.5.1. For more details, see advisory ID cisco-sa-cml-auth-bypass-4fUCCeG5. |

scp to port 22 fails with error subsystem request failed |

The service that runs on port 22 of your CML server only supports scp with the SCP protocol. Some scp clients use the SFTP protocol by default. Workaround: If you're using a newer version of an scp client that defaults to SFTP, see whether it provides an option, such as -O, to use the older SCP protocol. If so, retry the copy operation using scp -O. |

| The controller service was not syncing up correctly after a service restart if a running or stopped lab had a node with invalid interface slot numbering. | Fixed in 2.5.1. |

| Some sample labs had invalid interface slot numbering for Ubuntu nodes. | Fixed in 2.5.1. Upgrade to that release before importing sample labs that use the Ubuntu node type. |

| Cannot run simulations on a macOS system that uses Apple silicon (e.g., the Apple M1 chip). | CML and the VM Images for CML Labs are built to run on x86_64 processors. In particular, CML is not compatible with Apple silicon (e.g., the Apple M1 chip). Workaround: There are no plans to support ARM64 processors at this time. Deploy CML to a different system that meets the system requirements for running CML. |

| Even if you were able to run network simulations with CML 2.2.x on VMware Fusion and macOS Big Sur or above, the same labs no longer start after migrating to CML 2.3.0+. | A default setting in the CML VM changed between CML 2.2.3 and CML 2.3.0. Because of the new virtualization layer in macOS 11+, this setting causes errors when running the CML VM on VMware Fusion. Workaround: Follow the instructions to disable nested virtualization in the CML VM. |

| Bug in macOS Big Sur. After upgrading to Big Sur and VMware Fusion 12.x, simulations no longer start. | macOS Big Sur introduced changes to the way macOS handles virtualization, but the problems were resolved in later versions of macOS Big Sur and VMware Fusion. Workaround 1: If you are still experiencing problems, be sure to upgrade to the latest version of VMware Fusion 12.x and the latest bug fix release of your current macOS version. Workaround 2: If you are using CML 2.3+, follow the instructions to disable nested virtualization in the CML VM. |

| Installation fails if you click OK to continue installation after the reference platforms are copied but before the services finish restarting. | Workaround 1: After the reference platforms are copied, wait a couple of minutes before clicking the OK button to continue with the initial set-up. Workaround 2: If the installation failed because you clicked OK too quickly, reboot the CML instance and reconfigure the system. All refplats are already copied, so the initial set-up screen won't restart the services this time. Note that the previously configured admin user will already exist, so you will need to create a new admin account with a different username to complete the initial set-up. |

| Deleting or reinstalling a CML instance without first deregistering leaves the license marked as in use. Your new CML instance indicates that the license authorization status is out of compliance. | If you have deleted or otherwise destroyed a CML instance without deregistering it, then the Cisco Smart Licensing servers will still report that instance’s licenses are in use. Workaround 1: Before you destroy a CML instance, follow the Licensing - Deregistration instructions to deregister the instance. Properly deregistering an instance before destroying it will completely avoid this problem. Workaround 2: If you have already destroyed the CML instance, see the instructions for freeing the license in the CML FAQ. |

| When the refplat ISO is not mounted until after the initial set-up steps have started, CML will not load the node and image definitions after you complete the initial set-up. | Workaround: Log into Cockpit, and on the CML2 page, click the Restart Controller Services group to expand it, and click the Restart Services button. CML will load the node and image definitions when the services restart. |

| When the incorrect product license is selected, the SLR (Specific License Reservation) can still be completed. It eventually raises an error, but the SLR reservation will be complete with no entitlements. | Workaround: If you accidentally select the wrong product license, release the SLR reservation and correct the product license before trying to complete the SLR licensing again. |

| If the same reservation authorization code is used twice, the CML Smart agent service crashes. | Workaround: Do not use the same reservation authorization code more than once. |

| If you have read-only access to a shared lab, some read/write actions are still shown in the UI. Attempting to change any properties or to invoke these actions raises error notifications in the UI. | Workaround 1: Do not attempt to invoke the read/write actions when working with a lab for which you only have read-only permissions. Workaround 2: Ask the lab owner to share the lab with read/write permissions so that you can make those changes to the lab. |

| If you have read-only access to a shared lab, you cannot see the current link conditioning properties in the link's Simulate pane. | Workaround: Ask the lab owner to share the lab with read/write permissions if you need to access the link conditioning properties. |

| The algorithm for computing the available memory does not take into account the maximum memory that all running nodes may eventually need. It only checks the currently allocated memory, so CML will permit you to start nodes that fit within the current available memory of the CML server. Later, if the memory footprint of the already-running nodes grows and exceeds the available memory on the CML server, those nodes will crash. | Workaround 1: Launch fewer nodes to stay within your CML server's resources. Workaround 2: Add memory to the CML server to permit launching additional nodes. This workaround only moves the point where the CML server will eventually hit its limit, so you must remain aware of your CML server's resource limits or ensure that the CML server has sufficient memory to accommodate all labs that will be running on it simultaneously. |

| On a CML server or VM with multiple NICs, the CML services are active and running and do not report an error, but the CML UI and APIs are not reachable. The CML services are listening on the wrong interface: when the CML instance has multiple NICs, CML chooses the first interface in alphabetical order and configures it as the CML server's management interface, which may not be the correct interface. For example, an interface named enp10s0 would be selected before enp9s0. | Workaround 1: Change the network connections to the CML server so that the interface that CML selects is the one that you'd like it to use for its management interface. Workaround 2: After the CML server boots up, configure the network interface manually in the Cockpit Terminal. First, run nmcli con. That command shows which interface bridge0-p1 is actually connected to. In this example, let's say that bridge0-p1 is connected to ens10p0, and we want it to be connected to ens9p0. We need to remove the bridge0-p1 connection and then recreate it with a connection to the right interface. Adjust the following commands based on your interface IDs: nmcli con del bridge0-p1. In the end, you should see only 3 active connections (green with a device set). The first two are bridge0 and virbr0, and we do not touch these; the third one is bridge0-p1, which should now have the correct interface listed as the device. |

| When starting a CSR 1000v node for the first time or after a Wipe operation, the interfaces are all in the shutdown state. | Workaround 1: Add no shut to each interface config in each CSR 1000v node's Edit Config field in the UI. Workaround 2: Use the EEM applet on the CSR 1000v page. |

| New nodes cannot be started when CPU usage is over 95%, when RAM usage is over 80%, or when disk usage is over 95%. | Workaround 1: Allocate additional resources to the CML server. Workaround 2: If you're hitting the disk usage limit, check the Remove Orphaned VMs function in Cockpit. Orphaned VMs can consume a significant amount of disk space even though CML is no longer using or managing those VMs. |

| VM images uploaded via the API can be used in an image definition only if they are transmitted via a multipart/form-data request. For images uploaded any other way, the SELinux context is not set correctly. | Workaround 1: Use the Python Client Library, which handles image uploads correctly. Workaround 2: Create a multipart/form-data request when calling this API. |

| The journalctl output shows errors like this: "cockpit-tls gnutls_handshake failed: A TLS fatal alert has been received". | This log message occurs only with Chrome browsers and has no functional impact on the use of Cockpit. No workaround is needed. |
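The memory caveat in the known issues above is easier to see with numbers. The Python sketch below contrasts a check that counts only memory currently in use, as the issue describes, with a stricter check that assumes every node may grow to its configured RAM. Both functions, the node names, and the sizes are hypothetical; this illustrates the reasoning only and is not CML's scheduler.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Node:
    name: str
    used_mib: int  # memory the node is using right now
    max_mib: int   # memory the node may grow to (assumed: its configured RAM)


def fits_current_usage(running: List[Node], new: Node, capacity_mib: int) -> bool:
    # The behavior described in the known issue: only current usage is counted.
    return sum(n.used_mib for n in running) + new.used_mib <= capacity_mib


def fits_worst_case(running: List[Node], new: Node, capacity_mib: int) -> bool:
    # Stricter check: assume every node may grow to its configured maximum.
    return sum(n.max_mib for n in running) + new.max_mib <= capacity_mib


capacity = 16384  # a hypothetical 16 GiB CML server
running = [Node("r1", 2048, 6144), Node("r2", 2048, 6144), Node("r3", 2048, 6144)]
candidate = Node("r4", 2048, 6144)

print(fits_current_usage(running, candidate, capacity))  # True: 8192 <= 16384
print(fits_worst_case(running, candidate, capacity))     # False: 24576 > 16384
```

With these numbers, the fourth node is admitted because only 8 GiB is in use, but if all four nodes grow to their configured 6 GiB, they need 24 GiB on a 16 GiB server, which is the point at which nodes crash.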
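The multi-NIC issue in the known issues above follows from plain lexicographic ordering: strings are compared character by character, and "1" sorts before "9", so enp10s0 comes before enp9s0. The Python sketch below contrasts that ordering with a natural sort that compares numeric runs as integers. The natural_key helper is hypothetical and only illustrates the ordering; it is not how CML selects its management interface.

```python
import re
from typing import List, Union


def natural_key(name: str) -> List[Union[int, str]]:
    # Split "enp10s0" into ["enp", 10, "s", 0, ""] so numeric runs compare as integers.
    return [int(part) if part.isdigit() else part
            for part in re.split(r"(\d+)", name)]


interfaces = ["enp9s0", "enp10s0"]

print(sorted(interfaces))                   # ['enp10s0', 'enp9s0']
print(sorted(interfaces, key=natural_key))  # ['enp9s0', 'enp10s0']
```

The first result is the alphabetical order the issue describes, which is why enp10s0 can be picked as the management interface even when enp9s0 was intended.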