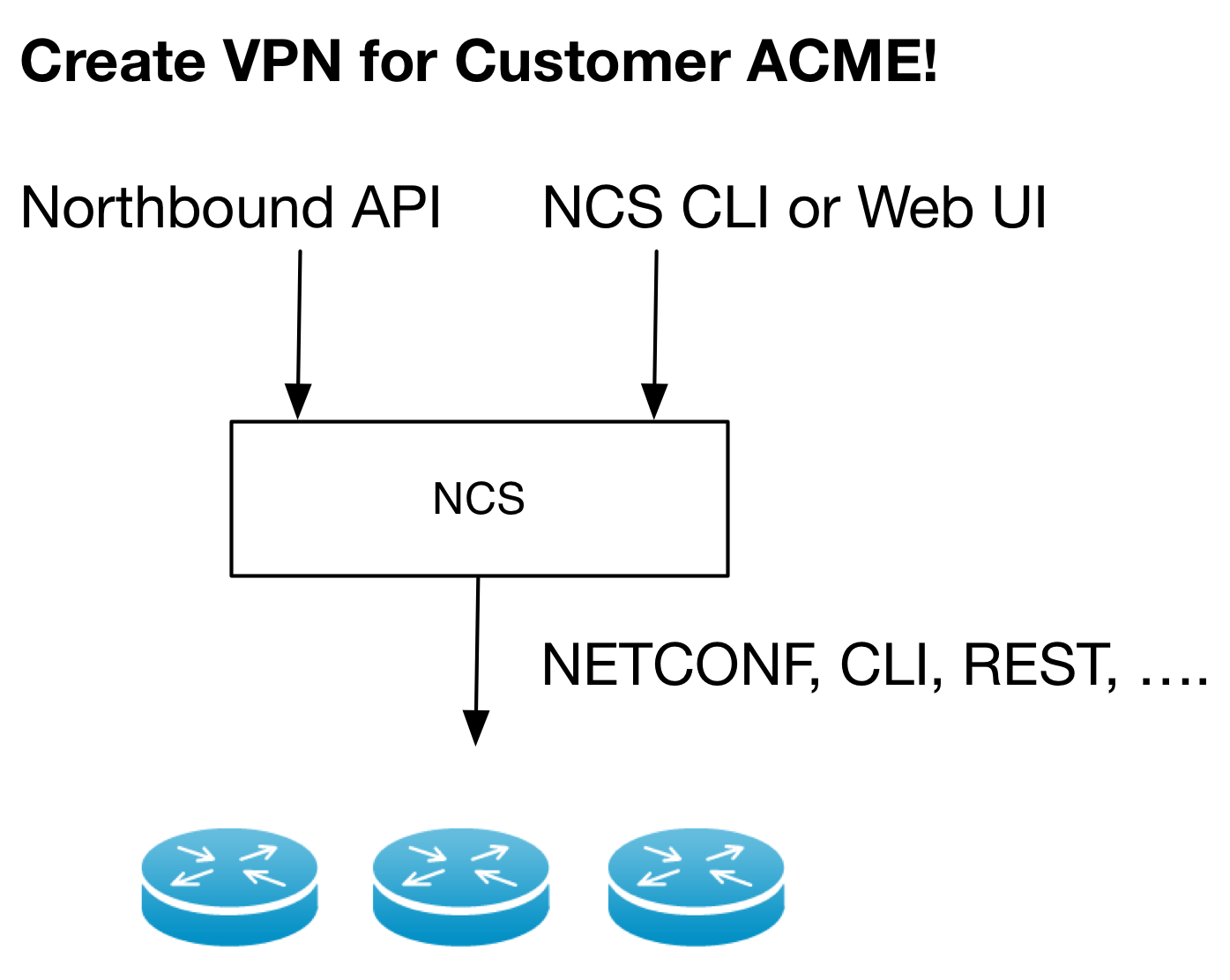

This section describes how to develop a service application. A service application maps the input parameters used to create, modify, and delete a service instance into the resulting native commands for the devices in the network. The input parameters come from a northbound system, such as a self-service portal making API calls to NSO, or from a network engineer using one of the NSO user interfaces, such as the NSO CLI.

The service application has a single task: from a given set of input parameters for a service instance modification, calculate the minimal set of device interface operations needed to achieve the desired service change.

It is very important that the service application supports any change, i.e., full create, delete, and update of any service parameter.

The following definitions are used throughout this section:

- Service type: A specific type of service, such as "L2 VPN", "L3 VPN", "VLAN", or "Firewall Rule set".
- Service instance: A specific instance of a service type, such as "ACME L3 VPN".
- Service model: The schema definition for a service type. In NSO, YANG is used as the schema language to define service types. Service models are used in different contexts and systems and therefore have slightly different meanings. In the context of NSO, a service model is a black-box specification of the attributes required to instantiate the service.

  This is different from service models in ITIL-based CMDBs or OSS inventory systems, where a service model is more of a white-box model that describes the complete structure.
- Service application: The code that implements a service, i.e., maps the parameters for a service instance to device configuration.
- Device configuration: Network devices are configured to perform network functions. Every service instance results in corresponding device configuration changes. The dominant way to represent and change device configurations in current networks is through CLI representations and command sequences. NETCONF represents the configuration as XML instance documents corresponding to the YANG schema.

Developing a service application that transforms a service request to corresponding device configurations is done differently in NSO than in other tools on the market. It is therefore important to understand the underlying fundamental concepts and how they differ from what you might assume.

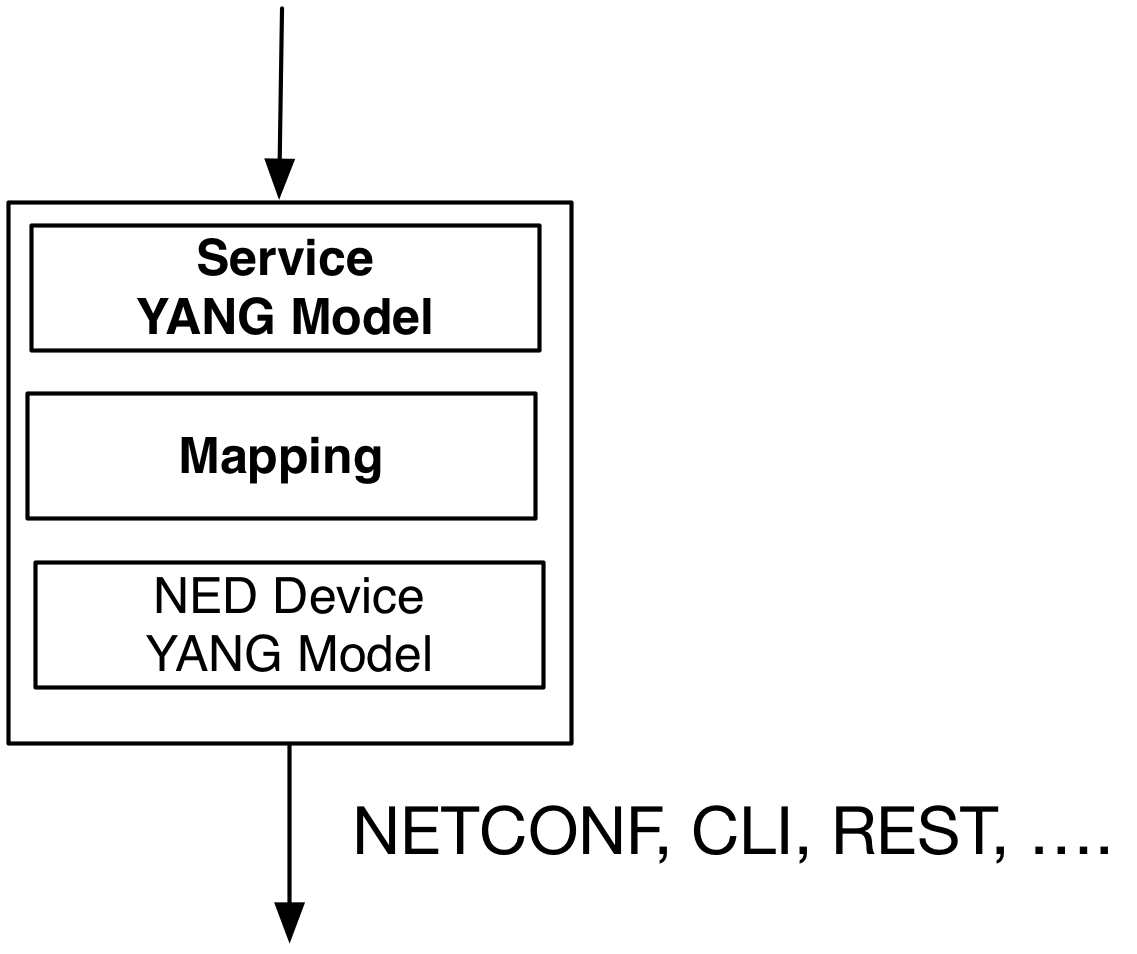

As a developer you need to express the mapping from a YANG service model to the corresponding device YANG model. This is a declarative mapping in the sense that no sequencing is defined.

Note well that, irrespective of the underlying device type and corresponding native device interface, the mapping targets a YANG device model, not, for example, the native CLI. This means that as you write the service mapping, you do not have to worry about the syntax of different devices' CLI commands or the order in which these commands are sent to the devices. This is all taken care of by the NSO device manager.

The above means that implementing a service in NSO is reduced to transforming the input data structure (described in YANG) to device data structures (also described in YANG).
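To make the idea concrete, here is a minimal Java sketch under stated assumptions: the `DeviceIf` and `VlanService` records and the path strings are hypothetical, illustrative stand-ins, not NSO APIs or real NED paths. The mapping is a pure function from service data to the desired device data; it describes what should exist, not in which order commands are sent.

```java
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;
import java.util.TreeSet;

// Hypothetical service input, loosely mirroring a simple VLAN service model.
record DeviceIf(String device, String interfaceType, String interfaceName) {}
record VlanService(String name, int vlanId, List<DeviceIf> endpoints) {}

final class VlanMapping {
    private VlanMapping() {}

    // Declarative mapping: service data in, desired device data out.
    // The result holds illustrative device-model paths per device. The sorted
    // collections only make the output deterministic; no command order is implied.
    static Map<String, Set<String>> map(VlanService svc) {
        Map<String, Set<String>> desired = new TreeMap<>();
        for (DeviceIf ep : svc.endpoints()) {
            Set<String> paths = desired.computeIfAbsent(ep.device(), d -> new TreeSet<>());
            paths.add("/vlan/vlan-list{" + svc.vlanId() + "}");
            paths.add("/interface/" + ep.interfaceType() + "{" + ep.interfaceName() + "}"
                    + "/switchport/trunk/allowed/vlan/vlans{" + svc.vlanId() + "}");
        }
        return desired;
    }
}
```

Turning these paths into device commands, and ordering them, is left to the device layer, which is the job the text assigns to the NSO device manager.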

Who writes the models?

- The service model: developing the service model is part of developing the service application and is covered later in this chapter.
- The device models: every device NED comes with a corresponding device YANG model. This model has been designed by the NED developer to capture the configuration data that is supported by the device.

This means that a service application has two primary artifacts: a YANG service model and a mapping definition to the device YANG as illustrated below.

At this point, you should realize the following:

- The mapping is not defined using workflows or sequences of device commands.
- The mapping is not defined in the native device interface language.

A common problem for systems that try to automate service activation is that a "back-end" needs to be defined for every possible service instance change. Take an L3 VPN as an example: during the service life cycle, a northbound system or a network engineer may want to:

- Create the VPN
- Add a leg to the VPN
- Remove a leg from the VPN
- Modify the bandwidth of a VPN leg
- Change the interface of a VPN leg
- ...
- Delete the VPN

The possible run-time changes for an existing service instance are numerous. If a developer has to define a back-end for every possible change, like a script or a workflow, the task is daunting, error-prone, and never-ending.

NSO reduces this problem to a single data-mapping definition for the "create" scenario. At run time, NSO renders the minimum change for any possible change, such as all the ones listed above. This is managed by the FASTMAP algorithm, explained later in this section.
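The core observation can be sketched in a few lines of Java. This is a simplified model, not NSO's implementation: configuration is a flat set of hypothetical `device:path` strings, and `create` stands in for a service's create mapping. Roughly, FASTMAP undoes what the previous run of create produced and re-runs create within the same transaction; in this simplified setting, the net effect is the difference between the old and new desired states, which is what the sketch computes.

```java
import java.util.List;
import java.util.Set;
import java.util.TreeSet;

final class FastMapSketch {
    private FastMapSketch() {}

    // The only mapping the developer writes: service input -> desired config.
    // Entries are hypothetical "device:path" strings.
    static Set<String> create(int vlanId, List<String> devices) {
        Set<String> out = new TreeSet<>();
        for (String dev : devices) {
            out.add(dev + ":/vlan/vlan-list{" + vlanId + "}");
        }
        return out;
    }

    record Change(Set<String> delete, Set<String> add) {}

    // Net change between two runs of create: delete what is no longer
    // produced, add what is newly produced, leave shared entries untouched.
    static Change minimalChange(Set<String> before, Set<String> after) {
        Set<String> delete = new TreeSet<>(before);
        delete.removeAll(after);
        Set<String> add = new TreeSet<>(after);
        add.removeAll(before);
        return new Change(delete, add);
    }
}
```

Changing the VLAN ID, adding a device, and deleting the whole service (`minimalChange(before, Set.of())`) all fall out of the same create function, which is why no per-change back-end is needed.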

Another challenge in traditional systems is that a lot of code goes into managing error scenarios. The NSO built-in transaction manager takes that away from the developer of the Service Application.

Since NSO automatically renders the northbound APIs and database schema from the YANG models, NSO enables a DevOps way of working with service models. A new service model can be defined as part of a package and loaded into NSO. An existing service model can be modified and the package upgraded. All northbound APIs and User Interfaces are automatically re-rendered to cater for the new models or updated models.

The YANG Service Model specifies the input parameters to NSO. For a specific service model think of the parameters that a northbound system sends to NSO or the parameters that a network engineer needs to enter in the NSO CLI.

This model can be iterated without having any mapping defined. Write the YANG model, reload the service package in NSO and try the model with network engineers or northbound systems.

The result of this exercise for an L3 VPN service might be:

- VPN name
- AS number
- End-point CE device and interface
- End-point PE device and interface

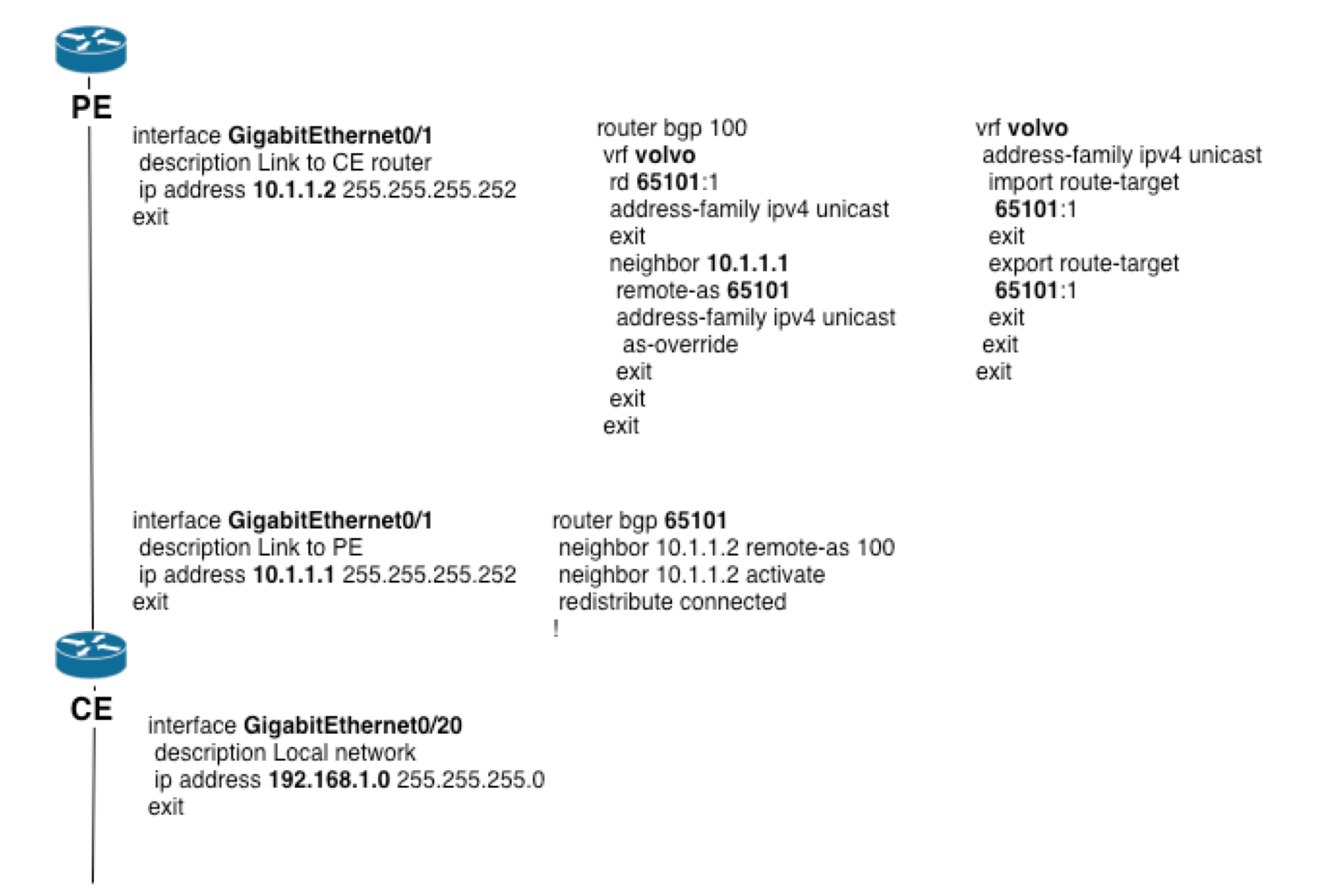

The most straightforward way of finding the mapping is to create one example of the service instance manually on the devices. Either create it using the native device interface and then synchronize the configuration into NSO, or use the NSO CLI to create the device configuration.

Based on this example device configuration for a service instance, note which parts of the device configuration are variables resulting from the service configuration.

The figure below illustrates an example VPN configuration. Configuration items in bold are variables that are mapped from the service input.

Now look at the attributes of the service model and make sure you have a clear picture of how the values are mapped into the corresponding device configuration.
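Once the variable parts are identified, the example configuration becomes a template by replacing each hard-coded value with a placeholder. The Java sketch below uses a deliberately simplified, hypothetical syntax: `{name}` looked up in a flat map. Real NSO templates evaluate XPath expressions such as `{/vlan-id}` against the service instance, which this code does not attempt.

```java
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

final class TemplateFill {
    private TemplateFill() {}

    // Matches simplified placeholders like {vlan-id} (letters, digits, '_' and '-').
    private static final Pattern VAR = Pattern.compile("\\{([A-Za-z0-9_-]+)\\}");

    // Replace every placeholder with its value; fail loudly on unknown names
    // so a typo in the template is not silently rendered as literal text.
    static String fill(String template, Map<String, String> vars) {
        Matcher m = VAR.matcher(template);
        StringBuilder out = new StringBuilder();
        while (m.find()) {
            String value = vars.get(m.group(1));
            if (value == null) {
                throw new IllegalArgumentException("unbound variable: " + m.group(1));
            }
            m.appendReplacement(out, Matcher.quoteReplacement(value));
        }
        m.appendTail(out);
        return out.toString();
    }
}
```

For example, filling `<id>{vlan-id}</id>` with `vlan-id = 1234` yields `<id>1234</id>`: the same example configuration, now driven by a service attribute.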

During the above exercise, you might find that the input parameters for a service are not sufficient to render the device configuration.

Examples:

- Assume the northbound system only provides the CE device and wants NSO to pick the right PE.
- Assume the northbound system wants NSO to pick an IP address and does not pass that as an input parameter.

This is part of the service design iteration. If the input parameters are not sufficient to define the corresponding device configuration you either add more attributes to the service model so that the device configuration data can be defined as a pure data model mapping or you assume the mapping can fetch the missing pieces.

In the latter case, there are several alternatives, all of which are explained in detail later. Typical patterns are listed below:

- If the mapping needs pre-configured data, you can define a YANG data model for this data. For example, in the VPN case, NSO could have a list of CE-PE links loaded, and the mapping then uses this list to find the PE for a CE. The PE therefore does not need to be part of the service model.
- If the mapping needs to request data from an external system, for example to query an IP address manager for the IP addresses, you can use the Reactive FASTMAP pattern.
- Use NSO to handle allocation of resources such as VLAN IDs. A package can be defined to manage VLAN pools within NSO, and the mapping then requests a new VLAN from the VLAN pool, so the VLAN does not need to be passed as input. The Reactive FASTMAP pattern is used in this case as well.
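The first and third patterns can be pictured with a small in-memory Java sketch. Everything here is hypothetical: `MappingInputs` is not the NSO resource-manager API, and a real VLAN pool would live in NSO rather than in a Java object. The point it illustrates is that the mapping may consult pre-configured data, and that an allocation must come out the same every time the mapping runs for the same service instance, because FASTMAP re-runs the create mapping on each change.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;
import java.util.TreeSet;

final class MappingInputs {
    // Hypothetical pre-configured CE -> PE links, loaded ahead of time.
    private final Map<String, String> ceToPe;
    // Hypothetical VLAN pool: free IDs and current allocations per service instance.
    private final TreeSet<Integer> free = new TreeSet<>();
    private final Map<String, Integer> allocated = new HashMap<>();

    MappingInputs(Map<String, String> ceToPe, int lowVlan, int highVlan) {
        this.ceToPe = Map.copyOf(ceToPe);
        for (int id = lowVlan; id <= highVlan; id++) {
            free.add(id);
        }
    }

    // Pattern 1: derive the PE from pre-configured data instead of service input.
    Optional<String> peFor(String ce) {
        return Optional.ofNullable(ceToPe.get(ce));
    }

    // Pattern 3: allocate a VLAN ID. Idempotent per service instance, so
    // running the mapping again for the same service returns the same ID.
    int allocateVlan(String serviceName) {
        Integer existing = allocated.get(serviceName);
        if (existing != null) {
            return existing;
        }
        Integer id = free.pollFirst();
        if (id == null) {
            throw new IllegalStateException("VLAN pool exhausted");
        }
        allocated.put(serviceName, id);
        return id;
    }

    // Return the ID to the pool when the service instance is deleted.
    void release(String serviceName) {
        Integer id = allocated.remove(serviceName);
        if (id != null) {
            free.add(id);
        }
    }
}
```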

This section gives an overview of the different design patterns to define the mapping. NSO provides three different ways to express the YANG service model to YANG device model mapping:

- Service templates: If the mapping is a pure data model mapping without any complex calculations, algorithms, external call-outs, resource management, or Reactive FASTMAP patterns, the mapping can be defined as service templates. Service templates require no programming skills and are derived from example device configurations. They are therefore well suited for network engineers. See `examples.ncs/service-provider/simple-mpls-vpn` for an example.
- Java and configuration templates: This is the most common technique for real-life deployments. A thin layer of Java implements the device-type-independent algorithms and passes variables to templates that map them into device-specific configurations across vendors. The templates are often defined "per feature": the Java code calculates a number of device-independent variables and then applies templates with these variables as inputs, and the templates map them to the various device types. All device specifics are handled in the templates, which keeps the Java code clean. See `examples.ncs/service-provider/mpls-vpn` for an example.
- Java only: There are no real benefits of this approach compared to the combination of Java and templates above; the choice depends more on the skills of the developer, and programmers with less networking experience might prefer it. Abstracting away different device vendors is often more cumbersome than in the Java and templates approach. See `examples.ncs/datacenter/datacenter` for an example.
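The division of labor in the Java and configuration templates approach can be sketched as follows, under loud assumptions: the "templates" are plain Java functions standing in for XML templates, the device-type keys are hypothetical, and the `acme-os` paths belong to an invented device model used only to show a second vendor. The Java layer computes device-independent variables once and never branches on vendor; each template owns the device specifics.

```java
import java.util.List;
import java.util.Map;
import java.util.function.Function;

// Device-independent variables computed once by the (hypothetical) Java layer.
record VlanVars(int vlanId, String ifType, String ifName) {}

final class FeatureTemplates {
    private FeatureTemplates() {}

    // One "per feature" template per device type. Paths are illustrative only;
    // "acme-os" is an invented device model.
    private static final Map<String, Function<VlanVars, List<String>>> TEMPLATES = Map.of(
        "cisco-ios", v -> List.of(
            "/ios:vlan/vlan-list{" + v.vlanId() + "}",
            "/ios:interface/" + v.ifType() + "{" + v.ifName() + "}"
                + "/switchport/trunk/allowed/vlan/vlans{" + v.vlanId() + "}"),
        "acme-os", v -> List.of(
            "/acme:bridge/vlan{" + v.vlanId() + "}",
            "/acme:port{" + v.ifName() + "}/tagged-vlan{" + v.vlanId() + "}"));

    // Apply the template registered for a device type to the shared variables.
    static List<String> apply(String deviceType, VlanVars vars) {
        Function<VlanVars, List<String>> template = TEMPLATES.get(deviceType);
        if (template == null) {
            throw new IllegalArgumentException("no template for device type " + deviceType);
        }
        return template.apply(vars);
    }
}
```

With this split, supporting another vendor means adding a template, while the code that computes `VlanVars` stays unchanged.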

The purpose of this section is to outline the overall steps in NSO to create a service application. The following sections exemplify these steps for the different mapping strategies. The `ncs-make-package` command (see NSO 5.7 Manual Pages) is used in these examples to create a skeleton service package.

All of the steps below assume you have an NSO local installation (see NSO Local Install in NSO Installation Guide) and have created an NSO instance with `ncs-setup` (see NSO 5.7 Manual Pages). This command creates the NSO instance in a directory, called the NSO runtime directory, which is specified on the command line:

```
$ ncs-setup --dest ./ncs-run
```

In this example, the NSO runtime directory is `./ncs-run`.

1. Generate a service package in the packages directory in the runtime directory. In this example, the package name is `vlan`, and it is a service package with Java code and templates:

   ```
   $ cd ncs-run/packages
   $ ncs-make-package --service-skeleton TYPE PACKAGE-NAME
   ```

2. Edit the skeleton YANG service model in the generated package. The YANG file resides in `PACKAGE-NAME/src/yang`.
3. Build the service model:

   ```
   $ cd PACKAGE-NAME/src
   $ make
   ```

4. Try the service model in the NSO CLI. To have NSO load the new package, including the service model, do:

   ```
   admin@ncs# packages reload
   ```

5. Iterate the above steps from Step 2 until you have a service model you are happy with.
6. If the service does not have any templates, continue with Step 11. Otherwise, create an example device configuration, either directly on the devices or by using the NSO CLI. This can be done using either netsim or real devices. If the configuration was created directly on the devices, synchronize the configuration back into NSO:

   ```
   admin@ncs# devices sync-from
   ```

7. Save the example device configuration as an XML file, which is the format of templates:

   ```
   admin@ncs# show full-configuration devices device config ... | display xml | file save mytemplate.xml
   ```

8. Move the XML file to the `templates` folder of the package.
9. Replace hard-coded values in the XML template with variables referring to the service model or variables passed from the Java code. This is explained in detail later in this section.
10. If this template is a template without any Java code, make sure the servicepoint name in the YANG service model has a corresponding servicepoint in the XML file. Again, this is explained in detail later.
11. If a Java mapping layer is included, modify the Java code in the `src/java` directory. Build the Java code:

    ```
    $ cd PACKAGE-NAME/src
    $ make
    ```

12. Reload the packages; this reloads both the data models and the Java code:

    ```
    admin@ncs# packages reload
    ```

13. Try the mapping by creating and modifying service instances in the CLI. Validate the changes with:

    ```
    admin@ncs(config)# commit dry-run outformat native
    ```

In this example, you will create a simple VLAN service using a mapping with service templates only (i.e., no Java code). To keep the example simple, it uses only a single device type (IOS).

To reuse an existing environment for NSO and netsim, the `examples.ncs/getting-started/using-ncs/1-simulated-cisco-ios/` example is used. Make sure you have stopped any running NSO and netsim.

1. Navigate to the example directory:

   ```
   $ cd $NCS_DIR/examples.ncs/getting-started/using-ncs/1-simulated-cisco-ios
   ```

2. Now you need to create an environment for the simulated IOS devices. This is done using the `ncs-netsim` command (see NSO 5.7 Manual Pages):

   ```
   $ ncs-netsim create-network $NCS_DIR/packages/neds/cisco-ios 3 c
   DEVICE c0 CREATED
   DEVICE c1 CREATED
   DEVICE c2 CREATED
   ```

   This command creates the simulated network in `./netsim`.

3. Next, you need an NSO instance with the simulated network:

   ```
   $ ncs-setup --netsim-dir ./netsim --dest .
   Using netsim dir ./netsim
   ```

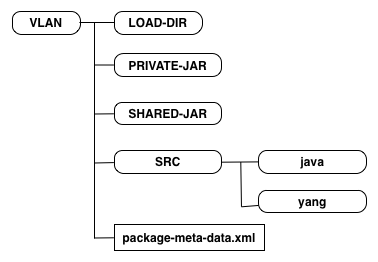

The first step is to generate a skeleton package for a service (for details on packages, see the section called "Packages" in NSO 5.7 Getting Started Guide). The package is called `vlan`:

```
$ cd packages
$ ncs-make-package --service-skeleton template vlan
```

This results in the following directory structure:

```
vlan/
    load-dir/
    package-meta-data.xml
    src/
    templates/
```

For now, let's focus on the `vlan/src/yang/vlan.yang` file:

```yang
module vlan {
  namespace "http://com/example/vlan";
  prefix vlan;

  import ietf-inet-types {
    prefix inet;
  }
  import tailf-ncs {
    prefix ncs;
  }

  augment /ncs:services {
    list vlan {
      key name;

      uses ncs:service-data;
      ncs:servicepoint "vlan";

      leaf name {
        type string;
      }

      // may replace this with other ways of referring to the devices.
      leaf-list device {
        type leafref {
          path "/ncs:devices/ncs:device/ncs:name";
        }
      }

      // replace with your own stuff here
      leaf dummy {
        type inet:ipv4-address;
      }
    }
  }
}
```

If this is your first exposure to YANG, you can see that the modeling language is very straightforward and easy to understand. See RFC 6020 for more details and examples for YANG.

The concepts you should understand in the above generated skeleton are:

- The vlan service list is augmented into the services tree in NSO. This specifies the path to reach VLANs in the CLI, REST, etc. There are no requirements on where the service is added in NSO; if you want VLANs to be at the top level, just remove the augment statement.
- The two lines `uses ncs:service-data` and `ncs:servicepoint "vlan"` tell NSO that this is a service.

The next step is to modify the skeleton service YANG model and add the real parameters.

So, if a user wants to create a new VLAN in the network, what should the parameters be? A very simple service model could look like the following (modify the `src/yang/vlan.yang` file):

```yang
augment /ncs:services {
  list vlan {
    key name;

    uses ncs:service-data;
    ncs:servicepoint "vlan";

    leaf name {
      type string;
    }

    leaf vlan-id {
      type uint32 {
        range "1..4096";
      }
    }

    list device-if {
      key "device-name";
      leaf device-name {
        type leafref {
          path "/ncs:devices/ncs:device/ncs:name";
        }
      }
      leaf interface-type {
        type enumeration {
          enum FastEthernet;
          enum GigabitEthernet;
          enum TenGigabitEthernet;
        }
      }
      leaf interface {
        type string;
      }
    }
  }
}
```

This simple VLAN service model says:

- Each VLAN must have a unique name, for example "net-1".
- The VLAN has an ID from 1 to 4096.
- The VLAN is attached to a list of devices and interfaces. To keep this example as simple as possible, the interface reference is selected by picking the type and then entering the name as a plain string.

The next step is to build the data model:

```
$ cd $NCS_DIR/examples.ncs/getting-started/using-ncs/1-simulated-cisco-ios/packages/vlan/src
$ make
.../ncsc `ls vlan-ann.yang > /dev/null 2>&1 && echo "-a vlan-ann.yang"` \
    -c -o ../load-dir/vlan.fxs yang/vlan.yang
```

A nice property of NSO is that already at this point you can load the service model into NSO and check that it works well in the CLI, etc. Nothing will happen to the devices since the mapping is not defined yet. This is normally the way to iterate on a model: load it into NSO, test the CLI with the network engineers, make changes, reload it into NSO, and so on.

Go to the root directory of the simulated IOS example:

```
$ cd $NCS_DIR/examples.ncs/getting-started/using-ncs/1-simulated-cisco-ios
```

Start netsim and NSO:

```
$ ncs-netsim start
DEVICE c0 OK STARTED
DEVICE c1 OK STARTED
DEVICE c2 OK STARTED
$ ncs --with-package-reload
```

When NSO was started above, you gave NSO a parameter to reload all packages so that the newly added vlan package is included. Without this parameter, NSO starts with the same packages as last time. Packages can also be reloaded without stopping and starting NSO.

Start the NSO CLI:

```
$ ncs_cli -C -u admin
```

Since this is the first time NSO is started with some devices, you need to make sure NSO synchronizes its database with the devices:

```
admin@ncs# devices sync-from
sync-result {
    device c0
    result true
}
sync-result {
    device c1
    result true
}
sync-result {
    device c2
    result true
}
```

At this point, we have a service model for VLANs but no mapping of VLANs to device configurations. This is fine; you can try the service model and see if it makes sense. Create a VLAN service:

```
admin@ncs# config
Entering configuration mode terminal
admin@ncs(config)# services vlan net-0 vlan-id 1234 \
    device-if c0 interface-type FastEthernet interface 1/0
admin@ncs(config-device-if-c0)# top
admin@ncs(config)# show configuration
services vlan net-0
 vlan-id 1234
 device-if c0
  interface-type FastEthernet
  interface      1/0
 !
!
admin@ncs(config)# services vlan net-0 vlan-id 1234 \
    device-if c1 interface-type FastEthernet interface 1/0
admin@ncs(config-device-if-c1)# top
admin@ncs(config)# show configuration
services vlan net-0
 vlan-id 1234
 device-if c0
  interface-type FastEthernet
  interface      1/0
 !
 device-if c1
  interface-type FastEthernet
  interface      1/0
 !
!
admin@ncs(config)# commit dry-run outformat native
admin@ncs(config)# commit
Commit complete.
```

Committing service changes at this point has no effect on the devices, since there is no mapping defined. This is why `commit dry-run outformat native` shows no output. The service instance data is just stored in the NSO database.

Note that you get tab completion on the device names since they are references (leafrefs) to device names in CDB. You also get tab completion for interface types, since the types are enumerated in the model. However, the interface name is just a string, and you have to type the correct interface name yourself. For service models with only one device type, as in this simple example, a reference to the interface name in the IOS model could be used. However, that makes the service model dependent on the underlying device types: if another device type is added, the service model needs to be updated, which is usually not desired. There are techniques to get tab completion even when the data type is a string, but they are omitted here for simplicity.

Make sure you delete the vlan service instance before moving on with the example:

```
admin@ncs(config)# no services vlan
admin@ncs(config)# commit
Commit complete.
```

Now it is time to define the mapping from service configuration to actual device configuration. The first step is to understand the actual device configuration. In this example, this is done by manually configuring one VLAN on a device. This concrete device configuration is a starting point for the mapping; it shows the expected result of applying the service.

```
admin@ncs(config)# devices device c0 config ios:vlan 1234
admin@ncs(config-vlan)# top
admin@ncs(config)# devices device c0 config ios:interface \
    FastEthernet 10/10 switchport trunk allowed vlan 1234
admin@ncs(config-if)# top
admin@ncs(config)# show configuration
devices device c0
 config
  ios:vlan 1234
  !
  ios:interface FastEthernet10/10
   switchport trunk allowed vlan 1234
  exit
 !
!
```

The concrete configuration above has the interface and VLAN hard-wired. This is what we will now turn into a template. It is always recommended to start like this and create a concrete representation of the configuration the template is meant to create. Templates are device configurations in which parts of the configuration are represented as variables. These kinds of templates are represented as XML files. Display the device configuration as XML:

```
admin@ncs(config)# show full-configuration devices device c0 \
    config ios:vlan | display xml
<config xmlns="http://tail-f.com/ns/config/1.0">
  <devices xmlns="http://tail-f.com/ns/ncs">
    <device>
      <name>c0</name>
      <config>
        <vlan xmlns="urn:ios">
          <vlan-list>
            <id>1234</id>
          </vlan-list>
        </vlan>
      </config>
    </device>
  </devices>
</config>
admin@ncs(config)# show full-configuration devices device c0 \
    config ios:interface FastEthernet 10/10 | display xml
<config xmlns="http://tail-f.com/ns/config/1.0">
  <devices xmlns="http://tail-f.com/ns/ncs">
    <device>
      <name>c0</name>
      <config>
        <interface xmlns="urn:ios">
          <FastEthernet>
            <name>10/10</name>
            <switchport>
              <trunk>
                <allowed>
                  <vlan>
                    <vlans>1234</vlans>
                  </vlan>
                </allowed>
              </trunk>
            </switchport>
          </FastEthernet>
        </interface>
      </config>
    </device>
  </devices>
</config>
```

Now we shall build that template. When the package was created, a skeleton XML file was created in `packages/vlan/templates/vlan.xml`:

```xml
<config-template xmlns="http://tail-f.com/ns/config/1.0"
                 servicepoint="vlan">
  <devices xmlns="http://tail-f.com/ns/ncs">
    <device>
      <!--
          Select the devices from some data structure in the service
          model. In this skeleton the devices are specified in a leaf-list.
          Select all devices in that leaf-list:
      -->
      <name>{/device}</name>
      <config>
        <!--
            Add device-specific parameters here.
            In this skeleton the service has a leaf "dummy"; use that
            to set something on the device e.g.:
            <ip-address-on-device>{/dummy}</ip-address-on-device>
        -->
      </config>
    </device>
  </devices>
</config-template>
```

We need to specify the right path to the devices. In our case, the devices are identified by `/device-if/device-name` (see the YANG service model). For each of those devices, we need to add the VLAN and change the specified interface configuration. Copy the XML config from the CLI and replace the hard-coded values with variables:

```xml
<config-template xmlns="http://tail-f.com/ns/config/1.0"
                 servicepoint="vlan">
  <devices xmlns="http://tail-f.com/ns/ncs">
    <device>
      <name>{/device-if/device-name}</name>
      <config>
        <vlan xmlns="urn:ios">
          <vlan-list tags="merge">
            <id>{../vlan-id}</id>
          </vlan-list>
        </vlan>
        <interface xmlns="urn:ios">
          <?if {interface-type='FastEthernet'}?>
            <FastEthernet tags="nocreate">
              <name>{interface}</name>
              <switchport>
                <trunk>
                  <allowed>
                    <vlan tags="merge">
                      <vlans>{../vlan-id}</vlans>
                    </vlan>
                  </allowed>
                </trunk>
              </switchport>
            </FastEthernet>
          <?end?>
          <?if {interface-type='GigabitEthernet'}?>
            <GigabitEthernet tags="nocreate">
              <name>{interface}</name>
              <switchport>
                <trunk>
                  <allowed>
                    <vlan tags="merge">
                      <vlans>{../vlan-id}</vlans>
                    </vlan>
                  </allowed>
                </trunk>
              </switchport>
            </GigabitEthernet>
          <?end?>
          <?if {interface-type='TenGigabitEthernet'}?>
            <TenGigabitEthernet tags="nocreate">
              <name>{interface}</name>
              <switchport>
                <trunk>
                  <allowed>
                    <vlan tags="merge">
                      <vlans>{../vlan-id}</vlans>
                    </vlan>
                  </allowed>
                </trunk>
              </switchport>
            </TenGigabitEthernet>
          <?end?>
        </interface>
      </config>
    </device>
  </devices>
</config-template>
```

Walking through the template gives a better idea of how it works. For every `/device-if/device-name` from the service instance, do the following:

- Add the VLAN to the vlan-list. The tag "merge" tells the template to merge the data into an existing list (default is to replace).
- For the interface within that device, add the VLAN to the interface's trunk allowed VLANs. The tag "nocreate" tells the template not to create the named interface if it does not exist.

Tip

While experimenting with the template it can be helpful to remove the nocreate tag. In that way you will always create configuration from the template even if the interface does not exist.
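The effect of the two tags can be modeled on a toy representation of a device: a map from interface name to its trunk allowed VLANs. This Java sketch is a simplified model of the behavior described in the walkthrough above, not NSO's template engine; the `applyAllowedVlans` helper and its boolean flags are hypothetical.

```java
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

final class TemplateTags {
    private TemplateTags() {}

    // device: interface name -> trunk allowed VLAN IDs (toy model).
    // merge=true keeps existing entries; merge=false replaces them.
    // nocreate=true skips interfaces that do not already exist.
    static void applyAllowedVlans(Map<String, Set<Integer>> device, String ifName,
                                  Set<Integer> vlans, boolean merge, boolean nocreate) {
        Set<Integer> current = device.get(ifName);
        if (current == null) {
            if (nocreate) {
                return; // leave a missing interface untouched
            }
            current = new TreeSet<>();
            device.put(ifName, current);
        }
        if (!merge) {
            current.clear(); // replace: discard what was there
        }
        current.addAll(vlans);
    }
}
```

Removing `nocreate`, as the tip suggests, corresponds to passing `false`: the entry for the interface is then created even when the interface does not exist.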

It is important to understand that every path in the template above refers to paths from the service model in `vlan.yang`. For details on the template syntax, see the section called "Service Templates".

Throw away the uncommitted changes to the device, and request NSO to reload the packages:

```
admin@ncs(config)# exit no-confirm
admin@ncs# packages reload
reload-result {
    package cisco-ios
    result true
}
reload-result {
    package vlan
    result true
}
```

Previously, we started NSO with a package reload option; the above shows how to do the same without stopping and starting NSO.

We can now create services that will make things happen in the network. Create a VLAN service:

```
admin@ncs# config
Entering configuration mode terminal
admin@ncs(config)# services vlan net-0 vlan-id 1234 device-if c0 \
    interface-type FastEthernet interface 1/0
admin@ncs(config-device-if-c0)# top
admin@ncs(config)# services vlan net-0 device-if c1 \
    interface-type FastEthernet interface 1/0
admin@ncs(config-device-if-c1)# top
admin@ncs(config)# show configuration
services vlan net-0
 vlan-id 1234
 device-if c0
  interface-type FastEthernet
  interface      1/0
 !
 device-if c1
  interface-type FastEthernet
  interface      1/0
 !
!
admin@ncs(config)# commit dry-run outformat native
native {
    device {
        name c0
        data vlan 1234
             !
             interface FastEthernet1/0
              switchport trunk allowed vlan 1234
             exit
    }
    device {
        name c1
        data vlan 1234
             !
             interface FastEthernet1/0
              switchport trunk allowed vlan 1234
             exit
    }
}
admin@ncs(config)# commit | details
...
Commit complete.
```

Note that the commit command stored the service data in NSO and, at the same time, pushed the changes to the two devices affected by the service.

The VLAN service instance can now be changed:

```
admin@ncs(config)# services vlan net-0 vlan-id 1222
admin@ncs(config-vlan-net-0)# top
admin@ncs(config)# show configuration
services vlan net-0
 vlan-id 1222
!
admin@ncs(config)# commit dry-run outformat native
native {
    device {
        name c0
        data no vlan 1234
             vlan 1222
             !
             interface FastEthernet1/0
              switchport trunk allowed vlan 1222
             exit
    }
    device {
        name c1
        data no vlan 1234
             vlan 1222
             !
             interface FastEthernet1/0
              switchport trunk allowed vlan 1222
             exit
    }
}
admin@ncs(config)# commit
Commit complete.
```

It is important to understand what happens above. When the VLAN ID is changed, NSO is able to calculate the minimal required changes to the configuration. The same holds true for changing elements in the configuration, or even parameters of those elements. In this way, NSO does not need any explicit mapping for a VLAN change or deletion. NSO does not simply overwrite the old configuration with the new one. Adding an interface to the same service works the same way:

```
admin@ncs(config)# services vlan net-0 device-if c2 \
    interface-type FastEthernet interface 1/0
admin@ncs(config-device-if-c2)# top
admin@ncs(config)# commit dry-run outformat native
native {
    device {
        name c2
        data vlan 1222
             !
             interface FastEthernet1/0
              switchport trunk allowed vlan 1222
             exit
    }
}
admin@ncs(config)# commit
Commit complete.
```

To clean up the configuration on the devices, run the delete command as shown below:

```
admin@ncs(config)# no services vlan net-0
admin@ncs(config)# commit dry-run outformat native
native {
    device {
        name c0
        data no vlan 1222
             interface FastEthernet1/0
              no switchport trunk allowed vlan 1222
             exit
    }
    device {
        name c1
        data no vlan 1222
             interface FastEthernet1/0
              no switchport trunk allowed vlan 1222
             exit
    }
    device {
        name c2
        data no vlan 1222
             interface FastEthernet1/0
              no switchport trunk allowed vlan 1222
             exit
    }
}
admin@ncs(config)# commit
Commit complete.
```
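Deletion needs no mapping of its own because the effect of create can be undone. The Java sketch below illustrates that idea on a flat set of hypothetical configuration entries: applying create records only the entries it actually added (a reverse diff), and deleting the service removes exactly those, leaving pre-existing configuration such as the interface itself in place. It is a simplified, hypothetical model; for instance, it ignores the reference counting NSO uses when several services share the same configuration.

```java
import java.util.LinkedHashSet;
import java.util.Set;

final class ReverseDiff {
    private ReverseDiff() {}

    // Apply the desired entries to the device and return the reverse diff:
    // only entries that did not exist before, since only those were created here.
    static Set<String> create(Set<String> device, Set<String> desired) {
        Set<String> createdHere = new LinkedHashSet<>();
        for (String entry : desired) {
            if (device.add(entry)) {
                createdHere.add(entry);
            }
        }
        return createdHere;
    }

    // Delete the service by undoing exactly what its create added.
    static void delete(Set<String> device, Set<String> reverseDiff) {
        device.removeAll(reverseDiff);
    }
}
```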

To make the VLAN service package complete, edit `vlan/package-meta-data.xml` to reflect the purpose of the service model.

This example showed how to use template-based mapping. NSO also allows for programmatic mapping, as well as a combination of the two approaches. The combination is very flexible when some logic needs to be attached to service provisioning that is otherwise expressed as templates: the logic computes the values and applies device-agnostic templates.

This section will illustrate how to implement a simple VLAN service in Java. The end-result will be the same as shown previously using templates but this time implemented in Java instead.

Note well that the examples in this section are extremely simplified from a networking perspective in order to illustrate the concepts.

We will first look at the following preparatory steps:

- Prepare a simulated environment of Cisco IOS devices: in this example, we start from scratch to illustrate the complete development process. We will not reuse any existing NSO examples.
- Generate a template service skeleton package: use NSO tools to generate a Java-based service skeleton package.
- Write and test the VLAN service model.
- Analyze the VLAN service mapping to IOS configuration.

The above steps are no different from defining services using templates. The next step is to start working with the Java environment:

- Configuring start and stop of the Java VM.
- A first look at the service Java code: an introduction to service mapping in Java.
- Developing by tailing log files.
- Developing using Eclipse.

We will start by setting up a run-time environment that includes simulated Cisco IOS devices and configuration data for NSO. Make sure you have sourced the `ncsrc` file. Create a directory somewhere, for example:

```
$ mkdir ~/vlan-service
$ cd ~/vlan-service
```

Now let's create a simulated environment with 3 IOS devices and an NSO instance that is ready to run with this simulated network:

```
$ ncs-netsim create-network $NCS_DIR/packages/neds/cisco-ios 3 c
$ ncs-setup --netsim-dir ./netsim/ --dest ./
```

Start the simulator and NSO:

```
$ ncs-netsim start
DEVICE c0 OK STARTED
DEVICE c1 OK STARTED
DEVICE c2 OK STARTED
$ ncs
```

Use the Cisco CLI towards one of the devices:

```
$ ncs-netsim cli-i c0
admin connected from 127.0.0.1 using console on ncs
c0> enable
c0# configure
Enter configuration commands, one per line. End with CNTL/Z.
c0(config)# show full-configuration
no service pad
no ip domain-lookup
no ip http server
no ip http secure-server
ip routing
ip source-route
ip vrf my-forward
 bgp next-hop Loopback 1
!
...
```

Use the NSO CLI to get the configuration:

```
$ ncs_cli -C -u admin
admin connected from 127.0.0.1 using console on ncs
admin@ncs# devices sync-from
sync-result {
    device c0
    result true
}
sync-result {
    device c1
    result true
}
sync-result {
    device c2
    result true
}
admin@ncs# config
Entering configuration mode terminal
admin@ncs(config)# show full-configuration devices device c0 config
devices device c0
 config
  no ios:service pad
  ios:ip vrf my-forward
   bgp next-hop Loopback 1
  !
  ios:ip community-list 1 permit
  ios:ip community-list 2 deny
  ios:ip community-list standard s permit
  no ios:ip domain-lookup
  no ios:ip http server
  no ios:ip http secure-server
  ios:ip routing
...
```

Finally, set VLAN information manually on a device to prepare for the mapping later:

```
admin@ncs(config)# devices device c0 config ios:vlan 1234
admin@ncs(config)# devices device c0 config ios:interface FastEthernet 1/0 switchport mode trunk
admin@ncs(config-if)# switchport trunk allowed vlan 1234
admin@ncs(config-if)# top
admin@ncs(config)# show configuration
devices device c0
 config
  ios:vlan 1234
  !
  ios:interface FastEthernet1/0
   switchport mode trunk
   switchport trunk allowed vlan 1234
  exit
 !
!
admin@ncs(config)# commit
```

In the run-time directory you created:

```
$ ls -F1
README.ncs
README.netsim
logs/
ncs-cdb/
ncs.conf
netsim/
packages/
scripts/
state/
```

Note the packages directory and cd to it:

```
$ cd packages
$ ls -l
total 8
cisco-ios -> .../packages/neds/cisco-ios
```

Currently there is only one package, the Cisco IOS NED. We will now create a new package that will contain the VLAN service.

```
$ ncs-make-package --service-skeleton java vlan
$ ls
cisco-ios  vlan
```

This creates a package with the following structure:

During the rest of this section we will work with the vlan/src/yang/vlan.yang and vlan/src/java/src/com/example/vlan/vlanRFS.java files.

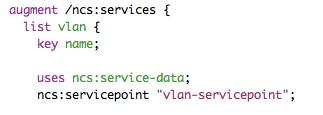

Edit vlan/src/yang/vlan.yang as follows:

```yang
augment /ncs:services {
  list vlan {
    key name;

    uses ncs:service-data;
    ncs:servicepoint "vlan-servicepoint";

    leaf name {
      type string;
    }

    leaf vlan-id {
      type uint32 {
        range "1..4096";
      }
    }

    list device-if {
      key "device-name";
      leaf device-name {
        type leafref {
          path "/ncs:devices/ncs:device/ncs:name";
        }
      }
      leaf interface {
        type string;
      }
    }
  }
}
```

This simple VLAN service model says:

- We give a VLAN a name, for example net-1.
- The VLAN has an ID from 1 to 4096.
- The VLAN is attached to a list of devices and interfaces. To keep this example as simple as possible, the interface name is just a string. A more correct and useful model would make it a reference to an interface on the device, but for now it is better to keep the example simple.

Make sure you keep the lines generated by ncs-make-package:

```
uses ncs:service-data;
ncs:servicepoint "vlan-servicepoint";
```

The first line expands to a YANG structure that is shared amongst all services. The second line connects the service to the Java callback.
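The servicepoint name is the only link between the model and the code. The string in ncs:servicepoint must exactly match the servicePoint value of the Java @ServiceCallback annotation (shown later in this section). The plain-Java sketch below illustrates that name-based binding. It is not the NSO API: the annotation ServicePointSketch and the classes VlanCallbacks and ServicePointRegistry are hypothetical stand-ins that index annotated methods by name and dispatch on it.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Method;
import java.util.HashMap;
import java.util.Map;

// Hypothetical stand-in for NSO's @ServiceCallback(servicePoint = ...).
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface ServicePointSketch {
    String value();
}

// Hypothetical callback class. The annotation value must equal the YANG
// statement ncs:servicepoint "vlan-servicepoint" for the binding to work.
class VlanCallbacks {
    @ServicePointSketch("vlan-servicepoint")
    public String create(String serviceName) {
        return "Hello World! create called for " + serviceName;
    }
}

// Indexes annotated methods by servicepoint name and dispatches on that name.
class ServicePointRegistry {
    private final Map<String, Method> callbacks = new HashMap<>();
    private final Object target;

    ServicePointRegistry(Object target) {
        this.target = target;
        for (Method m : target.getClass().getMethods()) {
            ServicePointSketch sp = m.getAnnotation(ServicePointSketch.class);
            if (sp != null) {
                callbacks.put(sp.value(), m);
            }
        }
    }

    String create(String servicePoint, String serviceName) {
        Method m = callbacks.get(servicePoint);
        if (m == null) {
            // A mismatched name means no code is bound to the model.
            throw new IllegalStateException("No callback for servicepoint " + servicePoint);
        }
        try {
            return (String) m.invoke(target, serviceName);
        } catch (ReflectiveOperationException e) {
            throw new RuntimeException(e);
        }
    }

    public static void main(String[] args) {
        ServicePointRegistry registry = new ServicePointRegistry(new VlanCallbacks());
        System.out.println(registry.create("vlan-servicepoint", "net-0"));
    }
}
```

If the YANG model and the annotation were to disagree on the name, nothing would be invoked. In this sketch, a lookup with the wrong name fails with an exception.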

To build this service model, cd to packages/vlan/src and type make (assuming you have the make build system installed):

```
$ cd packages/vlan/src/
$ make
```

We can now test the service model by requesting NSO to reload all packages:

```
$ ncs_cli -C -u admin
admin@ncs# packages reload
>>> System upgrade is starting.
>>> Sessions in configure mode must exit to operational mode.
>>> No configuration changes can be performed until upgrade has completed.
>>> System upgrade has completed successfully.
result Done
```

You can also stop and start NSO, but then you must pass the option --with-package-reload when starting NSO. This is important: by default, NSO does not take any package changes into account when restarting. When packages are reloaded, the state/packages-in-use directory is updated.

Now create a VLAN service (nothing will happen yet, since we have not defined any mapping):

```
admin@ncs(config)# services vlan net-0 vlan-id 1234 device-if c0 interface 1/0
admin@ncs(config-device-if-c0)# top
admin@ncs(config)# commit
```

OK, that worked. Let us move on and connect the service to some device configuration using Java mapping. Note that Java mapping is not required. Templates are more straightforward and are recommended, but we use Java here as a "Hello World" introduction to Java service programming in NSO. At the end, we also show how to combine Java and templates. Templates define a vendor-independent mapping of service attributes to device configuration. Java is used as a thin layer in front of the templates to implement logic, call out to external systems, and so on.

The default configuration of the Java VM is:

```
admin@ncs(config)# show full-configuration java-vm | details
java-vm stdout-capture enabled
java-vm stdout-capture file ./logs/ncs-java-vm.log
java-vm connect-time 60
java-vm initialization-time 60
java-vm synchronization-timeout-action log-stop
java-vm jmx jndi-address 127.0.0.1
java-vm jmx jndi-port 9902
java-vm jmx jmx-address 127.0.0.1
java-vm jmx jmx-port 9901
```

By default, NSO starts the Java VM by invoking the command $NCS_DIR/bin/ncs-start-java-vm. That script invokes:

```
$ java com.tailf.ncs.NcsJVMLauncher
```

The class NcsJVMLauncher contains the main() method. The started Java VM automatically retrieves and deploys all Java code for the packages defined in the load-path of the ncs.conf file. No specification other than the package-meta-data.xml file of each package is needed.

The verbosity of Java error messages can be controlled by:

```
admin@ncs(config)# java-vm exception-error-message verbosity
Possible completions:
  standard  trace  verbose
```

For more detail on the Java VM settings, see The NSO Java VM.

The service model and the corresponding Java callback are bound by the service point name. Look at the service model in packages/vlan/src/yang:

The corresponding generated Java skeleton (with one "Hello World!" print statement added):

Modify the generated code to include the print "Hello World!" statement in the same way. Re-build the package:

```
$ cd packages/vlan/src/
$ make
```

Whenever a package has changed, we need to tell NSO to reload it. There are three ways:

- Reload only the implementation of a specific package. This does not load any model changes: admin@ncs# packages package vlan redeploy
- Reload all packages, including any model changes: admin@ncs# packages reload
- Restart NSO with the reload option: $ ncs --with-package-reload

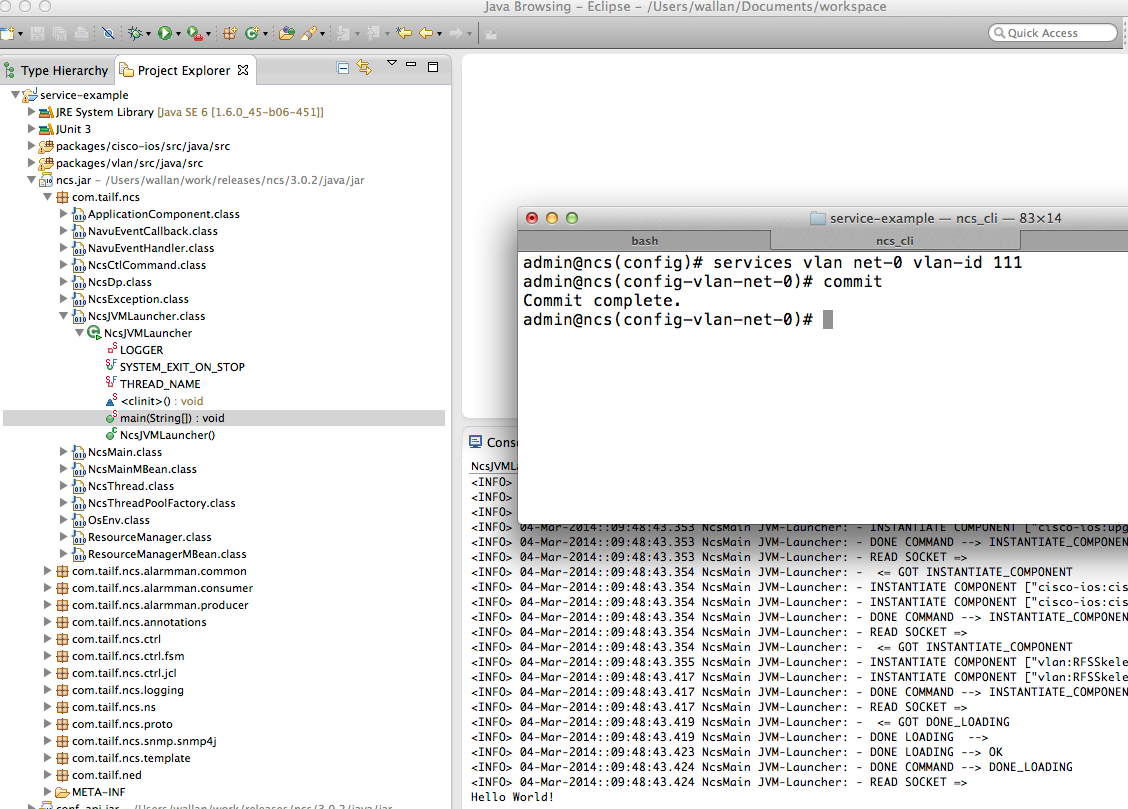

When that is done, we can create a service (or modify an existing one), and the callback is triggered:

```
admin@ncs(config)# services vlan net-0 vlan-id 888
admin@ncs(config-vlan-net-0)# commit
```

Now, have a look in the logs/ncs-java-vm.log:

```
$ tail ncs-java-vm.log
...
<INFO> 03-Mar-2014::16:55:23.705 NcsMain JVM-Launcher: \
  - REDEPLOY PACKAGE COLLECTION --> OK
<INFO> 03-Mar-2014::16:55:23.705 NcsMain JVM-Launcher: \
  - REDEPLOY ["vlan"] --> DONE
<INFO> 03-Mar-2014::16:55:23.706 NcsMain JVM-Launcher: \
  - DONE COMMAND --> REDEPLOY_PACKAGE
<INFO> 03-Mar-2014::16:55:23.706 NcsMain JVM-Launcher: \
  - READ SOCKET =>
Hello World!
```

Tailing ncs-java-vm.log is one way of developing. You can also start and stop the Java VM explicitly and see the trace in the shell. First, tell NSO not to start the VM by adding the following snippet to ncs.conf:

```
<java-vm>
  <auto-start>false</auto-start>
</java-vm>
```

Then, after restarting NSO or reloading the configuration, run from the shell prompt:

```
$ ncs-start-java-vm
.....
.. all stdout from JVM
```

Modifying or creating a VLAN service now makes the "Hello World!" string show up in the shell. You can modify the package, reload or redeploy it, and see the output.

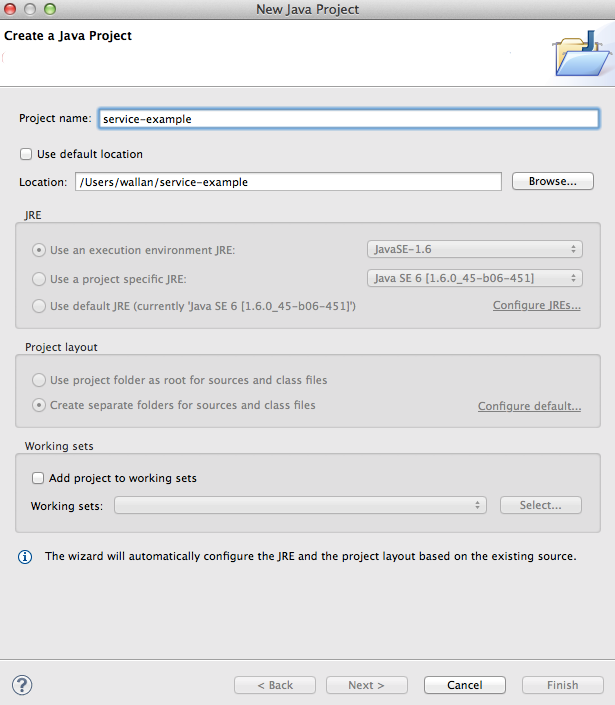

First, generate the environment for Eclipse:

```
$ ncs-setup --eclipse-setup
```

This generates two files, .classpath and .project. Add this directory to Eclipse with "File->New->Java Project", uncheck "Use the default location", and enter the directory where .classpath and .project were generated. We are then immediately ready to run this code in Eclipse.

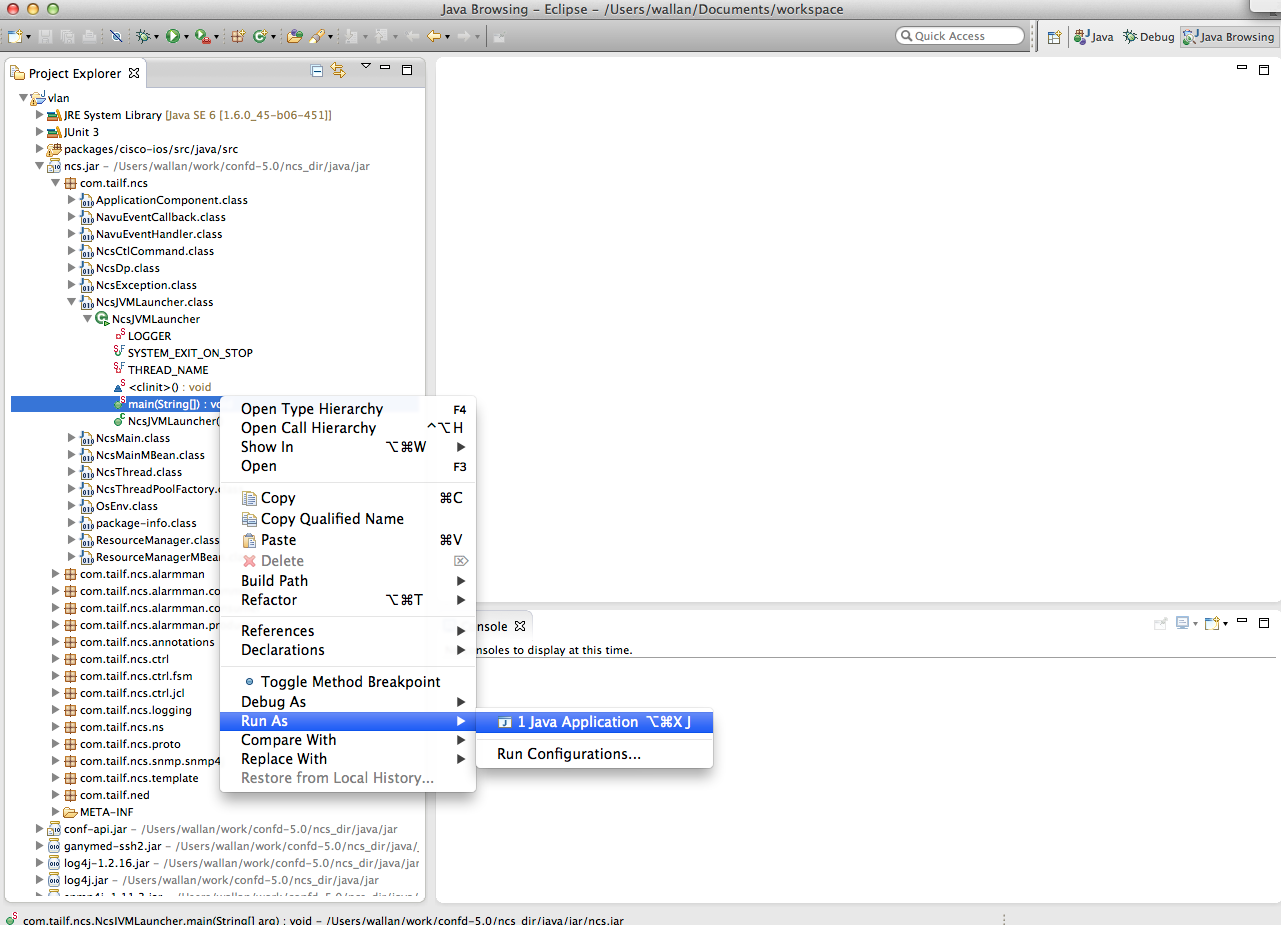

All we need to do is choose the main() routine in the NcsJVMLauncher class. The Eclipse debugger now works as usual, and we can start and stop the Java code at will.

One caveat worth mentioning is that there are a few timeouts between NSO and the Java code that trigger when we sit in the debugger. While developing with the Eclipse debugger and breakpoints, we typically want to disable all these timeouts. First, three timeouts in ncs.conf matter: set /ncs-config/japi/new-session-timeout, /ncs-config/japi/query-timeout, and /ncs-config/japi/connect-timeout to a large value. See the ncs.conf(5) man page for a detailed description of these values. If these timeouts are triggered, NSO closes all sockets to the Java VM and all bets are off.
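ncs.conf expresses these timeouts as XML Schema durations. The value used in the japi entry below, PT1000S, means 1000 seconds, or roughly 16.7 minutes. To sanity-check such a value, java.time.Duration.parse accepts the ISO 8601 day and time form. It rejects year and month fields, which xs:duration allows. The class name JapiTimeouts is made up for this sketch.

```java
import java.time.Duration;

// Hypothetical helper for checking ncs.conf duration values such as "PT1000S".
class JapiTimeouts {
    // Duration.parse handles the ISO 8601 day/time subset (PnDTnHnMn.nS).
    static Duration parse(String value) {
        return Duration.parse(value);
    }

    public static void main(String[] args) {
        Duration d = parse("PT1000S");
        // Prints the value in seconds and in whole minutes.
        System.out.println(d.getSeconds() + " s (" + d.toMinutes() + " whole minutes)");
    }
}
```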

```
$ cp $NCS_DIR/etc/ncs/ncs.conf .
```

Edit the file and enter the following XML entry just after the Webui entry:

```
<japi>
  <new-session-timeout>PT1000S</new-session-timeout>
  <query-timeout>PT1000S</query-timeout>
  <connect-timeout>PT1000S</connect-timeout>
</japi>
```

Now restart NSO, and from now on start it with:

```
$ ncs -c ./ncs.conf
```

You can verify that the Java VM is not running by checking the package status:

```
admin@ncs# show packages package vlan
packages package vlan
 package-version 1.0
 description "Skeleton for a resource facing service - RFS"
 ncs-min-version 3.0
 directory ./state/packages-in-use/1/vlan
 component RFSSkeleton
  callback java-class-name [ com.example.vlan.vlanRFS ]
 oper-status java-uninitialized
```

Create a new project and start the launcher main in Eclipse:

You can start and stop the Java VM from Eclipse. Note that this is not required. The change cycle is: modify the Java code, run make in the src directory, and then reload the package, all while NSO and the JVM are running.

Change the VLAN service and see the console output in Eclipse:

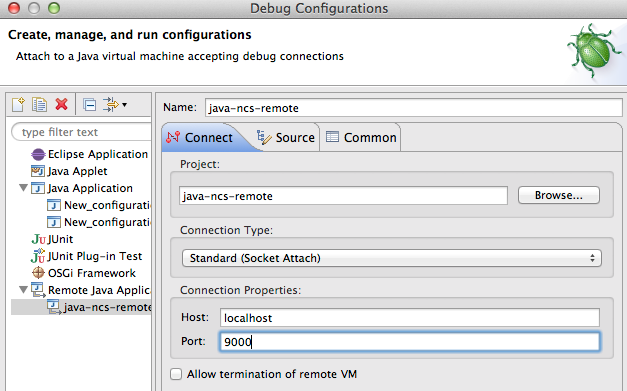

Another option is to have Eclipse connect to the running VM. Start the VM manually with the -d option.

```
$ ncs-start-java-vm -d
Listening for transport dt_socket at address: 9000
NCS JVM STARTING
...
```

Then you can set up Eclipse to connect to the NSO Java VM:

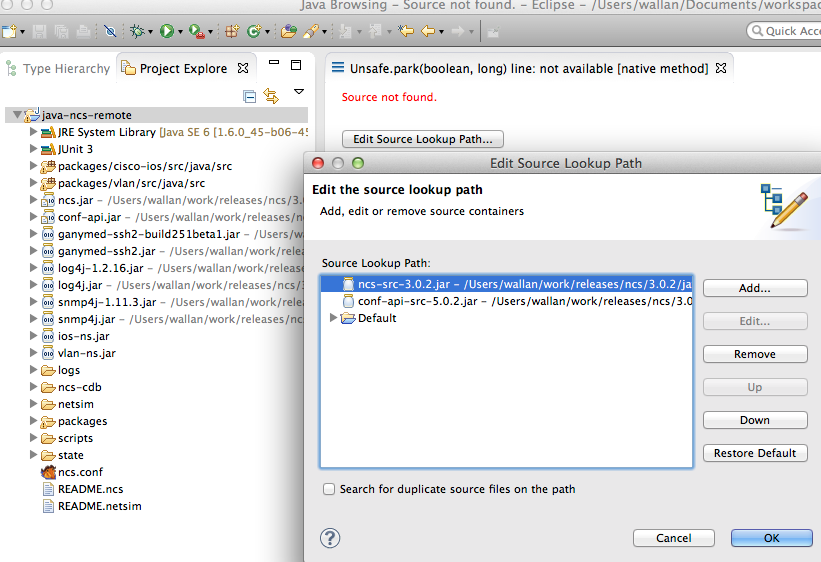

For Eclipse to show the NSO code when debugging, add the NSO source JARs (Add External JARs in Eclipse):

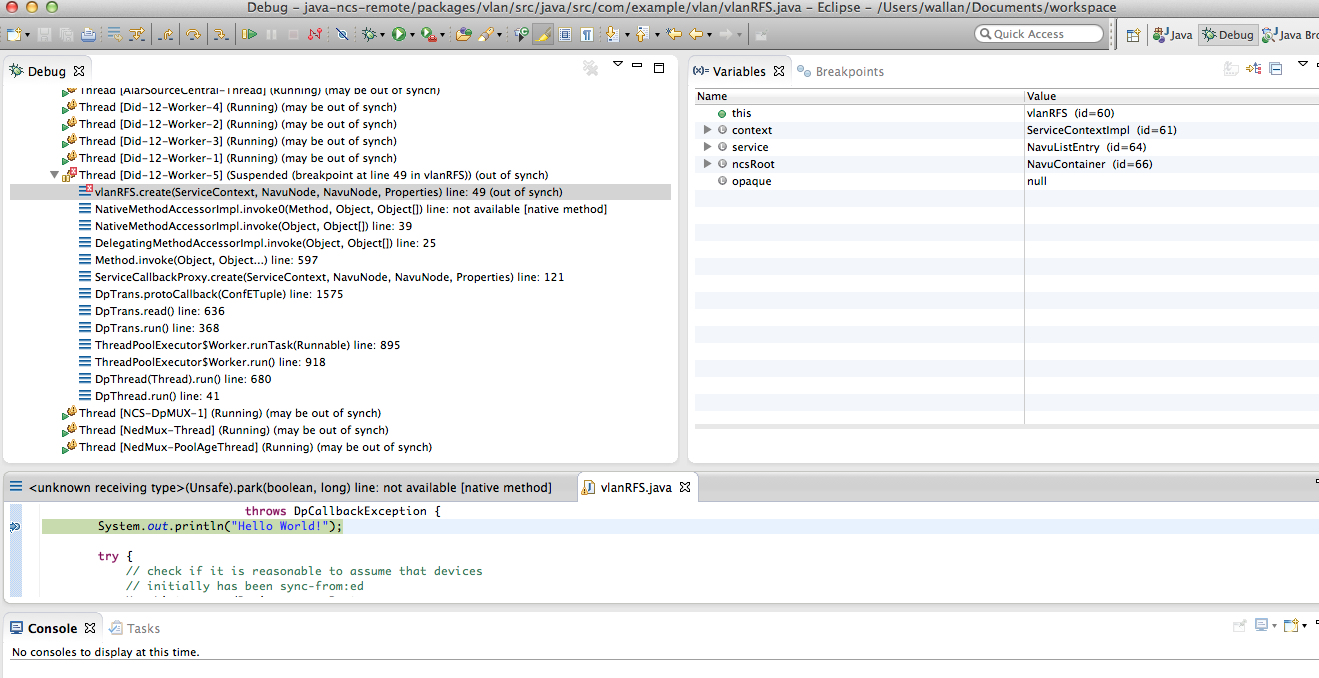

Navigate to the service create for the VLAN service and add a breakpoint:

Commit a change to a VLAN service instance, and Eclipse stops at the breakpoint:

So the problem at hand is that we have service parameters and need to produce the resulting device configuration. Earlier in this guide we showed how to do that with templates, and the same principles apply in Java. In NSO, the service model and the device models are YANG models, irrespective of the underlying protocol. The Java mapping code transforms the service attributes into the corresponding configuration leaves in the device model.

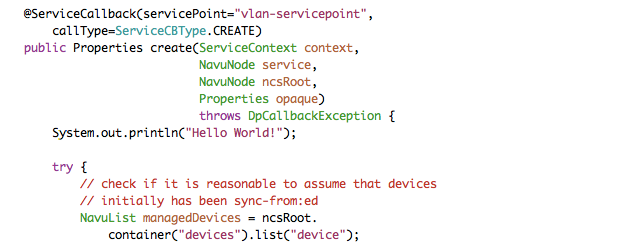

The NAVU API lets the Java programmer navigate the service model and the device models as a DOM tree. Have a look at the create signature:

```java
@ServiceCallback(servicePoint="vlan-servicepoint",
                 callType=ServiceCBType.CREATE)
public Properties create(ServiceContext context,
                         NavuNode service,
                         NavuNode ncsRoot,
                         Properties opaque)
                         throws DpCallbackException {
```

Two NAVU nodes are passed: the actual service instance, service, and the NSO root, ncsRoot.

We can take a first look at NAVU by analyzing the first try statement:

```java
try {
    // check if it is reasonable to assume that devices
    // initially has been sync-from:ed
    NavuList managedDevices =
        ncsRoot.container("devices").list("device");
    for (NavuContainer device : managedDevices) {
        if (device.list("capability").isEmpty()) {
            String mess = "Device %1$s has no known capabilities, " +
                          "has sync-from been performed?";
            String key = device.getKey().elementAt(0).toString();
            throw new DpCallbackException(String.format(mess, key));
        }
    }
```

NAVU is a lazily evaluated DOM tree that represents the instantiated YANG model. In the NSO model, devices/device (a container holding a list) is the list of managed devices, which can be retrieved with ncsRoot.container("devices").list("device"). Each entry in that list has its own capability list. The loop above checks that list to catch devices that have not been synchronized.
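To make "lazily evaluated" concrete, the following plain-Java sketch creates a child node only the first time it is navigated to, and then caches it. It is only an analogy for NAVU's behavior. LazyNode, the set of paths standing in for the data store, and the load log are all hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical lazily navigated tree, used only to illustrate the idea behind NAVU.
class LazyNode {
    private final String path;
    private final Set<String> store;     // stand-in for the data store: the paths that exist
    private final List<String> loadLog;  // records each path when it is first materialized
    private final Map<String, LazyNode> children = new HashMap<>();

    LazyNode(String path, Set<String> store, List<String> loadLog) {
        this.path = path;
        this.store = store;
        this.loadLog = loadLog;
    }

    static LazyNode root(Set<String> store, List<String> loadLog) {
        return new LazyNode("", store, loadLog);
    }

    // Navigate to a child; it is looked up and created only on first access.
    LazyNode child(String name) {
        LazyNode c = children.get(name);
        if (c == null) {
            String childPath = path + "/" + name;
            if (!store.contains(childPath)) {
                throw new IllegalArgumentException("No such node: " + childPath);
            }
            loadLog.add(childPath);
            c = new LazyNode(childPath, store, loadLog);
            children.put(name, c);
        }
        return c;
    }

    String path() {
        return path;
    }

    public static void main(String[] args) {
        Set<String> store = new HashSet<>(Arrays.asList("/devices", "/devices/device", "/services"));
        List<String> log = new ArrayList<>();
        // Analogous to ncsRoot.container("devices").list("device").
        LazyNode devices = root(store, log).child("devices").child("device");
        System.out.println(devices.path() + " loaded: " + log);
    }
}
```

Only the branch that is actually navigated gets loaded. In this example, /services is never touched.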

The service node can be used to fetch the values of the VLAN service instance:

- vlan/name
- vlan/vlan-id
- vlan/device-if/device-name and vlan/device-if/interface

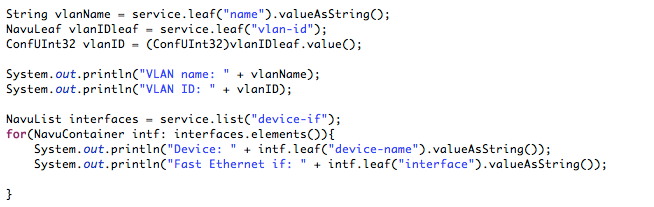

A first snippet that iterates the service model and prints to the console looks like below:

The com.tailf.conf package contains Java classes representing the YANG types, such as ConfUInt32.
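The snippet itself is not reproduced here, so the following plain-Java sketch shows what such an iteration does. It uses hypothetical VlanServiceSketch and DeviceIf classes instead of NAVU nodes. It also shows why a YANG uint32 value is held in a Java long: the type's range does not fit in an int.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for one device-if list entry.
class DeviceIf {
    final String deviceName;
    final String iface;

    DeviceIf(String deviceName, String iface) {
        this.deviceName = deviceName;
        this.iface = iface;
    }
}

// Hypothetical stand-in for a vlan service instance. In NSO these values
// would be read through NAVU, starting from service.leaf("vlan-id") etc.
class VlanServiceSketch {
    final String name;
    final long vlanId; // YANG uint32 (0..4294967295) does not fit in a Java int
    final List<DeviceIf> deviceIfs = new ArrayList<>();

    VlanServiceSketch(String name, long vlanId) {
        this.name = name;
        this.vlanId = vlanId;
    }

    // Walk the instance: the service leaves first, then one line per device-if entry.
    List<String> describe() {
        List<String> lines = new ArrayList<>();
        lines.add("vlan " + name + " vlan-id " + vlanId);
        for (DeviceIf d : deviceIfs) {
            lines.add("  device " + d.deviceName + " interface " + d.iface);
        }
        return lines;
    }

    public static void main(String[] args) {
        VlanServiceSketch s = new VlanServiceSketch("net-0", 844);
        s.deviceIfs.add(new DeviceIf("c0", "1/0"));
        for (String line : s.describe()) {
            System.out.println(line);
        }
    }
}
```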

Try it out with the following sequence:

- Rebuild the Java code: in packages/vlan/src, type make.
- Reload the package: in the NSO Cisco-style CLI, run admin@ncs# packages package vlan redeploy.
- Create or modify a VLAN service: in the NSO CLI, run admin@ncs(config)# services vlan net-0 vlan-id 844 device-if c0 interface 1/0, and commit.

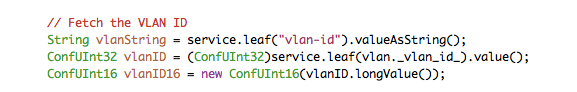

Remember that the service node is passed as a parameter to the create method. As a starting point, look at the first three lines:

- To reach a specific leaf in the model, use the NAVU leaf method with the name of the leaf as its parameter. The leaf then offers various methods, for example for getting its value as a string.
- service.leaf("vlan-id") and service.leaf(vlan._vlan_id_) are two ways of referring to the vlan-id leaf of the service. The latter uses symbols generated by the compilation steps, which gives you the benefit of compile-time checking. From this leaf you can get the value according to its type in the YANG model, which is ConfUInt32 in this case.
- Line 3 shows an example of casting between types. Here we prepare the VLAN ID as a 16-bit unsigned integer for later use.
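Java has no unsigned 16-bit primitive, so a narrowing like this is usually done on a wider type with an explicit range check. The sketch below uses a hypothetical VlanIdConversion class. It checks the model's range "1..4096" and then narrows the uint32 value (held in a long) to a 16-bit unsigned value (held in an int). In the NSO code, the result would presumably be wrapped in a com.tailf.conf type such as ConfUInt16, which is not used here.

```java
// Hypothetical helper illustrating a checked uint32 -> uint16 narrowing.
class VlanIdConversion {
    // Bounds from the YANG model: range "1..4096".
    static final long MODEL_MIN = 1;
    static final long MODEL_MAX = 4096;
    static final long UINT16_MAX = 0xFFFFL;

    static boolean inModelRange(long vlanId) {
        return vlanId >= MODEL_MIN && vlanId <= MODEL_MAX;
    }

    // Narrow to 16 bits, refusing any value that would be silently truncated.
    static int toUInt16(long uint32Value) {
        if (uint32Value < 0 || uint32Value > UINT16_MAX) {
            throw new IllegalArgumentException("Value does not fit in uint16: " + uint32Value);
        }
        return (int) uint32Value;
    }

    public static void main(String[] args) {
        long fromModel = 844;
        if (inModelRange(fromModel)) {
            System.out.println("vlanID16 = " + toUInt16(fromModel));
        }
    }
}
```

NSO already validates the YANG range when the configuration is committed. The model-range check therefore matters only for values that come from somewhere other than the service model.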

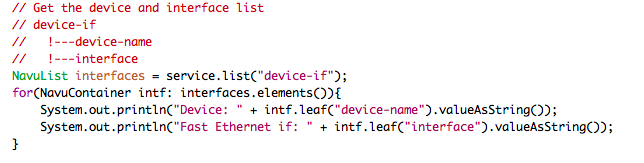

The next step is to iterate over the devices and interfaces. The NAVU method elements() returns the elements of a NAVU list.

To write the mapping code, make sure you understand the device model. One good way of doing that is to create a corresponding configuration on one device and then display it with the pipe target "display xpath". Below is CLI output that shows the model paths for "FastEthernet 1/0":

```
admin@ncs% show devices device c0 config ios:interface FastEthernet 1/0 | display xpath
/devices/device[name='c0']/config/ios:interface/FastEthernet[name='1/0']/switchport/mode/trunk
/devices/device[name='c0']/config/ios:interface/FastEthernet[name='1/0']/switchport/trunk/allowed/vlan/vlans [ 111 ]
```

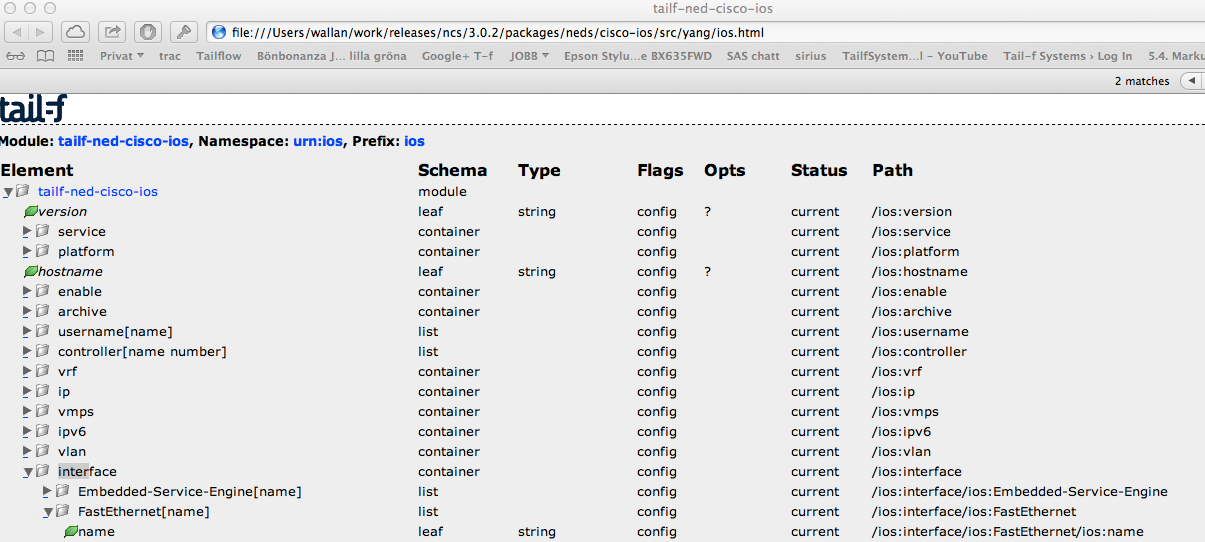

Another useful tool is to render a tree view of the model:

```
$ pyang -f jstree tailf-ned-cisco-ios.yang -o ios.html
```

This can then be opened in a web browser, with the model paths shown to the right:

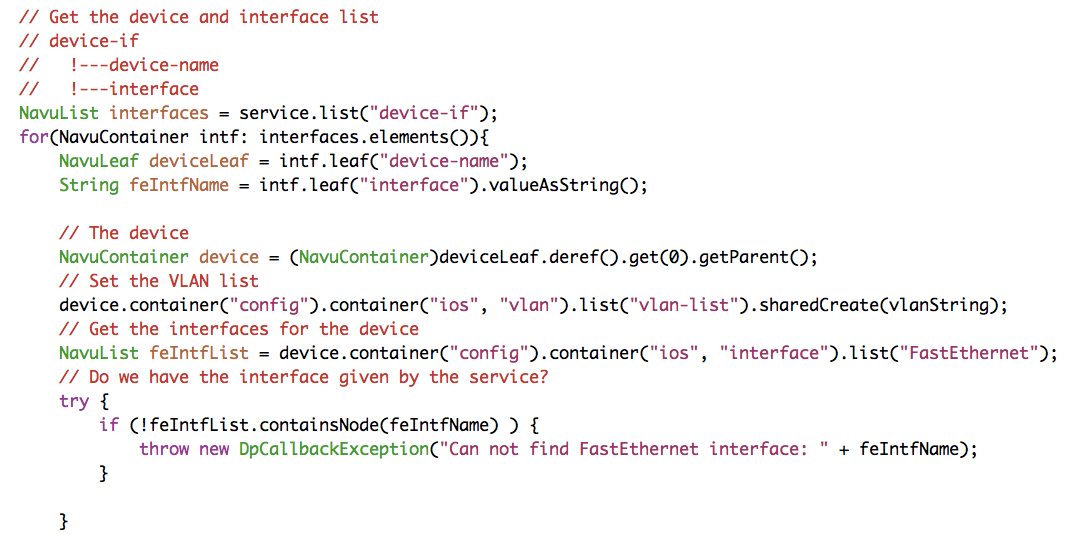

Now, we replace the print statements with setting real configuration on the devices.

Let us walk through the above code line by line. The device-name leaf is a leafref, and the deref method returns the object that the leafref refers to. The getParent() call might surprise the reader. Look at the path the leafref points to: /ncs:devices/ncs:device/ncs:name. The leafref refers to the name leaf, which is the key that identifies a specific device. deref returns that key leaf, but we want a reference to the device entry itself (/ncs:devices/ncs:device). That is the reason for the getParent().

The next line sets the vlan-list on the device. Note that this follows the paths displayed earlier using the NSO CLI. The sharedCreate() call is important. It creates device configuration on behalf of this service, and it signals that other services might also create the same value ("shared"). Shared create maintains reference counters for the created configuration, so that deleting a service removes the configuration only when the last service using it is deleted. Finally, the interface name is used as a key to check whether the interface exists, using containsNode().
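The reference counting behind sharedCreate() can be pictured with the following plain-Java sketch. It is a simplified model, not NSO's implementation. SharedConfig is hypothetical, configuration nodes are plain strings, and a node's reference count is the number of services that have created it.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

// Hypothetical model of reference-counted ("shared") device configuration.
class SharedConfig {
    // config node -> services that created it; the set size is the reference count
    private final Map<String, Set<String>> owners = new HashMap<>();

    // Create the node if needed and record this service as one of its owners.
    void sharedCreate(String node, String service) {
        owners.computeIfAbsent(node, n -> new TreeSet<>()).add(service);
    }

    // Deleting a service drops its references; a node disappears only when
    // the last service referring to it is gone.
    void deleteService(String service) {
        for (Set<String> s : owners.values()) {
            s.remove(service);
        }
        owners.values().removeIf(Set::isEmpty);
    }

    int refCount(String node) {
        Set<String> s = owners.get(node);
        return s == null ? 0 : s.size();
    }

    boolean exists(String node) {
        return owners.containsKey(node);
    }

    public static void main(String[] args) {
        SharedConfig cfg = new SharedConfig();
        cfg.sharedCreate("c0 vlan 1234", "net-0");
        cfg.sharedCreate("c0 vlan 1234", "net-1");
        cfg.deleteService("net-0");
        System.out.println("after deleting net-0: exists=" + cfg.exists("c0 vlan 1234"));
        cfg.deleteService("net-1");
        System.out.println("after deleting net-1: exists=" + cfg.exists("c0 vlan 1234"));
    }
}
```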

The last step is to update the VLAN list for each interface. The code below adds an element to the VLAN leaf-list.

```java
// The interface
NavuNode theIf = feIntfList.elem(feIntfName);
theIf.container("switchport").
    sharedCreate().
    container("mode").
    container("trunk").
    sharedCreate();
// Create the VLAN leaf-list element
theIf.container("switchport").
    container("trunk").
    container("allowed").
    container("vlan").
    leafList("vlans").
    sharedCreate(vlanID16);
```

The above create method is all that is needed for create, read, update, and delete. NSO automatically handles any change, such as changing the VLAN ID, adding an interface to the VLAN service, or deleting the service. Play with the CLI, modifying and deleting VLAN services, to see this for yourself. This is handled by the FASTMAP engine, which renders any change based on the single definition of the create method.
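Here is a much simplified picture of why a single create method is enough. Suppose create is a function from service parameters to the complete device configuration they require. Then any update or delete is just the difference between what the old and the new parameters produce. The sketch below shows only that principle. FastMapSketch, its string encoding of configuration, and its diff are hypothetical, and NSO's FASTMAP engine does not necessarily work this way internally.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;
import java.util.TreeSet;

class FastMapSketch {
    // Hypothetical "create": every config item a VLAN service needs, given its
    // VLAN ID and a map of device name -> interface name.
    static Set<String> create(long vlanId, Map<String, String> deviceIfs) {
        Set<String> config = new TreeSet<>();
        for (Map.Entry<String, String> e : deviceIfs.entrySet()) {
            config.add(e.getKey() + ": vlan " + vlanId);
            config.add(e.getKey() + ": interface " + e.getValue() + " allowed vlan " + vlanId);
        }
        return config;
    }

    // The minimal change set that turns one output of create() into another.
    static List<String> diff(Set<String> before, Set<String> after) {
        List<String> ops = new ArrayList<>();
        for (String item : before) {
            if (!after.contains(item)) {
                ops.add("delete " + item);
            }
        }
        for (String item : after) {
            if (!before.contains(item)) {
                ops.add("create " + item);
            }
        }
        return ops;
    }

    public static void main(String[] args) {
        Map<String, String> ifs = new TreeMap<>();
        ifs.put("c0", "1/0");
        Set<String> v1234 = create(1234, ifs);
        // Update: change the VLAN ID from 1234 to 888.
        System.out.println(diff(v1234, create(888, ifs)));
        // Delete: the desired state after deletion is empty.
        System.out.println(diff(v1234, Collections.<String>emptySet()));
    }
}
```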

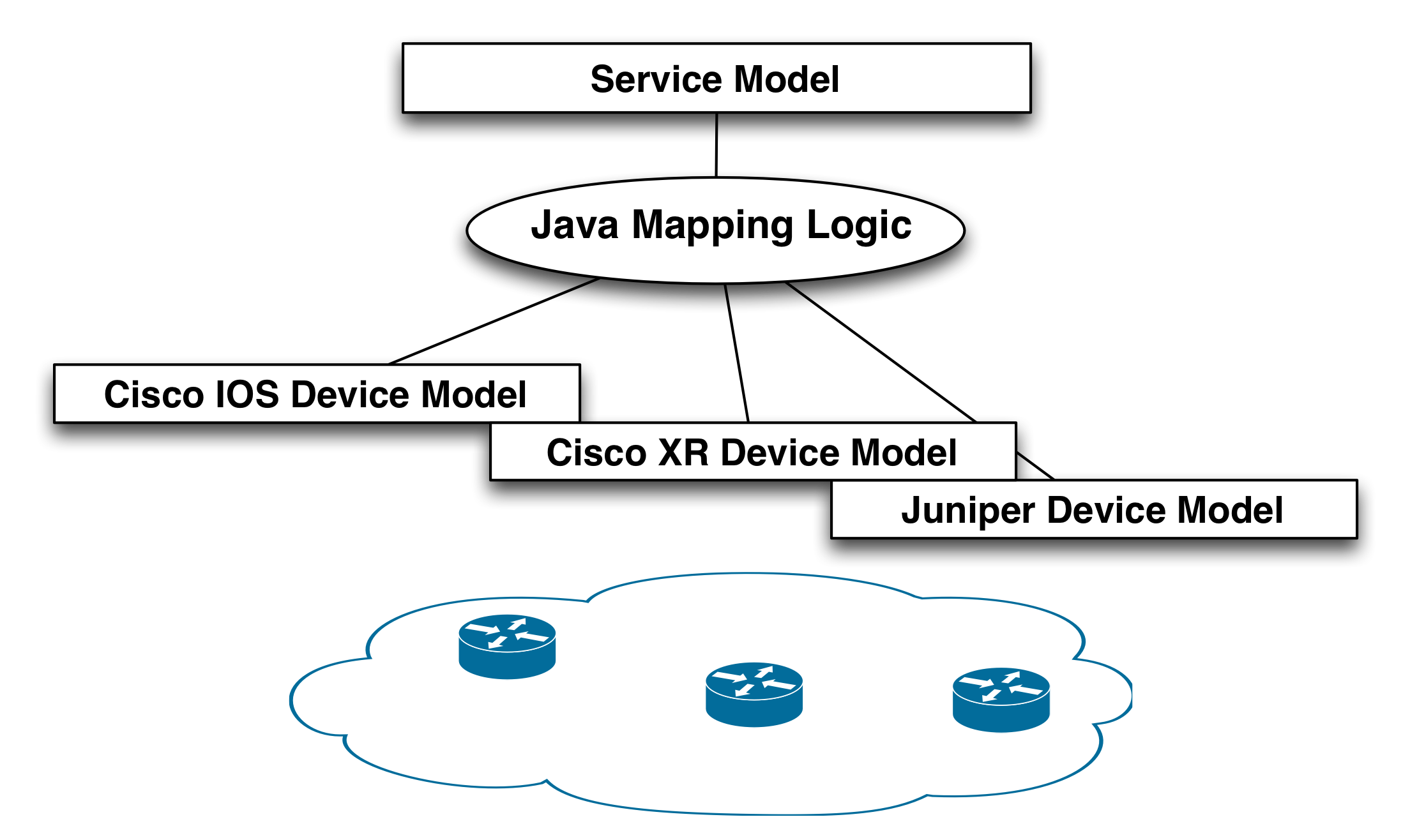

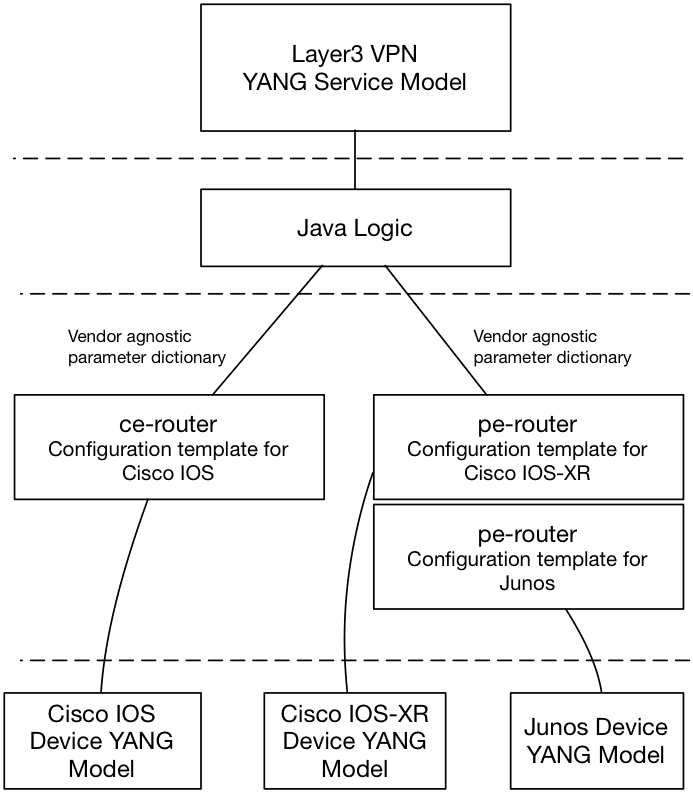

We have shown two ways of mapping a service model to device configurations: service templates and Java. The mapping strategy using only Java is illustrated in the figure below.

This strategy has some drawbacks:

- Managing different device vendors. If we introduced more vendors in the network, the Java code would need to handle them. This can of course be factored into separate classes, keeping the general logic clean and passing only the device details to vendor-specific classes. However, this gets complex, and introducing new device types always requires Java programmers.
- No clear separation of concerns and domain expertise. The general business logic for a service is one thing; detailed configuration knowledge of device types is another. The latter requires network engineers, while the former is normally handled by a separate team that deals with OSS integration.

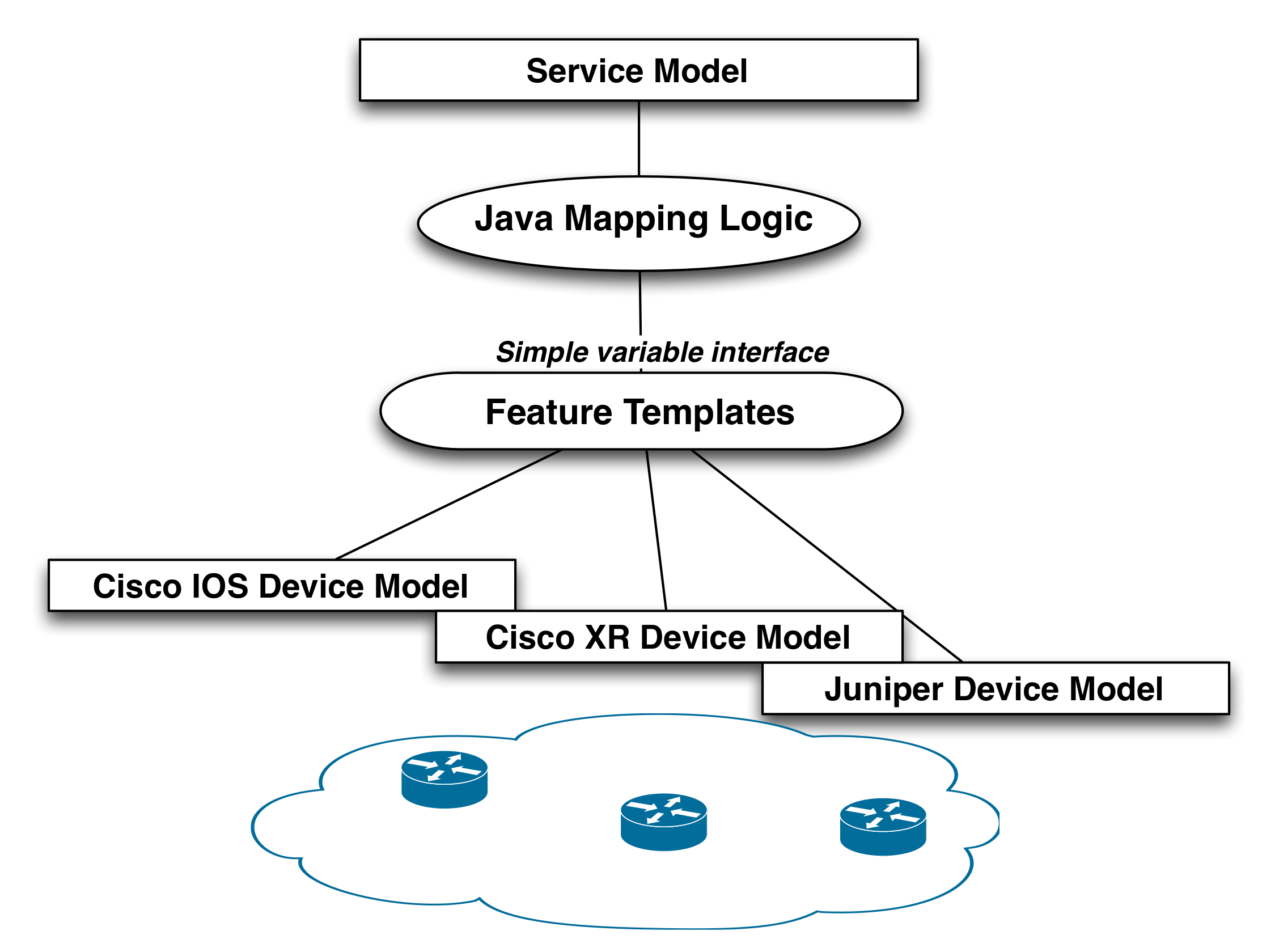

Java and templates can be combined as shown below:

In this model, the Java layer focuses on the required logic but never touches the concrete device models of the various vendors. The vendor-specific details are abstracted away using feature templates. The templates take variables as input from the service logic and transform them into concrete device configuration. Introducing a new device type does not affect the Java mapping.

This approach has several benefits:

- The service logic can be developed independently of device types.
- New device types can be introduced at runtime without affecting the service logic.
- Separation of concerns: network engineers are comfortable with templates, since they look like configuration snippets. They also have the expertise in how configuration is applied to real devices. The people defining the service logic are often more like programmers who need to interface with other systems and so on, which suits a Java layer.

Note that the logic layer does not understand the device types, the templates will dynamically apply the correct leg of the template depending on which device is touched.

From an abstraction point of view, we want a template that takes the following variables:

- VLAN ID
- Device and interface

So the mapping logic can just pass these variables to the feature template and it will apply it to a multi-vendor network.

Create a template as described before:

- Create a concrete configuration on a device, or on several devices of different types.
- Request NSO to display that configuration as XML.
- Replace values with variables.

This results in a feature template like below:

```xml
<!-- Feature Parameters -->
<!-- $DEVICE -->
<!-- $VLAN_ID -->
<!-- $INTF_NAME -->
<config-template xmlns="http://tail-f.com/ns/config/1.0"
                 servicepoint="vlan">
  <devices xmlns="http://tail-f.com/ns/ncs">
    <device>
      <name>{$DEVICE}</name>
      <config>
        <vlan xmlns="urn:ios" tags="merge">
          <vlan-list>
            <id>{$VLAN_ID}</id>
          </vlan-list>
        </vlan>
        <interface xmlns="urn:ios" tags="merge">
          <FastEthernet tags="nocreate">
            <name>{$INTF_NAME}</name>
            <switchport>
              <trunk>
                <allowed>
                  <vlan tags="merge">
                    <vlans>{$VLAN_ID}</vlans>
                  </vlan>
                </allowed>
              </trunk>
            </switchport>
          </FastEthernet>
        </interface>
      </config>
    </device>
  </devices>
</config-template>
```

This template only maps to Cisco IOS devices (the xmlns="urn:ios" namespace), but you can add "legs" for other device types at any time and reload the package.

Note

Nodes set with a template variable evaluating to the empty string are ignored, e.g., the setting <some-tag>{$VAR}</some-tag> is ignored if the template variable $VAR evaluates to the empty string. However, this does not apply to XPath expressions evaluating to the empty string. A template variable can be surrounded by the XPath function string() if it is desirable to set a node to the empty string.
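To make the rule in this note concrete, here is a toy substitution sketch in plain Java. It is not NSO's template engine. TemplateSketch is hypothetical, and a template is reduced to a map from leaf paths to values. Only values consisting of exactly one {$VAR} reference are expanded, and rejecting undefined variables is a choice made for this sketch.

```java
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class TemplateSketch {
    // Matches a value that is exactly one variable reference, e.g. {$VLAN_ID}.
    private static final Pattern VAR = Pattern.compile("\\{\\$([A-Za-z_][A-Za-z0-9_]*)\\}");

    // leaves: leaf path -> template value; vars: variable name -> value.
    static Map<String, String> apply(Map<String, String> leaves, Map<String, String> vars) {
        Map<String, String> result = new LinkedHashMap<>();
        for (Map.Entry<String, String> leaf : leaves.entrySet()) {
            Matcher m = VAR.matcher(leaf.getValue());
            if (!m.matches()) {
                result.put(leaf.getKey(), leaf.getValue()); // literal value, copied as-is
                continue;
            }
            String value = vars.get(m.group(1));
            if (value == null) {
                throw new IllegalArgumentException("Undefined variable $" + m.group(1));
            }
            if (value.isEmpty()) {
                continue; // empty-string variable: the node is ignored, not set to ""
            }
            result.put(leaf.getKey(), value);
        }
        return result;
    }

    public static void main(String[] args) {
        Map<String, String> leaves = new LinkedHashMap<>();
        leaves.put("device/name", "{$DEVICE}");
        leaves.put("vlan/vlan-list/id", "{$VLAN_ID}");
        leaves.put("interface/description", "{$DESC}");
        Map<String, String> vars = new HashMap<>();
        vars.put("DEVICE", "c0");
        vars.put("VLAN_ID", "1234");
        vars.put("DESC", "");
        System.out.println(apply(leaves, vars)); // the description leaf is skipped
    }
}
```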

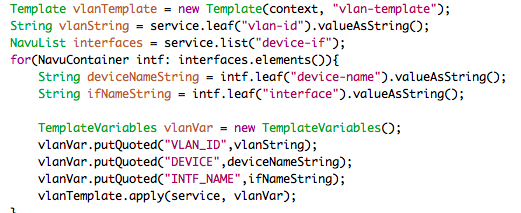

The Java mapping logic for applying the template is shown below:

Note that the Java code has no knowledge of the underlying device type; it just passes the feature variables to the template. At run-time, you can update the template with mappings for other device types. The Java code stays untouched. If you modify an existing VLAN service instance to refer to a device of the new type, the commit generates the corresponding configuration for that device.
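The following plain-Java sketch shows the shape of that hand-off. For each device-if entry, it builds one set of values for the template's DEVICE, VLAN_ID and INTF_NAME variables, without any reference to device type. VlanTemplateVars is hypothetical. In the real code, the values would be passed to NSO's template API rather than returned as maps.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

class VlanTemplateVars {
    // One variable set per device-if entry; nothing here depends on the vendor.
    static List<Map<String, String>> forService(long vlanId, Map<String, String> deviceIfs) {
        List<Map<String, String>> result = new ArrayList<>();
        for (Map.Entry<String, String> e : deviceIfs.entrySet()) {
            Map<String, String> vars = new LinkedHashMap<>();
            vars.put("DEVICE", e.getKey());
            vars.put("VLAN_ID", Long.toString(vlanId));
            vars.put("INTF_NAME", e.getValue());
            result.add(vars);
        }
        return result;
    }

    public static void main(String[] args) {
        Map<String, String> deviceIfs = new TreeMap<>();
        deviceIfs.put("c0", "1/0");
        deviceIfs.put("c1", "1/1");
        for (Map<String, String> vars : forService(1234, deviceIfs)) {
            System.out.println(vars); // each map would be applied to the same template
        }
    }
}
```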

The attentive reader may ask, "Why have the Java layer at all?" This could have been done as a pure template solution. That is true, but this simple Java layer leaves room for arbitrarily complex service logic before the template is applied.

The steps to build the solution described in this section are:

- Create a run-time directory: $ mkdir ~/service-template; cd ~/service-template
- Generate a netsim environment: $ ncs-netsim create-network $NCS_DIR/packages/neds/cisco-ios 3 c
- Generate the NSO runtime environment: $ ncs-setup --netsim-dir ./netsim --dest ./
- Create the VLAN package in the packages directory: $ cd packages; ncs-make-package --service-skeleton java vlan
- Create a template directory in the VLAN package: $ cd vlan; mkdir templates
- Save the template described above in packages/vlan/templates.
- Create the YANG service model as described above: packages/vlan/src/yang/vlan.yang
- Update the Java code as described above: packages/vlan/src/java/src/com/example/vlan/vlanRFS.java
- Build the package: in packages/vlan/src, run make.
- Start NSO.

The purpose of this section is to show a more complete example of a service mapping. It is based on the example examples.ncs/service-provider/mpls-vpn.

In the previous sections, we looked at service mapping where the input parameters are enough to generate the corresponding device configurations. In many cases, they are not. The service mapping logic may need to reach out to other data in order to generate the device configuration. This is common in the following scenarios:

- Policies: it might make sense to define policies that can be shared between service instances. The policies, for example QoS, have data models of their own (not service models), and the mapping code reads from them.
- Topology information: the service mapping might need to know about connected devices, such as which PE the CE is connected to.
- Resources like VLAN IDs and IP addresses: these might not be given as input parameters. They can be modeled separately in NSO or fetched from an external system.

It is important to design the service model with the above examples in mind: what is input, and what is available from other sources? This example illustrates how to define QoS policies "on the side". A reference to an existing QoS policy is passed as input, which is a much better principle than giving all QoS parameters to every service instance. Note that if you modify the QoS definitions that services refer to, the existing services do not change. For a service to pick up the changed policies, you need to re-deploy the service.

This example also uses a list that maps every CE to a PE. This list needs to be populated before any service is created. The service model only has the CE as an input parameter, and the service mapping code performs a lookup in this list to get the PE. If the underlying topology changes, a service re-deploy adapts the service to the changed CE-PE links. See more on topology below.

NSO has a package to manage resources like VLAN and IP addresses as a pool within NSO. In this way the resources are managed within the transaction. The mapping code could also reach out externally to get resources. The Reactive FASTMAP pattern is recommended for this.

Using topology information in the instantiation of an NSO service is a common approach, but also an area with many misconceptions. A service in NSO takes a black-box view of the configuration it needs in the network, and NSO treats topologies the same way. It is of course common to reference topology information in a service. Even so, it is highly desirable to have a decoupled, self-sufficient service that uses only the part of the topology needed for that specific service.

Other parts of the topology can either be handled by other services or left for the network state to sort out. They do not necessarily relate to configuring the network. A routing protocol, for example, handles the IP path through the network.

It is highly desirable to not introduce unneeded dependencies towards network topologies in your service.

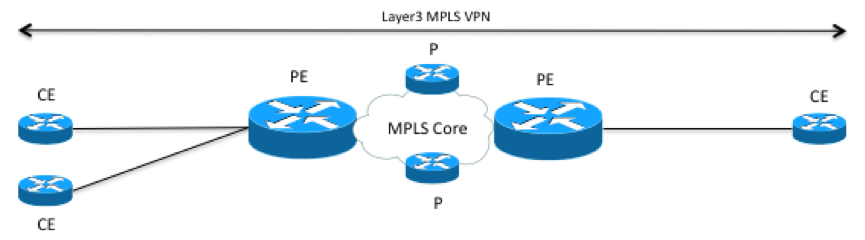

To illustrate this, let's look at a Layer 3 MPLS VPN service. A logical overview of an MPLS VPN with three endpoints could look something like this: CE routers connect to PE routers, which are connected to an MPLS core network. In the MPLS core network there are a number of P routers.

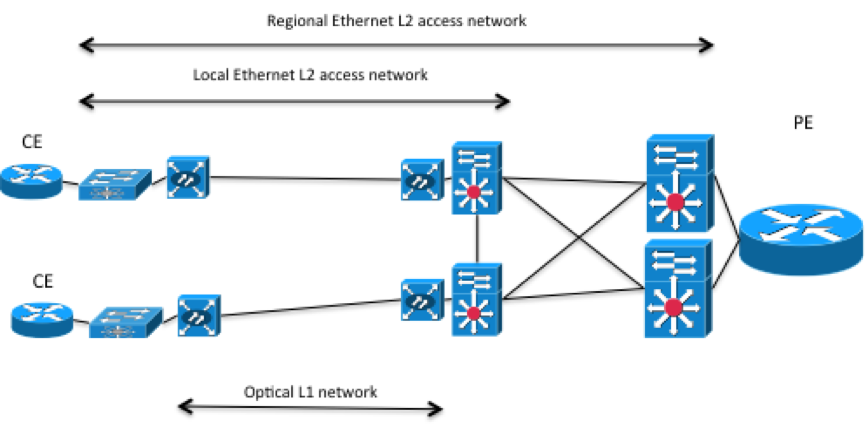

In the service model, you only want to configure the CE devices to use as endpoints. In this case, topology information could be used to sort out which PE router each CE router is connected to. However, what type of topology do you need? Let's look at a more detailed picture of what the L1 and L2 topology could look like for one side of the picture above.

In pretty much all networks, there is an access network between the CE and PE routers. In the picture above, the CE routers are connected to local Ethernet switches, which are connected to a local Ethernet access network through optical equipment. The local Ethernet access network is connected to a regional Ethernet access network, which is connected to the PE router. The physical connections between the devices in this picture have most likely been simplified; in the real world, redundant cabling would be used. This is of course only one example of how an access network could look. A service provider very likely has several access technologies, for example Ethernet, ATM, or DSL-based access networks.

Depending on how you design the L3VPN service, the physical cabling or the exact traffic path taken in the layer 2 Ethernet access network might not be that interesting. Likewise, we make no assumptions about, and do not care about, how traffic is transported over the MPLS core network. In both cases, we trust the underlying protocols that handle state in the network: spanning tree in the Ethernet access network, and routing protocols like BGP in the MPLS cloud. Instead, it could make more sense here to have a separate NSO service for the access network, for two reasons. First, it can then be reused by, for example, both L3VPNs and L2VPNs. Second, the access network is not tightly coupled to the L3VPN service, since it can differ (Ethernet, ATM, etc.).

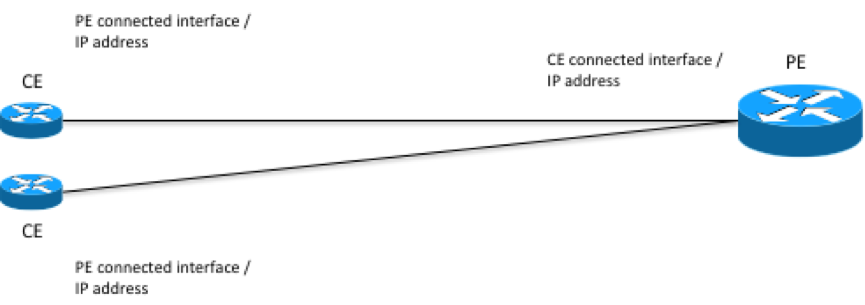

Looking at the topology again from the L3VPN service perspective, if services assume that the access network is already provisioned or taken care of by another service, it could look like this.

The information needed to determine which PE router a CE router is connected to, and to configure both the CE and PE routers, is:

- The interface on the CE router that is connected to the PE router, and the IP address of that interface.
- The interface on the PE router that is connected to the CE router, and the IP address of that interface.

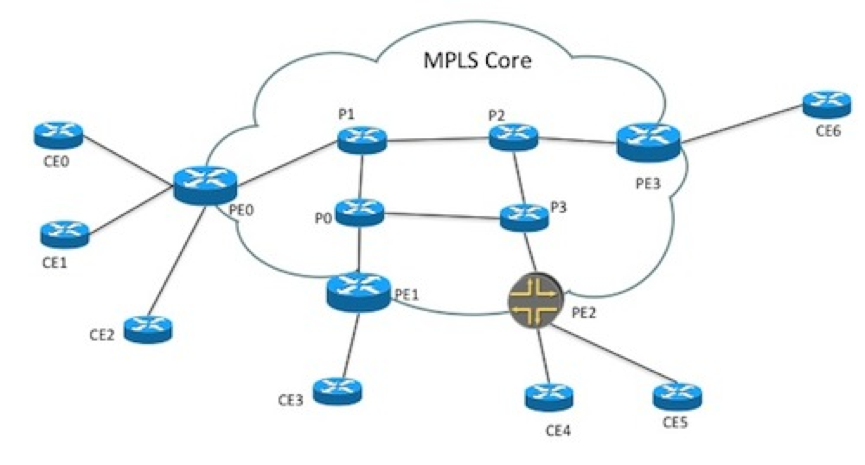

This section describes the creation of an MPLS L3VPN service in a multi-vendor environment, applying the concepts described above. The example discussed can be found in examples.ncs/service-provider/mpls-vpn. The example network consists of Cisco ASR 9k and Juniper core routers (P and PE) and Cisco IOS-based CE routers.

The goal of the NSO service is to set up an MPLS Layer 3 VPN on a number of CE router endpoints, using BGP as the CE-PE routing protocol. Connectivity between the CE and PE routers is provided by a Layer 2 Ethernet access network, which is out of scope for this service. In a real-world scenario, the access network could, for example, be handled by another service.

In the example network we can also assume that the MPLS core network already exists and is configured.

When designing service YANG models, there are a number of things to take into consideration. The process usually involves the following steps:

- Identify the resulting device configurations for a deployed service instance.
- Identify which parameters from the device configurations are common and should be put in the service model.
- Ensure that the scope of the service and the structure of the model work with the NSO architecture and service mapping concepts. For example, avoid unnecessary complexity in the code that works with the service parameters.
- Ensure that the model is structured so that integration with other systems north of NSO works well. For example, ensure that the parameters in the service model map to the needed parameters from an ordering system.

Step 1 and 2: Device Configurations and Identifying parameters

Deploying an MPLS VPN in the network results in the following basic CE and PE configurations. The snippets below only include the Cisco IOS and Cisco IOS-XR configurations. In a real process, the configurations for all applicable device vendors should be analyzed.

```
interface GigabitEthernet0/1.77
 description Link to PE / pe0 - GigabitEthernet0/0/0/3
 encapsulation dot1Q 77
 ip address 192.168.1.5 255.255.255.252
 service-policy output volvo
!
policy-map volvo
 class class-default
  shape average 6000000
 !
!
interface GigabitEthernet0/11
 description volvo local network
 ip address 10.7.7.1 255.255.255.0
exit
router bgp 65101
 neighbor 192.168.1.6 remote-as 100
 neighbor 192.168.1.6 activate
 network 10.7.7.0
!
```

```
vrf volvo
 address-family ipv4 unicast
  import route-target
   65101:1
  exit
  export route-target
   65101:1
  exit
 exit
exit
policy-map volvo-ce1
 class class-default
  shape average 6000000 bps
 !
 end-policy-map
!
interface GigabitEthernet 0/0/0/3.77
 description Link to CE / ce1 - GigabitEthernet0/1
 ipv4 address 192.168.1.6 255.255.255.252
 service-policy output volvo-ce1
 vrf volvo
 encapsulation dot1q 77
exit
router bgp 100
 vrf volvo
  rd 65101:1
  address-family ipv4 unicast
  exit
  neighbor 192.168.1.5
   remote-as 65101
   address-family ipv4 unicast
    as-override
   exit
  exit
 exit
exit
```

The device configuration parameters that need to be uniquely configured for each VPN have been marked in bold.

Step 3 and 4: Model Structure and Integration with other Systems

When configuring a new MPLS l3vpn in the network we will have to configure all CE routers that should be interconnected by the VPN, as well as the PE routers they connect to.

However when creating a new l3vpn service instance in NSO it would be ideal if only the endpoints (CE routers) are needed as parameters to avoid having knowledge about PE routers in a northbound order management system. This means a way to use topology information is needed to derive or compute what PE router a CE router is connected to. This makes the input parameters for a new service instance very simple. It also makes the entire service very flexible, since we can move CE and PE routers around, without modifying the service configuration.

Resulting YANG Service Model:

```yang
container vpn {
  list l3vpn {
    tailf:info "Layer3 VPN";

    uses ncs:service-data;
    ncs:servicepoint l3vpn-servicepoint;

    key name;
    leaf name {
      tailf:info "Unique service id";
      type string;
    }

    leaf as-number {
      tailf:info "MPLS VPN AS number.";
      mandatory true;
      type uint32;
    }

    list endpoint {
      key id;
      leaf id {
        tailf:info "Endpoint identifier";
        type string;
      }
      leaf ce-device {
        mandatory true;
        type leafref {
          path "/ncs:devices/ncs:device/ncs:name";
        }
      }
      leaf ce-interface {
        mandatory true;
        type string;
      }
      leaf ip-network {
        tailf:info "private IP network";
        mandatory true;
        type inet:ip-prefix;
      }
      leaf bandwidth {
        tailf:info "Bandwidth in bps";
        mandatory true;
        type uint32;
      }
    }
  }
}
```

The snippet above contains the l3vpn service model. The structure of the model is very simple. Every VPN has a name, an as-number, and a list of all the endpoints in the VPN. Each endpoint has:

- A unique id
- A reference to a device (a CE router in our case)
- A pointer to the LAN local interface on the CE router. This is kept as a string since we want this to work in a multi-vendor environment.
- A LAN private IP network
- The bandwidth of the VPN connection
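The ip-network leaf is an inet:ip-prefix such as 10.7.7.0/24, while the IOS snippet above uses dotted netmasks (ip address 10.7.7.1 255.255.255.0, network 10.7.7.0). Some logic has to bridge the two notations before values reach a template. The plain-Java sketch below shows one way to do it. The Ipv4Prefix class is hypothetical. Deriving the LAN interface address as the first host address is an assumption that happens to match the snippet, not necessarily what the example does.

```java
// Hypothetical IPv4 prefix helper: converts "a.b.c.d/len" into the network
// address and dotted netmask used in IOS-style configuration.
class Ipv4Prefix {
    final int address; // 32-bit address stored in an int
    final int length;  // prefix length, 0..32

    private Ipv4Prefix(int address, int length) {
        this.address = address;
        this.length = length;
    }

    static Ipv4Prefix parse(String cidr) {
        String[] parts = cidr.split("/");
        if (parts.length != 2) {
            throw new IllegalArgumentException("Expected a.b.c.d/len: " + cidr);
        }
        String[] octets = parts[0].split("\\.");
        if (octets.length != 4) {
            throw new IllegalArgumentException("Expected four octets: " + cidr);
        }
        int addr = 0;
        for (String o : octets) {
            int b = Integer.parseInt(o);
            if (b < 0 || b > 255) {
                throw new IllegalArgumentException("Bad octet " + o + " in " + cidr);
            }
            addr = (addr << 8) | b;
        }
        int len = Integer.parseInt(parts[1]);
        if (len < 0 || len > 32) {
            throw new IllegalArgumentException("Bad prefix length in " + cidr);
        }
        return new Ipv4Prefix(addr, len);
    }

    // Shifting in 64-bit arithmetic keeps /0 and /32 correct
    // (an int shift by 32 would leave the value unchanged).
    int mask() {
        return (int) (0xFFFFFFFFL << (32 - length));
    }

    int network() {
        return address & mask();
    }

    // First host address; assumed here to be the CE LAN address (meaningful below /31).
    int firstHost() {
        return network() + 1;
    }

    static String dotted(int v) {
        return ((v >>> 24) & 0xFF) + "." + ((v >>> 16) & 0xFF) + "."
                + ((v >>> 8) & 0xFF) + "." + (v & 0xFF);
    }

    public static void main(String[] args) {
        Ipv4Prefix lan = parse("10.7.7.0/24");
        System.out.println("ip address " + dotted(lan.firstHost()) + " " + dotted(lan.mask()));
        System.out.println("network " + dotted(lan.network()));
    }
}
```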

To derive the CE-to-PE connections, we use a very simple topology model. Notice that this YANG snippet does not contain a servicepoint, which means that it is not a service model, just a YANG schema that lets us store information in CDB.

```yang
container topology {
  list connection {
    key name;
    leaf name {
      type string;
    }

    container endpoint-1 {
      tailf:cli-compact-syntax;
      uses connection-grouping;
    }

    container endpoint-2 {
      tailf:cli-compact-syntax;
      uses connection-grouping;
    }

    leaf link-vlan {
      type uint32;
    }
  }
}

grouping connection-grouping {
  leaf device {
    type leafref {
      path "/ncs:devices/ncs:device/ncs:name";
    }
  }

  leaf interface {
    type string;
  }

  leaf ip-address {
    type tailf:ipv4-address-and-prefix-length;
  }
}
```

The model contains a list of connections, where each connection identifies the device, interface, and IP address at each of its two endpoints, together with the VLAN used on the link.
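The helper methods used later in the Java code (getConnection, getConnectedEndpoint, and getMyEndpoint) are not shown in this section. The following is a minimal sketch of what such a lookup over this topology model could do. It assumes the connection list is mirrored into plain Java records (Java 16 or later) instead of being navigated with the Navu API, so the record names and method bodies are hypothetical.

```java
import java.util.List;
import java.util.Optional;

// Hypothetical in-memory mirror of /topology/connection; a real service
// would navigate CDB with the Navu API instead.
record Endpoint(String device, String iface, String ipAddress) {}

record Connection(String name, Endpoint endpoint1, Endpoint endpoint2, long linkVlan) {}

final class TopologyLookup {
    private TopologyLookup() {}

    // Find the first connection where either endpoint is the given device.
    static Optional<Connection> getConnection(List<Connection> topology, String device) {
        return topology.stream()
                .filter(c -> c.endpoint1().device().equals(device)
                        || c.endpoint2().device().equals(device))
                .findFirst();
    }

    // The endpoint on the given device's own side of the connection.
    static Endpoint getMyEndpoint(Connection conn, String device) {
        return conn.endpoint1().device().equals(device) ? conn.endpoint1() : conn.endpoint2();
    }

    // The endpoint on the far side of the connection, i.e. the PE for a CE.
    static Endpoint getConnectedEndpoint(Connection conn, String device) {
        return conn.endpoint1().device().equals(device) ? conn.endpoint2() : conn.endpoint1();
    }
}
```

Because the CE only appears as a device name in the topology, moving a CE to another PE is a topology change; the service instance itself is untouched.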

Since the mapping logic has to look up in the topology model which PE routers to configure, it is not possible to use a purely declarative, template-based mapping. Using Java and configuration templates together is the right approach.

The Java logic lets you set a list of parameters that can be consumed by the configuration templates. One huge benefit of this approach is that all the parameters set in the Java code are completely vendor agnostic. When writing the code, there is no need to know what kinds of devices or vendors exist in the network, so the code is an abstraction over vendor-specific configuration. Conversely, creating a configuration template requires no knowledge of the service logic in the Java code. The configuration templates can instead be created and maintained by subject matter experts: the network engineers.

With this approach, it makes sense to modularize the service mapping by creating configuration templates per feature, so that each template is an abstraction of one feature in the network. In this example, that means we create the following templates:

- CE router
- PE router

This makes services easier to create and maintain, and it also produces components that can be reused by different services. The split can be made even finer if needed, for example with separate templates for BGP or interface configuration.

Since the configuration templates are decoupled from the service logic, additional templates can also be created and added to a running NSO system. For example, you can add a CE router from a new vendor to the Layer 3 VPN service just by adding a new configuration template, which uses the set of parameters from the service logic, to the running NSO system, without changing anything in the other logical layers.

The Java part of the service mapping is very simple and follows these pseudo code steps:

```
READ topology
FOR EACH endpoint
    USING topology
        DERIVE connected-pe-router
        READ ce-pe-connection
    SET pe-parameters
    SET ce-parameters
    APPLY TEMPLATE l3vpn-ce
    APPLY TEMPLATE l3vpn-pe
```
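To make the division of labor in the pseudo code concrete, here is a self-contained sketch of the same loop using plain Java collections. It assumes a simple CE-to-PE map in place of the topology lookup and models each template as a function that consumes the vendor-agnostic variables. The class name, the variable names, and the "template" lambdas are hypothetical stand-ins, not the NSO Template API.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

final class L3vpnMappingSketch {
    private L3vpnMappingSketch() {}

    // Runs the pseudo code loop: for each CE endpoint, derive the PE,
    // set vendor-agnostic variables, then apply the CE and PE "templates".
    static List<Map<String, String>> map(List<String> ceDevices,
                                         Map<String, String> ceToPe,  // READ topology (simplified)
                                         Consumer<Map<String, String>> ceTemplate,
                                         Consumer<Map<String, String>> peTemplate) {
        List<Map<String, String>> applied = new ArrayList<>();
        for (String ce : ceDevices) {                          // FOR EACH endpoint
            String pe = ceToPe.get(ce);                        // DERIVE connected-pe-router
            if (pe == null) {
                throw new IllegalStateException("no PE connection for " + ce);
            }
            Map<String, String> vars = new LinkedHashMap<>();  // SET pe/ce parameters
            vars.put("CE", ce);
            vars.put("PE", pe);
            ceTemplate.accept(vars);                           // APPLY TEMPLATE l3vpn-ce
            peTemplate.accept(vars);                           // APPLY TEMPLATE l3vpn-pe
            applied.add(vars);
        }
        return applied;
    }
}
```

The loop never mentions a vendor; swapping in a template for another device type changes only the lambdas passed in, which mirrors the decoupling described above.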

This section goes through the relevant parts of the Java code outlined by the pseudo code above. The code starts by defining the configuration templates and reading the configured endpoints and the topology. The Navu API is used to navigate the data models.

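```java
// Templates for the PE and CE side of each VPN endpoint
Template peTemplate = new Template(context, "l3vpn-pe");
Template ceTemplate = new Template(context, "l3vpn-ce");

// Endpoints configured in this service instance, and the topology model
NavuList endpoints = service.list("endpoint");
NavuContainer topology = ncsRoot.getParent()
        .container("http://com/example/l3vpn")
        .container("topology");
```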

The next step iterates over the VPN endpoints configured in the service and finds the connected PE router using small helper methods that navigate the configured topology.

```java
for (NavuContainer endpoint : endpoints.elements()) {
    try {
        String ceName = endpoint.leaf("ce-device").valueAsString();

        // Get the PE connection for this endpoint router
        NavuContainer conn = getConnection(topology, ceName);
        NavuContainer peEndpoint = getConnectedEndpoint(conn, ceName);
        NavuContainer ceEndpoint = getMyEndpoint(conn, ceName);
```

The parameter dictionary is an instance of the TemplateVariables class and is populated with the appropriate parameters.

```java
TemplateVariables vpnVar = new TemplateVariables();
vpnVar.putQuoted("PE", peEndpoint.leaf("device").valueAsString());
vpnVar.putQuoted("CE", ceName);
// 'vlan' is defined outside this excerpt (presumably the connection's link-vlan leaf)
vpnVar.putQuoted("VLAN_ID", vlan.valueAsString());
vpnVar.putQuoted("LINK_PE_ADR",
        getIPAddress(peEndpoint.leaf("ip-address").valueAsString()));
vpnVar.putQuoted("LINK_CE_ADR",
        getIPAddress(ceEndpoint.leaf("ip-address").valueAsString()));
vpnVar.putQuoted("LINK_MASK",
        getNetMask(ceEndpoint.leaf("ip-address").valueAsString()));
vpnVar.putQuoted("LINK_PREFIX",
        getIPPrefix(ceEndpoint.leaf("ip-address").valueAsString()));
```
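The address helpers getIPAddress, getNetMask, and getIPPrefix are not shown in this section either. Since the topology stores ip-address as an IPv4 address with a prefix length (for example 192.168.1.1/30), a plausible plain-Java sketch follows. It assumes that LINK_PREFIX is meant to be the network address of the link, which the section does not state, and it only handles IPv4.

```java
final class AddressHelpers {
    private AddressHelpers() {}

    // "192.168.1.1/30" -> "192.168.1.1"
    static String getIPAddress(String addrAndLen) {
        return addrAndLen.substring(0, addrAndLen.indexOf('/'));
    }

    // "192.168.1.1/30" -> "255.255.255.252"
    static String getNetMask(String addrAndLen) {
        return toDotted(maskBits(prefixLength(addrAndLen)));
    }

    // "192.168.1.1/30" -> "192.168.1.0" (network address; an assumption)
    static String getIPPrefix(String addrAndLen) {
        int addr = toInt(getIPAddress(addrAndLen));
        return toDotted(addr & maskBits(prefixLength(addrAndLen)));
    }

    private static int prefixLength(String addrAndLen) {
        int len = Integer.parseInt(addrAndLen.substring(addrAndLen.indexOf('/') + 1));
        if (len < 0 || len > 32) {
            throw new IllegalArgumentException("bad prefix length: " + len);
        }
        return len;
    }

    private static int maskBits(int len) {
        // Java masks int shift distances to 5 bits, so -1 << 32 == -1; /0 needs a special case.
        return len == 0 ? 0 : -1 << (32 - len);
    }

    private static int toInt(String dotted) {
        String[] parts = dotted.split("\\.");
        if (parts.length != 4) {
            throw new IllegalArgumentException("not an IPv4 address: " + dotted);
        }
        int value = 0;
        for (String p : parts) {
            int octet = Integer.parseInt(p);
            if (octet < 0 || octet > 255) {
                throw new IllegalArgumentException("bad octet: " + p);
            }
            value = (value << 8) | octet;
        }
        return value;
    }

    private static String toDotted(int value) {
        return ((value >>> 24) & 0xff) + "." + ((value >>> 16) & 0xff) + "."
                + ((value >>> 8) & 0xff) + "." + (value & 0xff);
    }
}
```

Keeping these conversions in Java is consistent with the design above: the templates receive ready-made, vendor-neutral strings and never parse addresses themselves.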

Once all parameters have been set, the last step is to apply the templates for the PE and CE routers of this VPN endpoint.

```java
peTemplate.apply(service, vpnVar);
ceTemplate.apply(service, vpnVar);
```

The configuration templates are XML templates based on the structure of the device YANG models. If NSO is connected to a device that already has the appropriate configuration, there is a very easy way to create the configuration templates for the service mapping, using the following steps:

- Configure the device with the appropriate configuration.
- Add the device to NSO.
- Sync the configuration to NSO.
- Display the device configuration in XML format.
- Save the XML output to a configuration template file and replace the configured values with parameters.

The commands in NSO give the following output. To keep the example simple, only the BGP part of the configuration is used.

```
admin@ncs# devices device ce1 sync-from
admin@ncs# show running-config devices device ce1 config \
    ios:router bgp | display xml
<config xmlns="http://tail-f.com/ns/config/1.0">
  <devices xmlns="http://tail-f.com/ns/ncs">
    <device>
      <name>ce1</name>
      <config>
        <router xmlns="urn:ios">
          <bgp>
            <as-no>65101</as-no>
            <neighbor>
              <id>192.168.1.6</id>
              <remote-as>100</remote-as>
              <activate/>
            </neighbor>
            <network>
              <number>10.7.7.0</number>
            </network>
          </bgp>
        </router>
      </config>
    </device>
  </devices>
</config>
```

The final configuration template, with the configured values replaced by parameters in curly braces, is shown below. A parameter that starts with a $ sign is taken from the Java parameter dictionary; otherwise, it is a direct XPath reference to a value in the service instance.

```xml
<config-template xmlns="http://tail-f.com/ns/config/1.0">
  <devices xmlns="http://tail-f.com/ns/ncs">
    <device tags="nocreate">
      <name>{$CE}</name>
      <config>
        <router xmlns="urn:ios" tags="merge">
          <bgp>
            <as-no>{/as-number}</as-no>
            <neighbor>
              <id>{$LINK_PE_ADR}</id>
              <remote-as>100</remote-as>
              <activate/>
            </neighbor>
            <network>
              <number>{$LOCAL_CE_NET}</number>
            </network>
          </bgp>
        </router>
      </config>
    </device>
  </devices>
</config-template>
```
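To illustrate the two kinds of expressions in this template, here is a toy resolver that substitutes {$NAME} from a variable dictionary and {/leaf} from the service instance's top-level leaves. It is a deliberately simplified stand-in written for this illustration: NSO's template engine evaluates full XPath expressions, and nothing below is its API.

```java
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

final class ToyTemplate {
    // Matches {...} expressions such as {$CE} or {/as-number}.
    private static final Pattern EXPR = Pattern.compile("\\{([^}]*)\\}");

    private ToyTemplate() {}

    static String render(String template, Map<String, String> vars,
                         Map<String, String> serviceLeaves) {
        Matcher m = EXPR.matcher(template);
        StringBuilder out = new StringBuilder();
        while (m.find()) {
            String expr = m.group(1).trim();
            String value;
            if (expr.startsWith("$")) {
                value = vars.get(expr.substring(1));           // Java parameter dictionary
            } else if (expr.startsWith("/")) {
                value = serviceLeaves.get(expr.substring(1));  // leaf of the service instance
            } else {
                throw new IllegalArgumentException("unsupported expression: " + expr);
            }
            if (value == null) {
                throw new IllegalArgumentException("unresolved expression: " + expr);
            }
            m.appendReplacement(out, Matcher.quoteReplacement(value));
        }
        m.appendTail(out);
        return out.toString();
    }
}
```

Failing on an unresolved name is a design choice of this sketch; it makes a variable that the Java code never set easy to spot.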

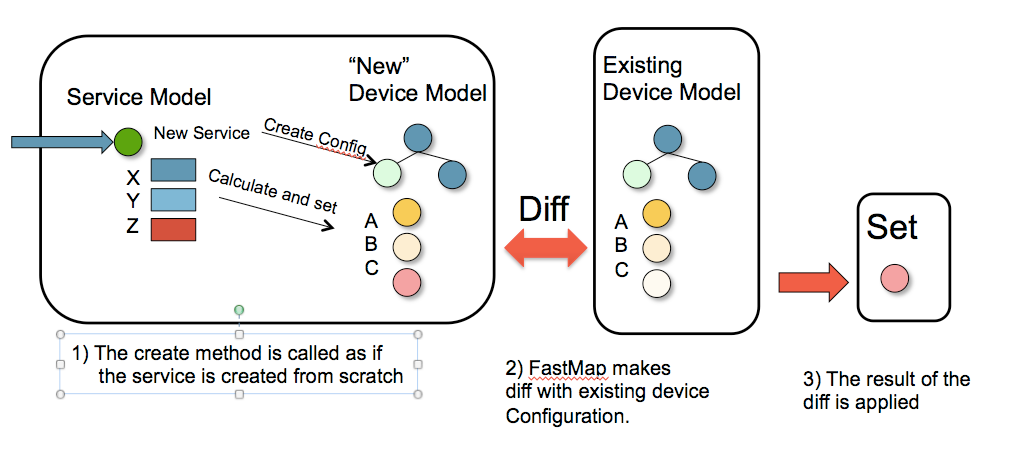

FASTMAP covers the complete service life-cycle: creating, changing and deleting the service. The solution requires a minimum amount of code for mapping from a service model to a device model.

FASTMAP is based on generating changes from an initial create. When the service instance is created, the reverse of the resulting device configuration changes is stored together with the service instance. If an NSO user later changes the service instance, NSO first applies (in a transaction) the reverse diff of the service, effectively undoing the previous results of the service creation code. It then runs the logic to create the service again and finally computes a diff against the current configuration. This diff is then sent to the devices.

Note

This means that it is very important that the service create code produces the same device changes for a given set of input parameters every time it is executed. See the section called "Persistent FASTMAP Properties" for techniques to achieve this.

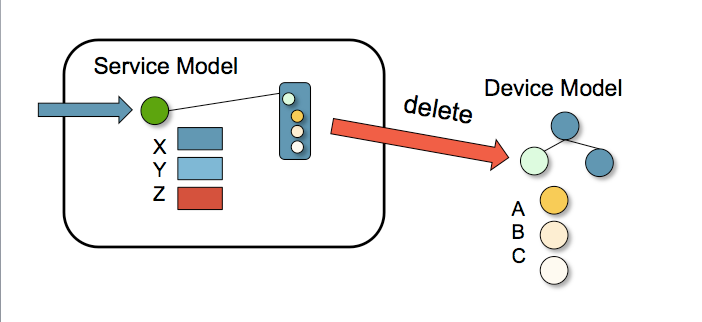

If the service instance is deleted, NSO applies the reverse diff of the service, effectively removing from the devices all configuration changes the service made.

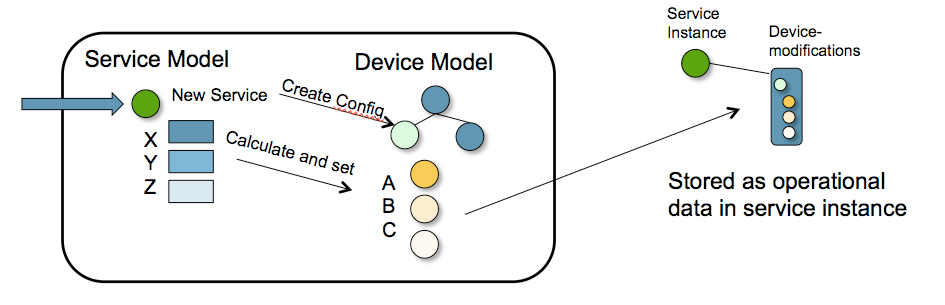

Assume we have a service model that defines a service with attributes X, Y, and Z. The mapping logic calculates that attributes A, B, and C shall be created on the devices. When the service is instantiated, the inverse of the corresponding device attributes A, B, and C is stored with the service instance in the NSO datastore, CDB. This inverse answers the question: what should be done to the network to bring it back to the state it was in before the service was instantiated?

Now consider what happens if one service attribute is changed, for example Z. NSO executes the change as if the service were created from scratch. The resulting device configuration is then compared with the actual configuration, and only the minimal diff is sent to the devices. Note that this is handled automatically; there is no code to handle "change Z".

When a user deletes a service instance, NSO can pick up the stored inverse device configuration and apply it, deleting what the service created.
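The reverse-diff mechanism can be sketched in plain Java. This is a toy model, not NSO's implementation: device configuration is a flat map from path to value, the mapping logic is a deterministic function from service parameters to device changes, and the stored reverse diff records each touched path's previous value (null meaning the path did not exist). Real FASTMAP also has to deal with configuration shared between several services, which this sketch ignores.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.TreeMap;
import java.util.function.Function;

// Toy FASTMAP: device configuration is a flat map from path to value.
final class FastMapSketch {
    private final Map<String, String> device;                               // current "network" state
    private final Function<Map<String, String>, Map<String, String>> create; // must be deterministic
    private Map<String, String> reverse = new HashMap<>();                  // stored with the service

    FastMapSketch(Map<String, String> initialConfig,
                  Function<Map<String, String>, Map<String, String>> create) {
        this.device = new TreeMap<>(initialConfig);
        this.create = create;
    }

    Map<String, String> deviceConfig() {
        return new TreeMap<>(device);
    }

    // Create or modify: undo the previous result, re-run create(), send only the diff.
    Map<String, String> commit(Map<String, String> params) {
        Map<String, String> target = new TreeMap<>(device);
        apply(target, reverse);                              // undo previous service result
        Map<String, String> changes = create.apply(params);  // mapping logic, from scratch
        Map<String, String> newReverse = reverseOf(target, changes);
        apply(target, changes);
        return send(target, newReverse);
    }

    // Delete: apply the stored reverse diff.
    Map<String, String> delete() {
        Map<String, String> target = new TreeMap<>(device);
        apply(target, reverse);
        return send(target, new HashMap<>());
    }

    // Compute the minimal diff to reach 'target', "send" it, and store the new reverse diff.
    private Map<String, String> send(Map<String, String> target, Map<String, String> newReverse) {
        Map<String, String> diff = new TreeMap<>();
        target.forEach((path, value) -> {
            if (!value.equals(device.get(path))) diff.put(path, value);
        });
        device.keySet().forEach(path -> {
            if (!target.containsKey(path)) diff.put(path, null);  // null = delete
        });
        apply(device, diff);
        reverse = newReverse;
        return diff;
    }

    // Apply a change set; a null value means "delete this path".
    private static void apply(Map<String, String> config, Map<String, String> changes) {
        changes.forEach((path, value) -> {
            if (value == null) config.remove(path); else config.put(path, value);
        });
    }

    // For each path the changes touch, remember its previous value (null if absent).
    private static Map<String, String> reverseOf(Map<String, String> config,
                                                 Map<String, String> changes) {
        Map<String, String> rev = new HashMap<>();
        changes.keySet().forEach(path -> rev.put(path, config.get(path)));
        return rev;
    }
}
```

For example, with a create function mapping X, Y, and Z to A, B, and C, changing only Z yields a one-entry diff for C, and delete() removes A, B, and C while leaving unrelated configuration untouched. There is no special-case code for either operation.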

A FASTMAP service is not allowed to perform explicit function calls that have side effects. The only action a service is allowed to take is to modify the configuration of the current transaction. For example, a service may not invoke an RPC to allocate a resource or start a virtual machine. All such actions must take place before the service is created, and their results must be provided as input parameters to the service. The reason for this restriction is that the FASTMAP code may be executed as part of a commit dry-run, or the commit may fail, in which case the side effects would have to be undone.

Reactive FASTMAP is a design pattern that provides a side-effect-free solution to invoking RPCs from a service. In the services discussed previously in this chapter, the service was modeled so that all required parameters were given to the service instance, and the mapping logic code could do its work immediately.

Sometimes this is not possible. Two examples where Reactive FASTMAP is the solution are:

- A resource, such as an IP address or VLAN ID, is allocated from an external system. It is not possible to do this allocation from within the normal FASTMAP create() code, since there is no way to deallocate the resource on commit abort or failure, or when the service is deleted. Furthermore, the create() code runs within the transaction lock, so the time spent in create() should be as short as possible.
- The service requires starting one or more Virtual Machines (Virtual Network Functions). The VMs do not exist yet, so the create() code needs to trigger something that starts the VMs and then, later, when the VMs are operational, configure them.

The basic idea is to let the create() code write data not only in the /ncs:devices tree but also in some auxiliary data structure. A CDB subscriber subscribes to that auxiliary data structure and performs the actual side effect, for example a resource allocation. The response is written to CDB as operational data, where the service can read it during subsequent invocations.

The pseudo code for a Reactive FASTMAP service that allocates an id from an id pool may look like this:

```
create(serv) {
    /* request resource allocation */
    ResourceAllocator.requestId(serv, idPool, allocId);

    /* check for allocation response */
    if (!ResourceAllocator.idReady(idPool, allocId))
        return;

    /* read allocation id */
    id = ResourceAllocator.idRead(idPool, allocId);

    /* use id in device config */
    configure(id);
}
```

The actual deployment of a Reactive FASTMAP service will involve multiple executions of the create() code.
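These multiple executions can be simulated in a small in-process model. In the sketch below, create() only writes an allocation request into a stand-in for CDB and returns early until a result exists. A separate subscriber object performs the allocation and writes the result back, after which the service is re-deployed and create() runs again. ToyCdb, ReactiveService, and IdAllocatorSubscriber are hypothetical names and not an NSO API. Real subscribers and re-deploys run asynchronously, which this sketch does not model.

```java
import java.util.LinkedHashMap;
import java.util.LinkedHashSet;
import java.util.Map;
import java.util.Set;

// Toy stand-in for CDB: requests written by create(), results written back
// as "operational data" by the subscriber, plus the resulting device config.
final class ToyCdb {
    final Set<String> requests = new LinkedHashSet<>();
    final Map<String, Integer> allocated = new LinkedHashMap<>();
    final Map<String, String> deviceConfig = new LinkedHashMap<>();
}

final class ReactiveService {
    private ReactiveService() {}

    // Side-effect free apart from writing into the transaction (the ToyCdb).
    // Returns true once the device configuration has been produced.
    static boolean create(ToyCdb cdb, String allocId) {
        cdb.requests.add(allocId);                  // request resource allocation
        Integer id = cdb.allocated.get(allocId);    // check for allocation response
        if (id == null) {
            return false;                           // not ready yet; wait for re-deploy
        }
        cdb.deviceConfig.put("vlan", Integer.toString(id));  // use id in device config
        return true;
    }
}

// Toy CDB subscriber: performs the actual side effect outside create().
final class IdAllocatorSubscriber {
    private int next;

    IdAllocatorSubscriber(int firstId) {
        this.next = firstId;
    }

    // Called after a commit; answers every request that has no result yet.
    // Returns true if it wrote new results, i.e. the service should be re-deployed.
    boolean onCommit(ToyCdb cdb) {
        boolean wrote = false;
        for (String req : cdb.requests) {
            if (!cdb.allocated.containsKey(req)) {
                cdb.allocated.put(req, next++);
                wrote = true;
            }
        }
        return wrote;
    }
}
```

Because the allocated id is stored and read back rather than recomputed, every later run of create() produces the same device configuration, which is the property FASTMAP relies on.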

-