CML 2.4 Release Notes

Cisco Modeling Labs (CML) is a network simulation platform. CML 2.4 is the latest feature release of CML and introduces support for deploying CML as a cluster.

New and Changed Information

This table shows any published changes to this CML 2.4 release notes document.

| Date | Changes |
|---|---|
| June 10, 2022 | Initial version for the CML 2.4.0 release. |
| October 4, 2022 | CML 2.4.1 release. Updated other changes, bug fixes, and known issues. |
| November 26, 2022 | Updated workarounds for running CML on macOS with VMware Fusion. |
| January 24, 2023 | Updated details about CML 2.4 API changes. Added note about migration from CML 2.2.x when using a CSSM On-Prem server for licensing. |

Installing or Migrating to CML 2.4

Upgrading to CML 2.4

If you already have a CML 2.3.x installation, you can upgrade it to CML 2.4.0. See the In-Place Upgrade page for upgrade instructions. In-place upgrades from CML version 2.2.3 or earlier are not supported.

Migrating from an Existing CML 2.2 Installation

It is not possible to upgrade a CML 2.2.x installation to CML 2.4. You must migrate to a new CML 2.4 installation. If you have an existing CML 2.2.x installation, the migration tool will help you migrate your data from your existing CML installation to the new CML 2.4 installation. If your existing CML installation is CML 2.0.x or 2.1.x, we recommend first following the CML 2.2.x documentation to perform an in-place upgrade to CML 2.2.3 before migrating to a new CML 2.4 installation. The following video demonstrates the migration process with CML 2.3, and you can use the same steps to migrate from CML 2.2.3 to CML 2.4.

- Deregister your existing CML 2.2.x instance.
  - If you choose to migrate an existing, licensed CML 2.2.x installation to a new installation of CML 2.4, you must deregister the old CML instance before decommissioning it. See Licensing - Deregistration for instructions on deregistering a CML instance.
  - Warning for CML instances that use the On-Prem licensing option: there is a known issue with CML 2.2.x that causes license deregistration to fail with some versions of the CSSM On-Prem software. If you are unable to deregister the CML 2.2.x instance from your CSSM On-Prem server, open a support case for CML.
- Install CML 2.4 (see below).
  - Since you are migrating from an existing CML 2.2.x installation, mounting the refplat ISO before running the CML 2.4 installation is optional.
  - If the reference platform ISO is mounted before starting the CML 2.4 installation, the setup script will copy the reference platform images from the ISO to the local disk.
  - You can skip the copying of reference platform images during installation because the migration tool will copy all of the reference platform images from your CML 2.2.x installation to the new CML 2.4 installation.
  - Only mount the new refplat ISO if you want to add the new versions of the reference platforms in addition to the existing versions from your CML 2.2.x installation.
- Follow the instructions in the migration tool's README.md to migrate data from your existing CML 2.2 installation to your new CML 2.4 installation.
- License your new CML 2.4 installation.

The migration tool can be found on your CML 2.4 installation at /usr/local/bin/virl2-migrate-data.sh. You can also download the latest version of the migration tool from https://github.com/CiscoDevNet/cml-community/tree/master/scripts/migration-tool.

The migration tool also supports offline migration. If you want to install CML 2.4 on the same server that's currently running CML 2.2.x, you can use the Offline Version Migration instructions to back up the data to a .tar file. That way, you can transfer the .tar file to a separate datastore temporarily while you reinstall with CML 2.4. As always, make sure that you deregister your old CML 2.2.x instance before decommissioning it and starting the CML 2.4 installation.

Installing CML 2.4

The CML Installation Guide provides detailed steps for installing your new CML 2.4 instance.

- In CML 2.4, all of the reference platform VM images must be copied to the local disk of the CML instance. Be sure to provision enough disk space for your new CML instance to accommodate the reference platforms.

- Downloading the refplat ISO file is optional if you are migrating from an existing CML 2.2.x instance. The migration tool will migrate all of your existing image definitions and VM images from your old CML 2.2.x instance.

- Be sure to check the System Requirements for updated system requirements.

- Important: After the reference platforms are copied to disk during the Initial Set-Up, wait a couple of minutes for the CML services to finish restarting before clicking OK to continue. If you click OK too soon, the initial set-up can fail. (See the Known Issues below.)

Demonstration of CML 2.4 Features

You can see a brief demo of the new CML 2.4 features in the following video:

Clustering

The primary new feature in CML 2.4 is the introduction of clustering (phase 1). Prior to the 2.4 release, CML 2.x scaled vertically. Vertical scaling means that to run large topologies or a larger number of small topologies, you had to deploy CML on a very large server. Clustering allows for horizontal scaling across multiple servers while still providing a single user interface to manage labs. To permit regular CML feature releases, we chose to split the CML clustering functionality into multiple phases. Future CML releases will add additional clustering functionality.

Note: Clustering is only available in the non-personal CML products, such as CML-Enterprise and CML-Education.

This first phase of clustering support adds the ability to join one or more computes to a CML controller, forming a CML cluster. Within such a cluster, you can start large topologies, which would not fit into a single all-in-one CML instance, and the CML controller will dynamically assign nodes to the compute members of the cluster. The cluster controller can place VMs for nodes from a single topology on different computes. During the initial setup of a CML instance, the administrator chooses whether it will be a cluster controller, a cluster compute, or a standalone (non-cluster) instance.

Clustering phase 1 includes the following high-level features:

- Dynamic registration of compute members to the cluster controller

- Sharing of reference platform images from the cluster controller to each compute member

- Automatic distribution of large labs across available compute members

- In-place upgrade from CML 2.3.x

Nomenclature

- node / VM: a virtual machine, which is part of a lab topology
- fabric: the software component connecting VM NICs with each other, also providing external connectivity
- controller: the CML instance that hosts the CML controller services, the CML UI, and the CML APIs
- compute or compute member: a member of a cluster where CML runs the VMs for running nodes
  - The admin can configure the controller so that it runs VMs just like the compute members or so that it does not run any VMs.
  - We generally recommend disallowing VMs from running on the controller, if possible.
- cluster: a set of CML instances with one controller and one or more compute members

Clustering Constraints

Before deploying a CML cluster, you should be aware of the current constraints. Since CML 2.4 is just the first phase of CML clustering, more clustering functionality will be added in future CML releases. Phase 1 clustering in CML 2.4 has the following constraints:

- Clustering is only available on non-personal CML products. Personal products, i.e., CML-Personal and CML-Personal Plus, cannot run a CML cluster.

- No influence on placement. Nodes are placed in no particular order on computes. CML starts the node VM on an arbitrary compute that can run it.

- No migration of nodes. Once a node has been allocated to a specific compute, it will stay on that compute every time you start the lab unless you wipe the node (or lab) first. The next time you start a node after wiping it, it will be assigned to a compute again. It may be allocated to the same compute as before.

- No re-balancing. For example, if all running nodes were allocated to one compute, and other computes of the cluster are idle, there is no automatic re-balancing of the nodes to the available computes. As above, stopping and wiping the lab will cause the nodes to be reallocated the next time you start the lab, and that reallocation may rebalance the nodes across the compute members.

- No external connectivity via computes. All external traffic goes through the controller.

- Software upgrade for a cluster. To upgrade a cluster, you must manually trigger the upgrade of the controller and all of the computes.

- No integrated cluster management. The controller and each of the computes run Cockpit, but there is no aggregated management functionality at this time.

- No compute management after adding a compute. Compute registration is automatic, but there is currently no way for the admin to either put a compute in maintenance mode or remove a compute from service. Some of this functionality will be added in phase 2 clustering.

- Compute state handling. When a node's VM has been allocated to a compute, and the compute is not available, the state of that VM cannot be determined and may be incorrect. Starting, stopping, or wiping a node that has already been allocated to a particular compute while that compute is offline is likely to cause undesirable side effects. Controller re-syncing of computes has only been partially implemented. The state of nodes that were running on the compute when it was disconnected will not recover automatically, and manual intervention may be required to bring affected labs into a consistent state.

- No ability to limit resource usage. Just like on a standalone CML instance, a single lab or a single user can use all of the resources of a CML cluster. The CML admin has no way to restrict or limit this resource usage.

- A CML cluster may be licensed for up to 300 nodes.

- Cluster size is currently limited to 8 computes.
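
The following Python sketch models the placement rules above from a user's point of view: a node lands on an arbitrary compute that can run it, stays on that compute across stop and start, and is only re-allocated after a wipe. It is a hypothetical illustration, not CML's placement code; the `Compute`, `Node`, and `Cluster` classes and the CPU-only capacity check are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Compute:
    name: str
    free_cpus: int  # hypothetical capacity measure used only in this sketch


@dataclass
class Node:
    label: str
    cpus: int
    compute: Optional[str] = None  # allocation sticks until the node is wiped
    running: bool = False


class Cluster:
    MAX_COMPUTES = 8  # phase 1 cluster size limit

    def __init__(self, computes: List[Compute]) -> None:
        if len(computes) > self.MAX_COMPUTES:
            raise ValueError("phase 1 clusters support at most 8 computes")
        self.computes: Dict[str, Compute] = {c.name: c for c in computes}

    def start(self, node: Node) -> str:
        if node.running:
            return node.compute
        if node.compute is None:
            # No influence on placement: take any compute that can run the node.
            fits = [c for c in self.computes.values() if c.free_cpus >= node.cpus]
            if not fits:
                raise RuntimeError(f"no compute can run {node.label}")
            node.compute = fits[0].name
        # No migration or re-balancing: an allocated node returns to the same
        # compute. (This sketch does not model that compute being full or offline.)
        self.computes[node.compute].free_cpus -= node.cpus
        node.running = True
        return node.compute

    def stop(self, node: Node) -> None:
        if node.running:
            self.computes[node.compute].free_cpus += node.cpus
            node.running = False

    def wipe(self, node: Node) -> None:
        # Wiping clears the allocation; the next start may pick any compute,
        # including the same one as before.
        self.stop(node)
        node.compute = None
```

In this model, stopping and restarting a node brings it back to the same compute, while `wipe()` clears the allocation so the next `start()` can choose again.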

Summary of Other Changes

The main focus of the CML 2.4 release is the clustering phase 1 functionality, but there are other updates and bug fixes in this release. This section summarizes the other changes since CML 2.3.x.

UI Changes

Tables in the CML UI

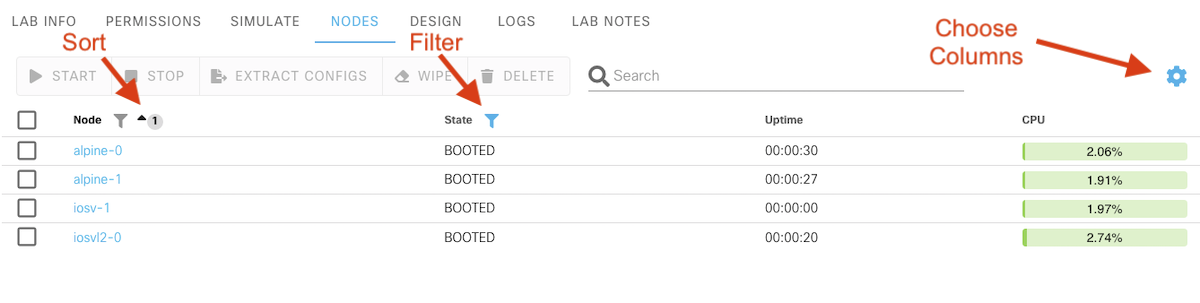

CML 2.4 adds new functionality to some of the tables shown in the UI.

You can now filter the data in the tables based on the values in a specific column. Columns that support filtering are decorated with the filter icon. Click the filter icon itself to set the filter for the column.

The search box at the top of a table performs a full text search across all columns that are both displayed and filterable. Only rows that match both the search and the active filters are shown in the table.
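
As a rough model of how the search box and the column filters combine, the Python sketch below keeps only rows that pass both. It is not the UI's code: the function name `visible_rows`, the case-insensitive substring search, and the exact-value column filters are assumptions for illustration.

```python
from typing import Dict, List, Mapping, Sequence, Set


def visible_rows(
    rows: Sequence[Mapping[str, str]],
    displayed: Sequence[str],
    filterable: Set[str],
    search: str,
    filters: Dict[str, str],
) -> List[Mapping[str, str]]:
    # The search box only looks at columns that are both displayed and filterable.
    searchable = [col for col in displayed if col in filterable]
    needle = search.casefold()
    result = []
    for row in rows:
        # Assumption: case-insensitive substring match for the full-text search.
        matches_search = not needle or any(
            needle in str(row.get(col, "")).casefold() for col in searchable
        )
        # Assumption: each column filter is an exact match on a single value.
        matches_filters = all(row.get(col) == value for col, value in filters.items())
        if matches_search and matches_filters:  # a row must satisfy both
            result.append(row)
    return result
```

Note that a hidden column is not searched even if it is filterable, so hiding a column can change the search results.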

To help you focus on the data that's important to you, some of the tables in the UI now support customization of the columns to display. These tables offer new columns that display additional properties of the elements shown in the table. Click the gear icon at the top-right of the table to choose the table columns to display.

Lab Preview on the Dashboard Page

On the Dashboard page, the Show Preview toggle is no longer available in the list layout of the labs. To achieve the same effect in CML 2.4, select or unselect the Preview column when choosing which columns to display.

Visual Link and Interface State on the Workbench Canvas

CML now provides a graphical representation of the interface and link state directly on the canvas in the Workbench.

- Interface state is represented by icons at the ends of the link:
  - Grey Square - Interface down
  - Green Circle - Interface up
- Link state is represented by the line style of the link:
  - Dashed Line - Link down
  - Solid Line - Link up

Simulate Disconnecting a Link

CML 2.4 adds new functionality to the Simulate pane for links. If you want to simulate disconnecting a link in a CML lab, it is generally not sufficient to stop the link. Because of how the reference platform VMs and the simulated network are implemented, the interfaces at the ends of a stopped link will generally still report that they are connected. To simulate disconnecting a link, you generally need to stop the link and stop the interfaces at each end of the link.

In CML 2.4, the link's Simulate pane now includes a Connect/Disconnect toggle button to make this action easier.

- Connect - starts the interfaces on both sides of the link to simulate a cable being connected
- Disconnect - stops the interfaces on both sides of the link to simulate a cable being disconnected

To make it easier to see the state of interfaces associated with a link, the Simulate pane for the link now shows the state of the interfaces. You can also toggle the interface state (Start/Stop the interface) directly in the link's Simulate pane.
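
If you script labs through the API instead of the UI, the same disconnect can be approximated with the link and interface state endpoints listed under API Changes. The Python sketch below builds the request sequence for a link whose two interface IDs you already know, and sends it with the standard library. The helper names, the base URL (an `/api/v0` prefix), bearer-token authentication, and the order of the calls are all assumptions; check the API documentation page on your CML server before relying on them. A server using the default self-signed certificate will also need a suitable SSL context for `urlopen`.

```python
import urllib.request
from typing import List, Tuple

ApiCall = Tuple[str, str]  # (HTTP method, path relative to the API base URL)


def disconnect_requests(lab_id: str, link_id: str, iface_a: str, iface_b: str) -> List[ApiCall]:
    # Stopping the link alone usually leaves both interfaces reporting "connected",
    # so also stop the interface at each end.
    return [
        ("PUT", f"/labs/{lab_id}/links/{link_id}/state/stop"),
        ("PUT", f"/labs/{lab_id}/interfaces/{iface_a}/state/stop"),
        ("PUT", f"/labs/{lab_id}/interfaces/{iface_b}/state/stop"),
    ]


def connect_requests(lab_id: str, link_id: str, iface_a: str, iface_b: str) -> List[ApiCall]:
    # Reverse operation: start the link and both of its interfaces.
    return [
        ("PUT", f"/labs/{lab_id}/links/{link_id}/state/start"),
        ("PUT", f"/labs/{lab_id}/interfaces/{iface_a}/state/start"),
        ("PUT", f"/labs/{lab_id}/interfaces/{iface_b}/state/start"),
    ]


def send(base_url: str, token: str, calls: List[ApiCall]) -> List[int]:
    # Assumptions: base_url looks like "https://cml.example.com/api/v0" and the
    # API accepts a bearer token in the Authorization header.
    statuses = []
    for method, path in calls:
        req = urllib.request.Request(
            base_url + path, method=method, headers={"Authorization": f"Bearer {token}"}
        )
        with urllib.request.urlopen(req) as resp:
            statuses.append(resp.status)  # these endpoints return 204 on success in 2.4
    return statuses
```

Keeping request construction separate from sending makes the sequence easy to inspect or log before anything is changed in a running lab.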

API Changes

CML 2.4 introduces several changes to the REST-based web service APIs. For details about a specific API, log into the CML UI and click Tools > API Documentation to view the CML API documentation page.

When a CML API endpoint completes successfully but returns no response object, it uses the return code HTTP/204 instead of HTTP/200 to indicate success. Not all API endpoints followed that pattern in previous releases. In this release, the following API endpoints will return an HTTP/204 code and no response body on success:

- DELETE /image_definitions/{def_id}

- DELETE /labs/{lab_id}

- DELETE /labs/{lab_id}/nodes/{node_id}

- DELETE /labs/{lab_id}/nodes/{node_id}/tags/{tag}

- DELETE /node_definitions/{def_id}

- GET /build_configurations

- PUT /labs/{lab_id}/start

- PUT /labs/{lab_id}/stop

- PUT /labs/{lab_id}/undo

- PUT /labs/{lab_id}/wipe

- PUT /labs/{lab_id}/interfaces/{interface_id}/state/start

- PUT /labs/{lab_id}/interfaces/{interface_id}/state/stop

- PUT /labs/{lab_id}/links/{link_id}/state/start

- PUT /labs/{lab_id}/links/{link_id}/state/stop

- PUT /labs/{lab_id}/nodes/{node_id}/wipe_disks

- PUT /labs/{lab_id}/nodes/{node_id}/state/start

- PUT /labs/{lab_id}/nodes/{node_id}/state/stop

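
Client scripts that decode a JSON body after every successful call can break on these endpoints, because an empty 204 body is not valid JSON. Below is a minimal Python sketch of a tolerant response handler, assuming you already have the status code and raw body bytes from your HTTP library; the helper name is hypothetical.

```python
import json
from typing import Any, Optional


def parse_cml_response(status: int, body: bytes) -> Optional[Any]:
    """Return the decoded JSON body, or None when the API sent no content."""
    if status == 204:
        # Success with no response object, as the endpoints above now return.
        return None
    if 200 <= status < 300:
        # Guard against empty bodies: json.loads(b"") raises JSONDecodeError.
        return json.loads(body) if body else None
    raise RuntimeError(f"CML API request failed with HTTP {status}")
```

Treating any 2xx status as success, rather than testing for exactly 200, keeps the same script working against both older and newer releases.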

APIs Removed in CML 2.4

The following formerly-deprecated APIs have been removed:

| Deprecated API Endpoint | Use this API Endpoint Instead |
|---|---|
| POST /update_lab_topology | PATCH /labs/{lab_id} |
| PUT /labs/{lab_id}/nodes/{node_id}/check_if_converged | GET /labs/{lab_id}/nodes/{node_id}/check_if_converged |
| PUT /labs/{lab_id}/links/{link_id}/check_if_converged | GET /labs/{lab_id}/links/{link_id}/check_if_converged |

New APIs in CML 2.4

This table summarizes the API endpoints that are new in CML 2.4.

| API Endpoint | Description |
|---|---|
| GET /nodes | Get information for all nodes visible to the user making the request. |
| GET /labs/{lab_id}/links | Get the list of links in the specified lab. |
| GET /labs/{lab_id}/nodes/{node_id}/check_if_converged | Wait for convergence on the specified node. |
| GET /labs/{lab_id}/links/{link_id}/check_if_converged | Wait for convergence on the specified link. |

APIs Changed

This table summarizes the APIs changed in CML 2.4.

| API Endpoint | Summary of Change |
|---|---|
| GET /system_stats | The structure of the response has changed to accommodate additional information that's included in the response for a CML cluster. |
| GET /labs/{lab_id}/nodes | We added a new, optional query parameter, data. Specify data=true to request that the response include the node data instead of just the node IDs. |
| GET /node_definition_schema | The schema includes the new nic_driver property. |
| GET /node_definitions | The response may include the new nic_driver property in the node definitions. |
| GET /node_definitions/{def_id} | The node definition may include the new nic_driver property. |
| GET /simplified_node_definitions | The response may include the new nic_driver property in the node definitions. |
| PUT /node_definitions/ | You may now set the nic_driver property via this API. |
| POST /node_definitions/ | Your new node definition may use the nic_driver property. |
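
The new data query parameter on GET /labs/{lab_id}/nodes changes the shape of the response, so scripts that accept both forms need to normalize it. The sketch below assumes that, with data=true, each entry is an object carrying an `id` field; the helper names are hypothetical, and the API documentation page on your server is the authority on the actual response schema.

```python
from typing import List, Union
from urllib.parse import urlencode


def lab_nodes_path(lab_id: str, with_data: bool = False) -> str:
    # Build the path for GET /labs/{lab_id}/nodes, optionally with data=true.
    path = f"/labs/{lab_id}/nodes"
    if with_data:
        path += "?" + urlencode({"data": "true"})
    return path


def node_ids(payload: List[Union[str, dict]]) -> List[str]:
    # Without data=true the response lists node IDs. With data=true each entry
    # is node data; this sketch assumes every such object has an "id" key.
    return [item if isinstance(item, str) else item["id"] for item in payload]
```

Requesting data=true saves one follow-up request per node when a script needs node details, at the cost of a larger response.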

Other Changes

| Summary | Release Notes |
|---|---|
| Make it easier to find the documentation for CML. | Since 2.4.1. Added a link to the official CML documentation in the CML UI under Tools > CML Documentation. |
| Consolidate virlutils | Since 2.4.1. Fixed a bug with a potentially missing owner in topologies with an older schema version that are imported in the client library. Proper deprecation functions are now used. |
| Allow configuration provision file with no content in UI | Since 2.4.1. The content attribute is optional for files in the configuration provisioning of node definitions. |
| Interfaces in the virl2-client library should only have one link attached | Since 2.4.1. In the Interface class: |
| Forbid LLD actions while virl2-lowlevel-driver is being synced | Since 2.4.1. While the low-level driver is being synced with the Linux system, user actions are forbidden to prevent inconsistencies. |
| The CSR 1000v node definition was not correctly updated during migration to 2.4.0 | Since 2.4.1. Fixed a broken patch for the CSR 1000v node definition. |
| Add ability to select a different serial port in the UI | Since 2.4.1. In the CML UI, on the Console tab for the selected node in the Workbench, you can now select a different console serial line. |
| PCL support for the compute ID | Since 2.4.1. Added a new "compute_id" property to the node class in the client library. |
| Clear old console logs | Since 2.4.1. Node console history is now cleared whenever a node is wiped or restarted; a stopped node's console history is still available. Upgrade will wipe console history for all previously started nodes. |
| Fabric and devicemux readiness for system health | Since 2.4.1. Improved the GET /system_stats API endpoint to provide information about the fabric and devicemux services. |
| Expose new health status items in UI | Since 2.4.1. Added new health checks to the system status bar. |
| Initial setup allows using "password" as the password but UI does not allow login | Since 2.4.1. Fixed a bug that allowed the installation to continue with a dictionary password, which left the installation with the default password. |
| Smaller breakout improvements | Since 2.4.1. Added breakout support for the ARM architecture. |
| Remove and disable numad as dependency | Since 2.4.1. Disabled the numad service for all installs and removed it as a dependency. This non-essential system service may crash occasionally, causing a lockup in the libvirt virtual machine management service. The service was already disabled in both OVA and ISO installs since CML 2.3.0; this change covers other installation methods. |
| Allow resyncing a disconnected compute member | Since 2.4.1. Previously, when a compute member was reconnected, the whole controller had to be restarted to resync with it. The improved workflow allows automatic reconnection. |
| Make diagnostic UI only refresh on button click instead of every 2s | Since 2.4.1. The diagnostics page in the UI no longer loads updates every few seconds. The data set is large, especially in a cluster deployment, and there is no need to download it periodically. Instead, a button to reload the data was added. |
| Fix plus/minus zooming on canvas when looking at topology areas away from 0/0 | Since 2.4.1. Changed the zoom in/out buttons in the toolbar. They now zoom in/out to the center of the canvas. |
| Exception refactoring | Since 2.4.1. Exception improvements in client library: |
| Properly remove node and interfaces | Since 2.4.1. Decreased the severity of lab update logs from warning to info. |
| Allow UUIDs in terminal server | Since 2.4.1. Implemented a switch (uuid) in the terminal server that toggles between human-readable values (labels/titles) and UUIDs. Another method (connect) was implemented to allow direct access to a console by its ID instead of connecting via the /lab/node/console_number combination. |
| Settings button should be disabled when pcap is running | Since 2.4.1. The settings button in the pcap menu is disabled when a pcap is running. |
| Link conditioning, en/decap frames on veth pair into ipeth ethertype | Since 2.4.1. Encapsulate frames on the veth pair to ensure that the kernel does not touch them (for example, by stripping 802.1Q layers). Avoid/drop OS-generated packets on the veth pair. Remove link conditioning when a link is stopped. |
| Workbench navigates to multilink panel when multiple nodes selected | Selecting multiple nodes and links at the same time shows the Nodes tab instead of the Multilink tab. |
| Make UUID for nodes copyable to clipboard | Added an option to copy the node/interface/link UUID in the Workbench. |
| Powering off OVA during virl2-initial-setup breaks it | Initial setup of a VMware deployment should start correctly a second time if it was interrupted the first time (e.g., by halting to adjust the VM's hardware configuration). |
| Handle unexpected node events | Nodes can be started, destroyed, or undefined from libvirt (via virsh) without issues. |
| Add compute hostname to diagnostic page | The Diagnostics page shows the compute's hostname instead of the compute ID. |
| Display compute information in expanded footer | The expanded footer shows more detailed stats about each compute. |
| Ensure link start works on running links | The API calls for stopping and starting links and interfaces that are already in the desired state now return success as a no-op instead of rejecting the request as a client error. |
| External-connector support for CML clusters | Ensure that external connector nodes are only started on the controller. |
| Generate a lab title on .virl import when no title is provided | If no title is provided when importing a VIRL 1.x topology, the title is generated as an empty string. |
| System health should identify controller via flag | NodeImage and .deb uploads check whether there is enough free space on the controller. The system health check in the footer checks LLD connectivity for all computes. |
| Aggregate system stats of computes at the BE | The footer now shows aggregated stats of all computes. |
| Define compute.id in launch queue | If sufficient resources are available on any compute, the node enters the launch queue, but it is not assigned to a compute when entering the queue. When the launch queue is processed, CML attempts to find the least loaded compute. If a valid compute is found, the node is assigned to that compute and launched. If no compute is found, the launch queue skips that node until the next cycle (no compute assigned). If no compute is found after one minute in the queue, a warning is displayed to the user, but no further action is taken. If no compute is found after one hour in the queue, an error is displayed, and the node is removed from the queue and reverts to a wiped state. |
| Add missing endpoint GET /labs/{lab_id}/links | New /labs/{lab_id}/links endpoint that returns the IDs of all links in the lab. Changed the meaning of the data query parameter for the following endpoints: |
| Update lab element endpoints to 204 No Content | |
| Node tab view in workbench should show compute member name for each node | Added a new "compute" column to the Nodes table on the Workbench page. |
| Handle unexpected node events | Transitions for some libvirt events have been adjusted. Now, when a node is stopped, started, or wiped outside CML (in the console or via virsh), all of those operations are handled in CML. |
| Improved ISO installation for bare metal | Move user-data commands to shell scripts. |
| Security updates | Improved web security against XSS attacks. |
| Reduce size of CML distribution | Remove unneeded software from the base Ubuntu distribution. |
| Remove finish upgrade feature | Removed the "Finish upgrade" button from Cockpit. |
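
The launch-queue behavior described in the "Define compute.id in launch queue" entry can be summarized as one queue-processing cycle. The Python sketch below is an illustration under stated assumptions, not CML's scheduler: a CPU count stands in for load, "least loaded" is taken to mean the compute with the most free CPUs, and the class, function, and state names are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

WARN_AFTER_S = 60.0       # one minute without a compute: warn the user
GIVE_UP_AFTER_S = 3600.0  # one hour without a compute: error, dequeue, wipe


@dataclass
class QueuedNode:
    label: str
    cpus: int           # hypothetical single resource used as "load"
    enqueued_at: float  # seconds, on the same clock as `now`
    compute: Optional[str] = None  # not assigned when entering the queue
    state: str = "queued"
    warned: bool = False


def process_queue(
    queue: List[QueuedNode],
    free_cpus: Dict[str, int],
    now: float,
    notify: Callable[[str, str], None],
) -> List[QueuedNode]:
    """Run one launch-queue cycle and return the nodes that stay queued."""
    still_queued = []
    for node in queue:
        fits = [name for name, free in free_cpus.items() if free >= node.cpus]
        if fits:
            # Assumption: "least loaded" means the compute with the most free CPUs.
            best = max(fits, key=lambda name: free_cpus[name])
            free_cpus[best] -= node.cpus
            node.compute, node.state = best, "launched"
            continue
        waited = now - node.enqueued_at
        if waited >= GIVE_UP_AFTER_S:
            node.state = "wiped"  # removed from the queue, reverted to wiped
            notify("error", f"{node.label}: no compute found after one hour")
            continue
        if waited >= WARN_AFTER_S and not node.warned:
            node.warned = True  # warn once; no further action is taken
            notify("warning", f"{node.label}: no compute found after one minute")
        still_queued.append(node)  # skipped until the next cycle
    return still_queued
```

Deferring the compute choice until the queue is processed means the decision uses the cluster's load at launch time rather than at the moment the user clicked Start.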

Bug Fixes

| Bug Description | Resolution |

|---|---|

| Port-channels do not come up in IOSvL2 nodes. | A bug in CML 2.3.x changed the way that CML generates mac addresses for interfaces in running labs. This change breaks some features in certain reference platforms, such as port-channels and SVIs on IOSvL2. The bug will be fixed in a future CML release. Workaround: For IOSvL2 port-channels, use channel mode on. For IOSvL2 SVIs, manually configure unique mac-addresses for each SVI. |

| Link hiding feature does not work in CML 2.3.1. | Workaround: There is no workaround at this time. If you need the link hiding feature, continue to use CML 2.3.0 and do not upgrade to CML 2.3.1. |

| The CML 2.3 VM consumes all of the allocated memory even with no labs running. When you start the CML 2.3 VM in VMware, it will quickly allocate all of the memory assigned to the VM. For example, if you configured the VM to use 16 GB of memory, the VM process will show all 16 GB of memory in use shortly after it starts. If you check the guest OS itself (in the CML UI's status bar or by running Note: this problem affects all VM deployments of the CML 2.3.0 OVA. Earlier versions of the release notes incorrectly stated that the problem did not impact CML 2.3.0 deployments on VMware ESXi. |

Upgrade to CML 2.3.1. This problem only affects new deployments that used the CML 2.3.0 OVA. If you installed CML with the 2.3.1 OVA, your CML VM should not be affected. If you upgraded to CML 2.3.1 from CML 2.3.0, be sure to power down the CML VM from VMware after the upgrade. |

| Your web browser shows an error that indicates an expired certificate when you try to use the CML UI or the Cockpit admin portal for your CML server even if you have previously added a local exception for the certificate. | The default SSL / TLS certificate included with CML 2.3.0 expires in June 2022. After that date, web browsers will report a warning or error because of the expired certificate. Upgrade to CML 2.3.1, which includes an updated certificate that is valid until 2024. Workaround: Install your own TLS / SSL certificate that's signed by a trusted Certificate Authority (CA). See the CML FAQ for instructions on installing your own certificate. |

| Licensing quota is not decreased on failed node start | A licensed allocated by a node is released when the node fails to start. |

| BM install of 2.4dev0-938 only works if server has 1 disk | Bare metal installations now look for the first valid disk device, skipping earlier disk devices which appear to be physically removed while still enumerated by the server hardware, e.g. unmapped CIMC vHDD. |

| Improper error thrown when an undo operation is performed n Node label | Fixed commit/cancel field when it showed an validation error message after resetting the value to the original/valid one |

| Core driver startup mishandles node def filenames with spaces | Fixed error in core driver startup where node definition file containing spaces was mishandled. |

| MAC address/IP data is not removed when a node is stopped | Layer 3 addresses which are detected through DHCP snooping on the bridge0 interface are now cleared for nodes as they are stopped. In general, only the last entry in the respective IP list for a given MAC address is current and valid; previous entries may be preserved in the list in case the assignment changed. |

| virl2-monitor service fails occasionally | Fixed virl2-monitor failing to start occasionally due to a race condition between tmux commands. |

| Do not log unknown username authentication | Login attempts are now logged in the controller service by the logged-in user ID, not name. For unknown users, a SHA256 sum of the entered username is logged. |

| Fix upgrade button in case of failure | Starting with upgrades from 2.4.0 to newer versions, a failed upgrade will be detected and reported on properly in the Cockpit UI. |

| Drop hardcoded cml2cml2 password | The factory-default password of the 'cml2' admin user is no longer 'cml2cml2'. The password is taken from the controller host's machine-id - a value generated on boot if not present yet. Upon factory reset, use the virl2-switch-admin.sh command in the Cockpit's Terminal to replace the admin user name and password. |

| Bridge setup hang on connecting bridge | CML services including the UI may fail to start if the primary interface is configured for DHCP and it cannot receive an address. This can prevent successful completion of the initial configuration. |

| Nginx end-slash enforcement loses port | Fixed missing port in URL after a redirect |

| Link data not unique on link start | Fixed occasional case of a link to external connector not starting properly, putting it into inconsistent state. |

| Ext-connector mac address changes upon virl2.target restart | External connector interfaces are no longer assigned a MAC address when the node is started, which was never used in the first place. |

| Handle ext-ext connections reasonably | Direct links between two external connectors will not be started unless they refer to different external bridges in their configuration. Users should be mindful of the implications of indirectly creating such connections, or whether such connection between different bridges is legitimate in their use case. |

| Height/width min/max limits do not work | Fixed broken validation for min/max lines/cols of the xterm |

| CML does not support '-' for DNS search domains | Allow dash (-) in DNS names in the initial setup. |

| A heartbeat exception corrupts labs | Resolve heartbeat exceptions when LLD is not available. |

| uuidd.service is failing | Do not install failing uuidd (uuid daemon). |

| Duplicate lab displayed in UI | Fixed a rare case where CML was displaying the same simulation twice due to async |

| Remove orphaned VMs feature is broken | API endpoint /lab/ |

| Controller crash: corrupted unsorted chunks | We haven't seen the issue since, thus closing. |

| TypeError when accessing node config | Fixed some console errors |

| Handle topologies with interface names that don't match the node def | Interface labels of imported topologies do not longer have to match labels in node definitions. |

| Imported node definition without cpu limit value should default to 100 | Fixed case when a node definition was invalid when imported without cpu_limit value. |

| Delete Selected in List feature should be disabled without doing Wipe Selected action, just as Tiles feature | Display a confirmation dialog with the a warning if certain selected labs can't be wiped/deleted based on the state. After the confirmation from the user, it attempts to delete/wipe whatever is selected and bubble the errors from the backend to the user. |

| Build Initial Bootstrap Configurations stripped the alphabet "z" from hostnames when generating hostnames from node labels. | Fixed in CML 2.4.1. |

| Resolve.sh must force IPv6 address | Fixed in CML 2.4.1. Fixed a bug where in cluster hosts failed to resolve the link-local ipv6 address of remote computes' cluster interfaces in nonstandard network environments |

| Forbid node's node definition updates | Fixed in CML 2.4.1. Fixed a bug that allowed to change a node's node definition. |

| Fix ISO installation errors when no disks are available | Fixed in CML 2.4.1. Improved error logging in ISO installation process when no suitable disk has been found. |

| No-op interface actions should not raise | Fixed in CML 2.4.1. Fixed a bug that raised an error when a user tried to stop an interface on a stopped node. |

| A random string can be set as node's image definition ID | Fixed in CML 2.4.1. Fixed a bug where updating a node's image definition was allowed even if it did not exist or match the node definition |

| Copy UEFI code when no var file is provided | Fixed in CML 2.4.1. |

| There is no pop-up window or info for "common password" change attempt | Fixed in CML 2.4.1. Fix a bug that prevented warnings related to dictionary passwords from being displayed in UI. |

| Upgrade to 2.4.0 fails if compute hostname set with domain | Fixed in CML 2.4.1. Fixed a failure when upgrading CML 2.3 instances that have a domain name set. Since 2.4.0, initial setup requires a plain hostname (without a domain) for system hostnames. Changing the domain name in Cockpit after the fact is now supported. |

| Cockpit console log tarball diagnostics filename contains colons | Fixed in CML 2.4.1. Re-added missing diagnostics logs to CML-log.tar.gz and renamed the file so that its name is compatible with Windows. |

| Imported/joined lab in client missing configurations | Fixed in CML 2.4.1. Imported and joined labs now contain node configurations in the client library. Deprecated the with_node_configuration argument of Lab.sync; use exclude_configurations instead. |

| Do not connect compute member to the controller if their product versions don't match | Fixed in CML 2.4.1. On cluster upgrade, connections to compute hosts will be terminated unless their CML release version matches the controller. Complete the upgrade process on all computes before using the system to run labs again. |

| Cluster upgrade fails if nodes are running on compute members | Fixed in CML 2.4.1. Added a check that prevents a cluster upgrade if any compute has running nodes. Make sure all labs are stopped before upgrading the controller; otherwise, compute upgrades will fail and require manual intervention. |

| Small fixes to initial configuration script | Fixed in CML 2.4.1. |

| Node definitions with a sim.vnc property cause problems in 2.4. | Fixed in CML 2.4.1. Improved logging around the initial load of node definitions. |

| Baremetal install fails on creating bootloader partition | Fixed in CML 2.4.1. Bare metal installs now issue an additional warning early in the installation when UEFI boot is not detected. Legacy BIOS bare metal installations are not supported and are very likely to fail. |

| Support long file names for dropfolder and images | Fixed in CML 2.4.1. Image definition and node definition IDs can now use the full 255-byte limit (previously 64 bytes). |

| remove configs from db when wiping node | Fixed in CML 2.4.1. Wiping a node now removes the read-only node configuration taken from its node definition, so that changes made to the node definition between node starts take effect. Previously, if a node definition was updated, the update wasn't propagated to nodes created from that node definition. |

| Disk usage in CML doesn't match the real usage | Fixed in CML 2.4.1. Fixed a bug that displayed incorrect available disk space in the system stats in the UI and in the launch sequencer. |

| Optimize interface.link property | Fixed in CML 2.4.1. Improved node interface and link handling in the controller, which had caused API slowdowns at scale. |

| Core driver not reconnected by controller | Fixed in CML 2.4.1. Fixed a bug that prevented the core driver from reconnecting to the controller when its established connection was terminated. |

| update ldap configuration breaks auth | Fixed in CML 2.4.1. Fixed a bug that prevented an LDAP configuration from saving when switching between LDAP servers. |

| Baremetal install fails on previous CML install | Fixed in CML 2.4.1. Fixed a bug that caused bare metal installation to fail if there was an existing installation of CML on the disk. |

| Link start succeeds when LLD not connected | Fixed in CML 2.4.1. Fixed a case where, under load, unresponsive lab operations marked a compute as disconnected and caused other operations on that compute to fail silently, leaving them in an inconsistent state. |

| Removing a node from a lab that has pending actions leads to failures | Fixed in CML 2.4.1. Fixed a bug that prevented nodes from being removed correctly. This caused issues especially when other nodes of the same lab were starting at the same time. |

| 500 Internal Server Error when getting lab events | Fixed in CML 2.4.1. Fixed a broken GET "/labs/ |

| get_link_by_nodes and get_link_by_interfaces are broken | Fixed in CML 2.4.1. Deprecated Lab.get_link_by_nodes and Lab.get_link_by_interfaces, which used IDs rather than the objects their names suggest. Added Node.get_links_to and Node.get_link_to, which replace get_link_by_nodes. Added Lab.get_interface_by_id. Deprecations in Interface: |

| Nodes tab throws error while lab is loading | Fixed in CML 2.4.1. Fixed a bug that caused the node table to be empty if a compute member cannot be resolved in the nodes pane. |

| UI doesn't parse message for missing LDAP user | Fixed in CML 2.4.1. Fixed an invalid error message displayed when logging in as an LDAP user while the server is configured with local authentication. |

| Console history doesn't work on compute members | Fixed in CML 2.4.1. Fixed a bug that was preventing console history collection from nodes defined on compute members. |

| Wipe lab failure leads to Bad request: 'null' does not match error | Fixed in CML 2.4.1. Fixed a very rare error message caused by an update-title request being sent with 'null' after leaving the dashboard page. |

| Multi link is not displayed even after a page refresh under Link section | Fixed in CML 2.4.1. Fixed a bug with invalid multi link representation in the link pane. |

| On non-VM controller kvm-ok not being ok is ok | Fixed in CML 2.4.1. On a cluster controller whose initial setup disables starting VM nodes, VMX extensions are not required. The health status indicator will draw a different (inverted) green checkmark regardless of VMX presence for such controllers. |

| Vuex statisticsStore getter.controller returns undefined | Fixed in CML 2.4.1. Fixed the validation that checks the upload file size to determine whether there is enough space on the controller. |

| Uptime is not reset after controller restart | Fixed in CML 2.4.1. Fixed the broken node uptime timer after a restart of CML services. |

| Improper notification thrown when switching between the nodes | Fixed in CML 2.4.1. Fixed a bug that displayed invalid node labels in lab notifications when switching between nodes while extracting node configuration. |

| api allows for the deletion of interfaces from node out of order | Fixed in CML 2.4.1. Fixed a bug that allowed interfaces to be deleted out of order. |

| Update import_sample_lab in PCL to use /sample/labs API endpoint | Fixed in CML 2.4.1. import_sample_lab now uses the sample/labs API endpoint, and get_sample_labs was added. NOTE: The behavior of import_sample_lab changed, so code that uses it will break. Because the function previously worked only on the host, this change should not break much. |
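The fix for upgrades with a domain name notes that, since 2.4.0, initial setup requires a plain hostname rather than one with a domain attached. As a rough pre-check before running initial setup, the following Python sketch tests whether a name is a single DNS label. It assumes the common RFC 1123 rules (letters, digits, and hyphens; 1 to 63 characters; no leading or trailing hyphen). The helper is_plain_hostname is hypothetical and is not part of CML, whose own validation may be stricter or looser.

```python
import re

# Hypothetical helper, not part of CML: one DNS label under RFC 1123 style rules.
_LABEL = re.compile(r"[A-Za-z0-9](?:[A-Za-z0-9-]{0,61}[A-Za-z0-9])?")


def is_plain_hostname(name: str) -> bool:
    """Return True if name is a plain hostname such as 'cml-controller'."""
    # A dot means a domain is attached, for example 'cml.example.com'.
    if "." in name:
        return False
    # fullmatch rejects leading/trailing hyphens and labels over 63 characters.
    return _LABEL.fullmatch(name) is not None


if __name__ == "__main__":
    for candidate in ["cml-controller", "cml.example.com", "-compute1"]:
        print(candidate, is_plain_hostname(candidate))
```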
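The long-file-name fix raises the limit for image and node definition IDs from 64 to 255 bytes. Because the limit is stated in bytes, an ID containing multi-byte characters reaches it sooner than its character count suggests. The following Python sketch checks an ID against both limits; it assumes the ID is measured in its UTF-8 encoding (the release note does not state the encoding), and the helper id_fits is hypothetical.

```python
# Limits taken from the release note; the helper itself is hypothetical.
MAX_ID_BYTES = 255      # limit for image and node definition IDs in CML 2.4.1
OLD_MAX_ID_BYTES = 64   # previous limit


def id_fits(definition_id: str, limit: int = MAX_ID_BYTES) -> bool:
    """Return True if the ID's UTF-8 encoding fits within the byte limit."""
    return len(definition_id.encode("utf-8")) <= limit


if __name__ == "__main__":
    long_id = "iosv-" + "x" * 100               # 105 ASCII characters = 105 bytes
    print(id_fits(long_id, OLD_MAX_ID_BYTES))   # False: over the old 64-byte limit
    print(id_fits(long_id))                     # True: within 255 bytes
    print(len("é".encode("utf-8")))             # 2: one character, two bytes
```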

Known Issues and Caveats for CML 2.4

| Bug Description | Notes |

|---|---|

| Cannot run simulations on a macOS system that uses Apple silicon (e.g., the Apple M1 chip). | CML and the VM Images for CML Labs are built to run on x86_64 processors. In particular, CML is not compatible with Apple silicon (e.g., the Apple M1 chip). Workaround: There are no plans to support ARM64 processors at this time. Deploy CML to a different system that meets the system requirements for running CML. |

| Even if you were able to run network simulations with CML 2.2.x on VMware Fusion and macOS Big Sur or above, the same labs no longer start after migrating to CML 2.3.0+. | A default setting in the CML VM changed between CML 2.2.3 and CML 2.3.0. Because of the new virtualization layer in macOS 11+, this setting causes errors when running the CML VM on VMware Fusion. Workaround: Follow the instructions to disable nested virtualization in the CML VM. |

| Bug in macOS Big Sur. After upgrading to Big Sur and VMware Fusion 12.x, simulations no longer start. | macOS Big Sur changed the way macOS handles virtualization; the resulting problems were resolved in later versions of macOS Big Sur and VMware Fusion. Workaround 1: If you are still experiencing problems, upgrade to the latest version of VMware Fusion 12.x and the latest bug fix release of your current macOS version. Workaround 2: If you are using CML 2.3+, follow the instructions to disable nested virtualization in the CML VM. |

| Installation fails if you click OK to continue installation after the reference platforms are copied but before the services finish restarting. | Workaround 1: After the reference platforms are copied, wait a couple of minutes before clicking the OK button to continue with the initial set-up. Workaround 2: If the installation failed because you clicked OK too quickly, reboot the CML instance and configure the system again. All refplats are already copied, so the initial set-up screen won't restart the services this time. Note that the previously configured admin user will already exist, so you will need to create a new admin account with a different username to complete the initial set-up. |

| Deleting or reinstalling a CML instance without first deregistering leaves the license marked as in use. Your new CML instance indicates that the license authorization status is out of compliance. | If you have deleted or otherwise destroyed a CML instance without deregistering it, then the Cisco Smart Licensing servers will still report that instance’s licenses are in use. Workaround 1: Before you destroy a CML instance, follow the Licensing - Deregistration instructions to deregister the instance. Properly deregistering an instance before destroying it will completely avoid this problem. Workaround 2: If you have already destroyed the CML instance, see the instructions for freeing the license in the CML FAQ. |

| When the refplat ISO is not mounted until after the initial set-up steps have started, CML will not load the node and image definitions after you complete the initial set-up. | Workaround: Log into Cockpit, and on the CML2 page, click the Restart Controller Services group to expand it, and click the Restart Services button. CML will load the node and image definitions when the services restart. |

| When the incorrect product license is selected, the SLR (Specific License Reservation) can still be completed. An error is eventually raised, but the SLR reservation completes with no entitlements. | Workaround: If you accidentally select the wrong product license, release the SLR reservation and correct the product license before trying to complete the SLR licensing again. |

| If the same reservation authorization code is used twice, the CML Smart agent service crashes. | Workaround: Do not use the same reservation authorization code more than once. |

| If you have read-only access to a shared lab, some read/write actions are still shown in the UI. Attempting to change any properties or to invoke these actions raises error notifications in the UI. | Workaround 1: Do not attempt to invoke the read/write actions when working with a lab for which you only have read-only permissions. Workaround 2: Ask the lab owner to share the lab with read/write permissions so that you can make those changes to the lab. |

| If you have read-only access to a shared lab, you cannot see the current link conditioning properties in the link's Simulate pane. | Workaround: Ask the lab owner to share the lab with read/write permissions if you need to access the link conditioning properties. |

| The algorithm for computing the available memory does not take into account the maximum memory that all running nodes may need; it checks only the currently allocated memory. CML permits you to start nodes that fit within the CML server's currently available memory. If the memory footprint of the already-running nodes later grows and exceeds the available memory on the CML server, those nodes will crash. | Workaround 1: Launch fewer nodes to stay within your CML server's resources. Workaround 2: Add memory to the CML server to permit launching additional nodes. This workaround only moves the point at which the CML server will eventually hit its limit, so you must remain aware of your CML server's resource limits or ensure that the server has sufficient memory for all labs that will run on it simultaneously. |

| On a CML server or VM with multiple NICs, the CML services are active and running and do not report an error, but the CML UI and APIs are not reachable. The CML services are listening on the wrong interface because, when the CML instance has multiple NICs, CML chooses the first interface in alphabetical order and configures it as the CML server's management interface. That may not be the correct interface. For example, an interface named enp10s0 would be selected before enp9s0. | Workaround 1: Change the network connections to the CML server so that the interface that CML selects is the one you want it to use as its management interface. Workaround 2: After the CML server boots up, configure the network interface manually in the Cockpit Terminal. Run nmcli con. That command shows which interface bridge0-p1 is actually connected to. In this example, suppose that bridge0-p1 is connected to ens10p0, and you want it to be connected to ens9p0. Remove the bridge0-p1 connection and then recreate it with a connection to the correct interface. Adjust the following commands based on your interface IDs: check con del bridge0-p1. In the end, you should see only 3 active connections (green, with a device set). The first two are bridge0 and virbr0; do not modify these. The third one is bridge0-p1, which should now list the correct interface as its device. |

| When starting a CSR 1000v node for the first time or after a Wipe operation, the interfaces are all in shutdown state. | Workaround 1: Add no shut to each interface config in each CSR 1000v node's Edit Config field in the UI. Workaround 2: Use the EEM applet on the CSR 1000v page. |

| New nodes cannot be started when CPU usage is over 95%, when RAM usage is over 80%, or when disk usage is over 95%. | Workaround 1: Allocate additional resources to the CML server. Workaround 2: If you're hitting the disk usage limit, use the Remove Orphaned VMs function in Cockpit. Orphaned VMs can consume a significant amount of disk space even though CML is no longer using or managing them. |

| VM images uploaded via the API can be used in an image definition only if they are transmitted in a multipart/form-data request; otherwise, the SELinux context of the uploaded file is not set correctly. | Workaround 1: Use the Python Client Library, which handles image uploads correctly. Workaround 2: Create a multipart/form-data request when calling this API. |

| The journalctl output shows errors like this: cockpit-tls gnutls_handshake failed: A TLS fatal alert has been received | This log message occurs only with Chrome browsers and has no functional impact on the use of Cockpit. No workaround is needed. |
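To illustrate the memory caveat, the following Python sketch contrasts an admission check based only on currently allocated memory (the behavior the known issue describes) with a stricter check that reserves each node's maximum possible footprint. The Node type, both functions, and the numbers are hypothetical illustrations, not CML's implementation.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    allocated_mb: int  # memory the node uses right now
    max_mb: int        # memory the node may grow to (for example, its configured RAM)


def fits_current(running: list[Node], new: Node, host_mb: int) -> bool:
    """Admit the node if currently allocated memory fits (the described behavior)."""
    used = sum(n.allocated_mb for n in running)
    return used + new.allocated_mb <= host_mb


def fits_worst_case(running: list[Node], new: Node, host_mb: int) -> bool:
    """Stricter alternative: reserve every node's maximum possible footprint."""
    reserved = sum(n.max_mb for n in running)
    return reserved + new.max_mb <= host_mb


if __name__ == "__main__":
    host_mb = 16_000
    running = [Node("r1", 2_000, 8_000), Node("r2", 2_000, 8_000)]
    new = Node("r3", 2_000, 8_000)
    print(fits_current(running, new, host_mb))     # True: 6000 MB allocated now
    print(fits_worst_case(running, new, host_mb))  # False: up to 24000 MB later
```

In this example, the third node is admitted because only 6000 MB are allocated at start time, yet the three nodes together could later need 24000 MB on a 16000 MB server, which is the situation in which running nodes crash.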
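The multi-NIC issue comes down to ordering: in plain alphabetical (lexicographic) order, enp10s0 sorts before enp9s0 because the character "1" sorts before "9". The following Python sketch shows that effect, with a natural-order sort for contrast. The function names are hypothetical, and the use of sorted() only models the described behavior; CML's actual selection code is not shown here, and CML does not use the natural-order variant.

```python
import re


def first_alphabetical(interfaces: list[str]) -> str:
    """Plain lexicographic order, modeling the selection described above."""
    return sorted(interfaces)[0]


def natural_key(name: str) -> list:
    """Compare digit runs as numbers so 'enp9s0' sorts before 'enp10s0'."""
    return [int(part) if part.isdigit() else part for part in re.split(r"(\d+)", name)]


def first_natural(interfaces: list[str]) -> str:
    """Natural order, shown only for contrast."""
    return sorted(interfaces, key=natural_key)[0]


if __name__ == "__main__":
    nics = ["enp9s0", "enp10s0"]
    print(first_alphabetical(nics))  # enp10s0
    print(first_natural(nics))       # enp9s0
```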
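The resource thresholds that block new nodes can be expressed as a simple rule, which may help when reading the system stats in Cockpit or the UI. The following Python sketch assumes that "over" means strictly greater than the threshold; how CML treats values exactly at a threshold, and how it samples usage, are not stated in the known issue. The function name is hypothetical.

```python
# Thresholds from the known-issue description, in percent of capacity.
CPU_LIMIT = 95.0
RAM_LIMIT = 80.0
DISK_LIMIT = 95.0


def blocking_resources(cpu_pct: float, ram_pct: float, disk_pct: float) -> list[str]:
    """Return the resources over their limit; an empty list means nodes may start."""
    over = []
    if cpu_pct > CPU_LIMIT:
        over.append("cpu")
    if ram_pct > RAM_LIMIT:
        over.append("ram")
    if disk_pct > DISK_LIMIT:
        over.append("disk")
    return over


if __name__ == "__main__":
    print(blocking_resources(50.0, 85.0, 40.0))  # ['ram']
    print(blocking_resources(50.0, 60.0, 40.0))  # []
```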
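For the image upload issue, Workaround 2 can be sketched with the Python standard library. The code below builds, but does not send, a POST request whose body is multipart/form-data carrying one file. The upload URL and the form field name "file" are placeholders (assumptions, not the documented CML endpoint); check the CML API documentation for the real values, and add authentication before sending. Reading the whole file into memory keeps the sketch short; for multi-gigabyte VM images, a streaming approach or the Python Client Library from Workaround 1 is more practical.

```python
import os
import tempfile
import urllib.request
import uuid
from pathlib import Path

# Hypothetical placeholder; replace with the real image upload endpoint.
PLACEHOLDER_URL = "https://cml.example.com/image-upload-placeholder"


def build_multipart_request(url: str, file_path: str, field: str = "file") -> urllib.request.Request:
    """Build (but do not send) a multipart/form-data POST carrying one file.

    The form field name is an assumption; check the API docs for the real one.
    """
    boundary = uuid.uuid4().hex
    path = Path(file_path)
    data = path.read_bytes()  # whole file in memory: fine for a sketch only
    head = (
        f"--{boundary}\r\n"
        f'Content-Disposition: form-data; name="{field}"; filename="{path.name}"\r\n'
        "Content-Type: application/octet-stream\r\n\r\n"
    ).encode()
    tail = f"\r\n--{boundary}--\r\n".encode()
    request = urllib.request.Request(url, data=head + data + tail, method="POST")
    request.add_header("Content-Type", f"multipart/form-data; boundary={boundary}")
    return request


if __name__ == "__main__":
    # Demo with a tiny temporary file; nothing is sent over the network.
    with tempfile.NamedTemporaryFile(suffix=".qcow2", delete=False) as tmp:
        tmp.write(b"not a real image")
    request = build_multipart_request(PLACEHOLDER_URL, tmp.name)
    print(request.get_method(), request.get_header("Content-type"))
    os.remove(tmp.name)
    # To send it: add authentication, then call urllib.request.urlopen(request).
```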