Test

Introduction

It is extremely important to have good tests, for two big reasons:

- They ensure the quality of the initial delivery.

- They make it easy to guard against regressions when the package is changed or updated. This holds true whether the change is in the package itself or in NSO, a NED, or another external component that the project depends on.

Divide the testing into stages, so that early tests give quick feedback on big errors and later tests on real hardware ensure complete functionality in a real-world environment. Manual tests have value for early developer testing and for troubleshooting, but the focus must be on test automation, which allows repeated, reliable execution of the test cases and helps ensure consistent quality.

For this reason it is important to plan for testing, both continuous testing during development and acceptance testing before going to production. Competence in both software testing and test automation is crucial in the Automation group.

If a project consists of multiple use-cases it is important to have both use-case specific tests and integration tests that run all use-cases together.

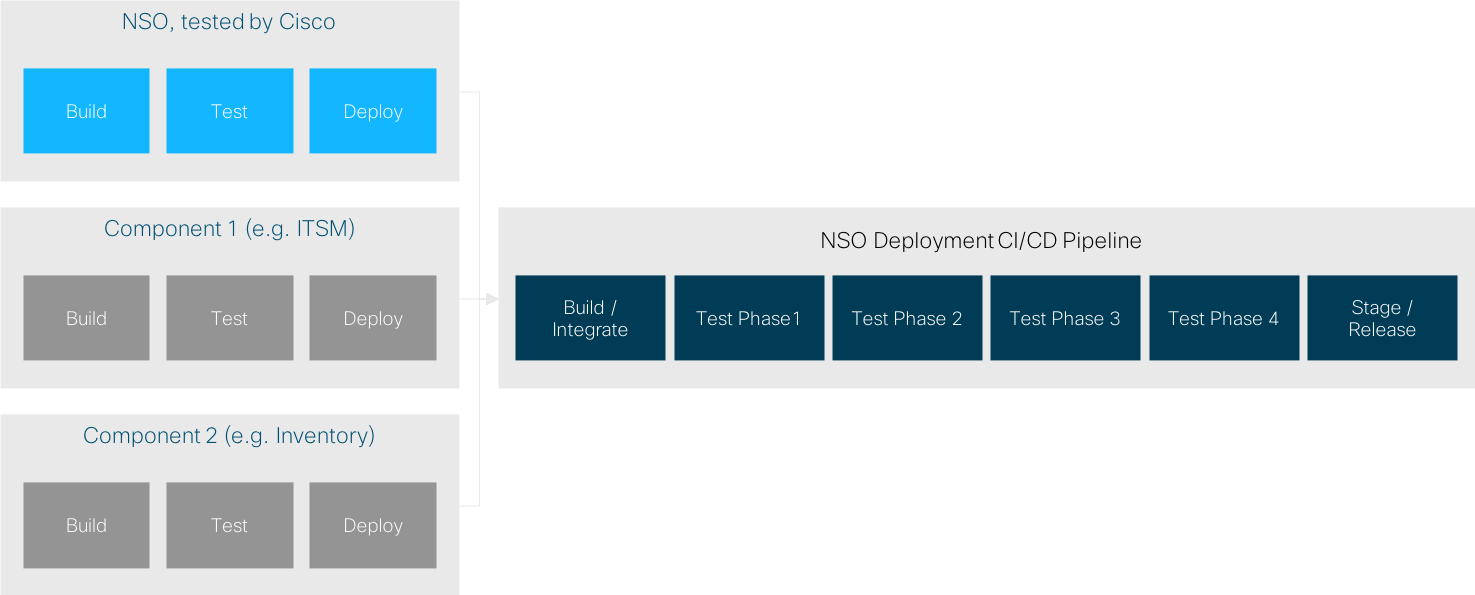

Test Stages

There are many possible ways to set up the test pipeline. The following stages are a good reference:

Build/Smoke tests

Ensures that the package builds and can be loaded into NSO, and that basic functionality is preserved.

Function pack unit tests

Ensures that the individual units of code in the Function Pack work as expected.

Static analysis

Static test suites such as SonarQube or similar give feedback on the structure and quality of the code itself.

Function pack functional tests

The goal of this phase is to test the functionality of the use-case together with NSO and NEDs. This phase quickly identifies whether changes are needed because of model changes, for example when a NED is upgraded. This stage can typically run in the netsim simulated environment.

System integration tests

The goal of this phase is to test the functionality of all the use-cases together with NSO, NEDs, and real devices to ensure compatibility with specific device versions. This phase requires a lab with real network devices (possibly running as VMs for some of the tests). This may require an automated reservation system, if the lab is shared, and an automated way to restore the lab to a known state.

Depending on the speed of the tests, some or all of them can be used as 'gating' tests, which block the merge of any change that does not pass them. Setting up a full-featured pipeline is a complex job, so it is important to evaluate your test strategy independently and to have resources available in the project to set up and maintain it.
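The staged, gating behaviour described above can be sketched in a few lines of Python. This is a minimal illustration, not a real CI configuration: the `Stage` class, the `run_pipeline` function, and the stage names are all hypothetical, and each stage is reduced to a callable that returns `True` on success.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Stage:
    name: str                 # e.g. "build/smoke" (hypothetical label)
    run: Callable[[], bool]   # returns True when the stage passes
    gating: bool = True       # a failed gating stage blocks the merge


def run_pipeline(stages: List[Stage]) -> Tuple[bool, List[str]]:
    """Run stages in order and stop at the first failing gating stage.

    Returns (merge_allowed, log), where log records each stage outcome.
    """
    log = []
    for stage in stages:
        passed = stage.run()
        log.append(f"{stage.name}: {'pass' if passed else 'FAIL'}")
        if not passed and stage.gating:
            # Skip the slower stages so the developer gets fast feedback.
            return False, log
    return True, log
```

Ordering the stages from fastest to slowest means a broken build is reported before any lab time is spent on it. A stage marked `gating=False` is still run and logged, but its failure does not block the merge.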

Types of tests

The automation can be tested in several different ways.

Unit-level Mocking

Parts which are foundational or which contain interesting algorithmic problems can be unit-tested using mocking, where the individual module is isolated and tested against a simulated (mocked) environment. This tends to be very fast and efficient when feasible, but tests only an individual component in isolation.
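As a minimal sketch of unit-level mocking, the example below isolates a small allocation routine from the system it would normally query. The function `next_free_vlan` and the `inventory` object with its `used_vlans()` method are hypothetical names invented for illustration; only `unittest.mock` from the Python standard library is real.

```python
from unittest import mock


def next_free_vlan(inventory, low=100, high=199):
    """Return the lowest VLAN id in [low, high] not reported as used.

    `inventory` is any object with a `used_vlans()` method. In production
    it would query the real system; in a unit test it is mocked.
    """
    used = set(inventory.used_vlans())
    for vlan in range(low, high + 1):
        if vlan not in used:
            return vlan
    raise ValueError("VLAN pool exhausted")


def test_skips_used_vlans():
    inventory = mock.Mock()
    inventory.used_vlans.return_value = [100, 101, 103]
    assert next_free_vlan(inventory) == 102
    inventory.used_vlans.assert_called_once_with()


def test_pool_exhausted():
    inventory = mock.Mock()
    inventory.used_vlans.return_value = [100, 101]
    try:
        next_free_vlan(inventory, low=100, high=101)
    except ValueError:
        return
    raise AssertionError("expected ValueError")
```

Because the mock replaces the only external dependency, these tests need neither NSO nor a device, which is what keeps this level fast.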

Device-level Mocking

Using NSO netsim, the entire device can be mocked, allowing the automation to run in the real NSO environment without needing the resources of a whole environment.

Virtual lab

Virtual routers, for instance in Cisco Modeling Labs, can be used to spin up an instance similar to the real environment.

Physical lab

It is very desirable to have a physical lab with real devices that more precisely corresponds to what exists in production. Using a real environment early ensures that all the quirks in the real system are found at an early stage.

Both the physical and virtual labs can also be used for development of new features and troubleshooting.

Test coverage

It is important to measure the test coverage, both in terms of how many lines of code are covered and in terms of how well the use-cases are tested. Generally speaking the first stages provide code-coverage and the later stages are responsible for testing entire use-cases. Make sure that test reports are automatically generated providing information on what the test results are and what the tests cover.
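Line coverage is normally collected by a coverage tool, but use-case coverage usually has to be tracked by the project itself. The sketch below shows one possible way to report use-cases that no test exercises. The function name, the shape of the mapping, and the use-case names in the usage note are assumptions made for illustration.

```python
def uncovered_use_cases(use_cases, test_map):
    """Return the use-cases that no test claims to cover, in input order.

    `use_cases` is the documented list of supported use-cases.
    `test_map` maps a test name to the list of use-cases it exercises.
    """
    covered = {uc for ucs in test_map.values() for uc in ucs}
    return [uc for uc in use_cases if uc not in covered]
```

For example, with the use-cases `["l3vpn", "acl", "qos"]` and tests that only exercise `l3vpn` and `acl`, the function returns `["qos"]`, which can be included in the automatically generated test report.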

To avoid regressions, it is important that each bug is given its own test-case so that future versions of the code are tested against it.
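A regression test of this kind might look like the following sketch. The helper `normalize_interface` and the bug it guards against (a lowercase short name being left unexpanded) are hypothetical, chosen only to show the pattern of pinning a fixed bug with a dedicated test-case.

```python
import unittest

# Hypothetical mapping of short interface prefixes to full names.
_PREFIXES = {"gi": "GigabitEthernet", "te": "TenGigabitEthernet"}


def normalize_interface(name):
    """Expand a short interface name, e.g. 'Gi0/1' -> 'GigabitEthernet0/1'."""
    stripped = name.strip()
    for short, full in _PREFIXES.items():
        rest = stripped[len(short):]
        # Only expand when the prefix is directly followed by a digit,
        # so that an already expanded name is left alone.
        if stripped.lower().startswith(short) and rest[:1].isdigit():
            return full + rest
    return stripped


class InterfaceNameRegressionTests(unittest.TestCase):
    def test_lowercase_short_name_is_expanded(self):
        # Hypothetical bug report: 'gi0/1' was left unexpanded.
        self.assertEqual(normalize_interface("gi0/1"), "GigabitEthernet0/1")

    def test_full_name_is_left_unchanged(self):
        self.assertEqual(
            normalize_interface("GigabitEthernet0/1"), "GigabitEthernet0/1"
        )
```

Keeping the bug description in a comment next to the test makes it clear why the case exists when someone is tempted to delete it later. The tests can be run with `python -m unittest`.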

How to implement tests

It is important to start test development early, because it is much harder to start testing once the use-case is deployed to production. For this reason it is important to designate a Test lead early and to make sure the Automation group has the competence needed to build a test pipeline and test infrastructure. Once that is in place, it is easy to extend coverage at a later time.

Designing for test

Because a modern network has many components from many different vendors, each undergoing rapid change at the same time as the services on top of them are themselves changing, network automation systems have to handle frequent changes. Testing and testability are extremely important for delivering a stable experience from the automation system. When designing the models and implementing the underlying code, it is important to make sure that all the interesting test-cases are possible, so that regressions can be detected when any one component changes.

In particular, it should be possible to decompose and open up the system so that smaller pieces can be tested independently. Being able to do sub-system tests simplifies the task of writing test-cases and enables higher coverage and faster re-testing after changes.

It is also important to document the use-cases that the system supports so that the model reflects not only all that is possible but also what is desired from the system.

Adding special self-test actions that check the actual network configuration is especially helpful, since they make it possible to verify that the automation is really putting the system in the intended state.
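The core of such a self-test is a comparison between the intended state and what the device reports. The sketch below shows only that comparison, under the assumption that both sides have already been reduced to flat dictionaries. The function name `self_test` and the setting names are hypothetical, and how the check is exposed as an action and how the observed values are fetched from the device are outside its scope.

```python
def self_test(intended, observed):
    """Compare intended settings with what the device reports.

    Both arguments are flat dicts of setting name -> value. Returns a list
    of human-readable findings; an empty list means the check passed.
    Settings present only on the device are ignored.
    """
    findings = []
    for key, want in sorted(intended.items()):
        if key not in observed:
            findings.append(f"{key}: missing on device (expected {want!r})")
        elif observed[key] != want:
            findings.append(f"{key}: expected {want!r}, found {observed[key]!r}")
    return findings
```

Returning a list of findings rather than a single pass/fail flag gives the operator something actionable when the check fails.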

Best Practices

- A member of the team should have responsibility for coordinating test activities

- Have an automated way to run the tests

- Tests should be divided up so that parts of the test suite can be run in isolation

- Automatically trigger a select set of tests on every commit / pull-request

- Run a full test suite with a regular cadence

- Enable developers to run tests on demand

- Have a dedicated test environment that replicates the live environment

- Test results and test coverage should be shared with the automation steering group
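Several of these practices (splitting the suite, running a select set on every commit, and running the full suite on a regular cadence) can be combined by grouping tests per trigger. The sketch below uses Python's standard `unittest` module. The test classes, the `SUITES` mapping, and the trigger names are hypothetical placeholders.

```python
import unittest


class SmokeTests(unittest.TestCase):
    def test_placeholder(self):
        self.assertTrue(True)   # stands in for a real build/load check


class FunctionalTests(unittest.TestCase):
    def test_placeholder(self):
        self.assertTrue(True)   # stands in for a netsim-based test


# Hypothetical grouping: which test classes run on which trigger.
SUITES = {
    "commit": [SmokeTests],
    "nightly": [SmokeTests, FunctionalTests],
}


def build_suite(trigger):
    """Return a unittest suite holding only the classes for this trigger."""
    loader = unittest.defaultTestLoader
    suite = unittest.TestSuite()
    for case in SUITES[trigger]:
        suite.addTests(loader.loadTestsFromTestCase(case))
    return suite
```

A CI job or a developer can then run one group on demand, for instance with `unittest.TextTestRunner().run(build_suite("commit"))`.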

Checklist

- We have chosen our testing tools

- A staging lab is available before we go into production

- Tests run consistently, without intermittent failures

- Testing and writing test-cases is part of our definition of done

- Basic tests are executed on every check-in

- Someone has responsibility for following up on tests

- We have documentation for what the tests cover

- There is an established procedure for reviewing test results before going to production