Overview to develop Data Logic

Alert: Cisco has made the end-of-life (EOL) announcement for the Cisco Edge Intelligence.

Data Logic policies allow you to add data intelligence to the edge by writing data processing scripts in JavaScript using Microsoft Visual Studio Code (VS Code). Once created, a Data Logic script can be configured with a cloud or MQTT destination and deployed to EI Agents for data processing.

To create a Data Logic script, install the EI extension in VS Code and then create a script entry. Click through the script options to add input sources (Asset Types), Output Models, Runtime Options, and other settings. You can then implement the data transformation logic in JavaScript and debug it from within VS Code. When the script is ready for mass deployment, save it to your organization's EI cloud system, from where it can be deployed to (and run on) an EI Agent.

Log in to Cisco EI from VS Code

To create Data Logic scripts, log in to your Cisco IoT account from the VS Code Edge Intelligence extension. This gives you access to IoT Asset Types and Runtime Options, and allows you to add your Data Logic to Cisco IoT. Once added to IoT, the Data Logic can be deployed to an EI Agent.

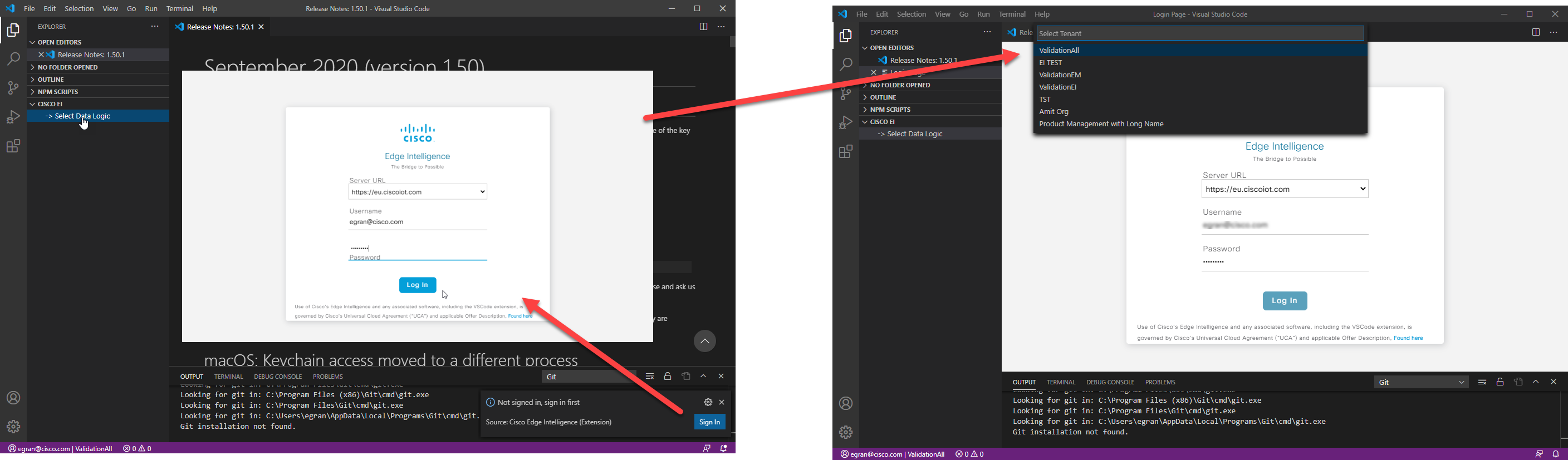

Click the Explorer icon in the top left.

Click Cisco EI > Data Logic. This option appears only if the Cisco Edge Intelligence extension was installed.

Click Sign-In (in the bottom right).

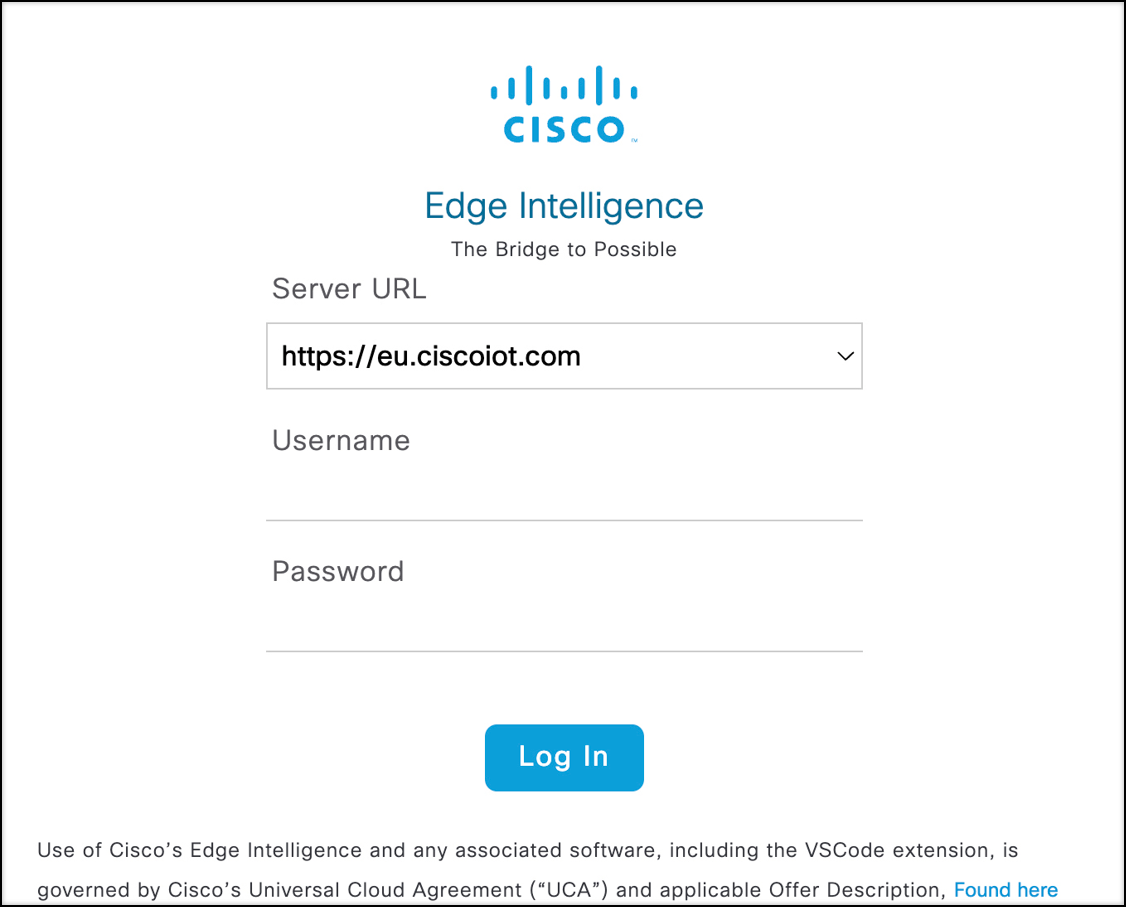

You can log in using either IoT OD credentials or an API Key and Secret.

- To log in using IoT OD credentials:

Note: From VS code v1.16.4, this login page will no longer be operational.

- Server URL: Select the URL for your Cisco IoT account.

- Username: Enter the email address for your Cisco IoT account.

- Password: Enter your password.

- Click Log In.

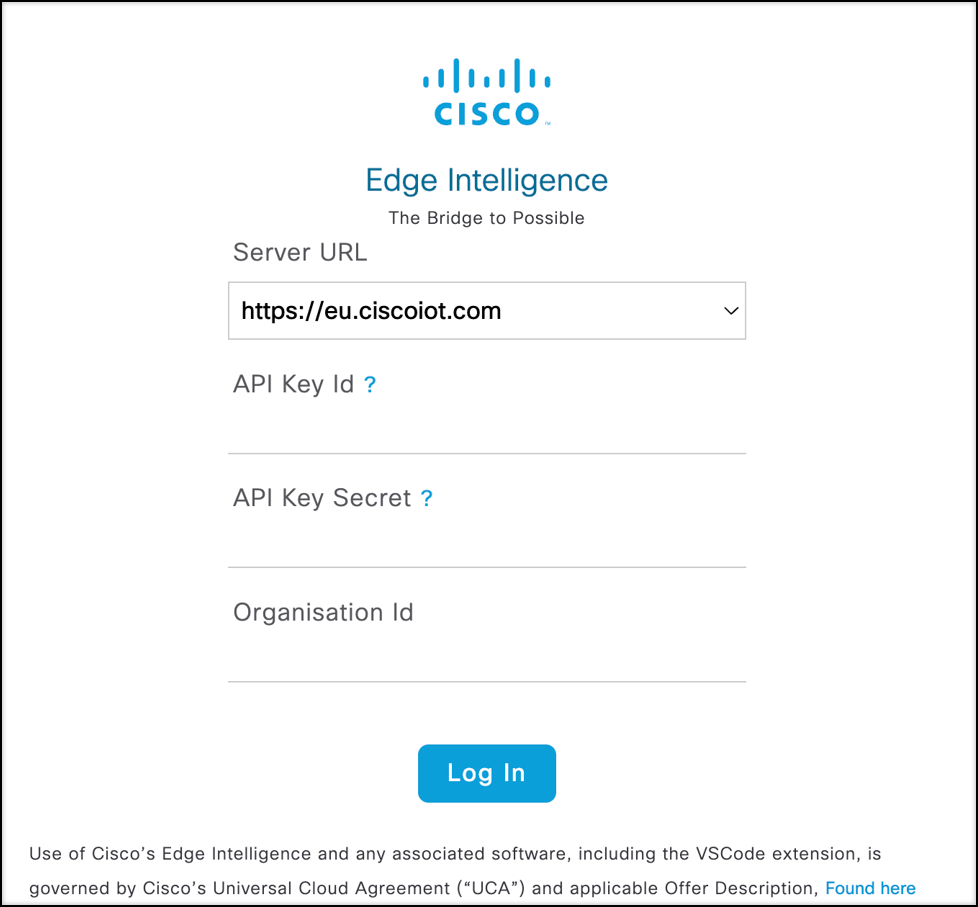

- To log in using an API Key Id, API Key Secret, and Organisation Id (see "Get API Key Id, API Key Secret, and Organisation Id" below for how to obtain them):

- Server URL: Select the URL for your Cisco IoT account.

- API Key Id: Enter your API Key Id.

- API Key Secret: Enter your API Key Secret.

- Organisation ID: Enter your Organisation ID.

- Click Log In.


Under Select Organization, select the Cisco IoT Organization where the Data Logic scripts will be deployed.

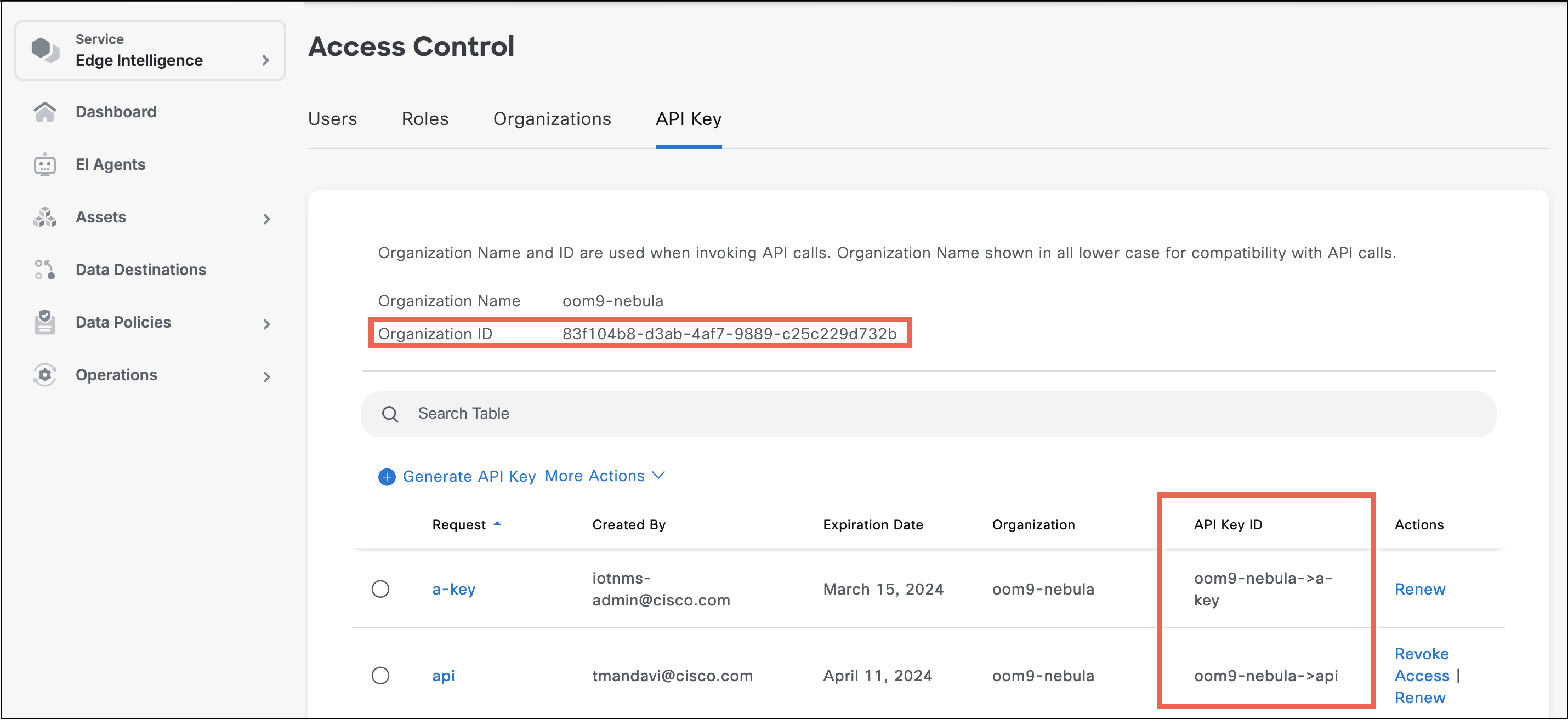

Get API Key Id, API Key Secret, and Organisation Id

Follow these steps:

- Log in to IoT Operations Dashboard and click the "people icon" on the far right of the header. Click Access Control.

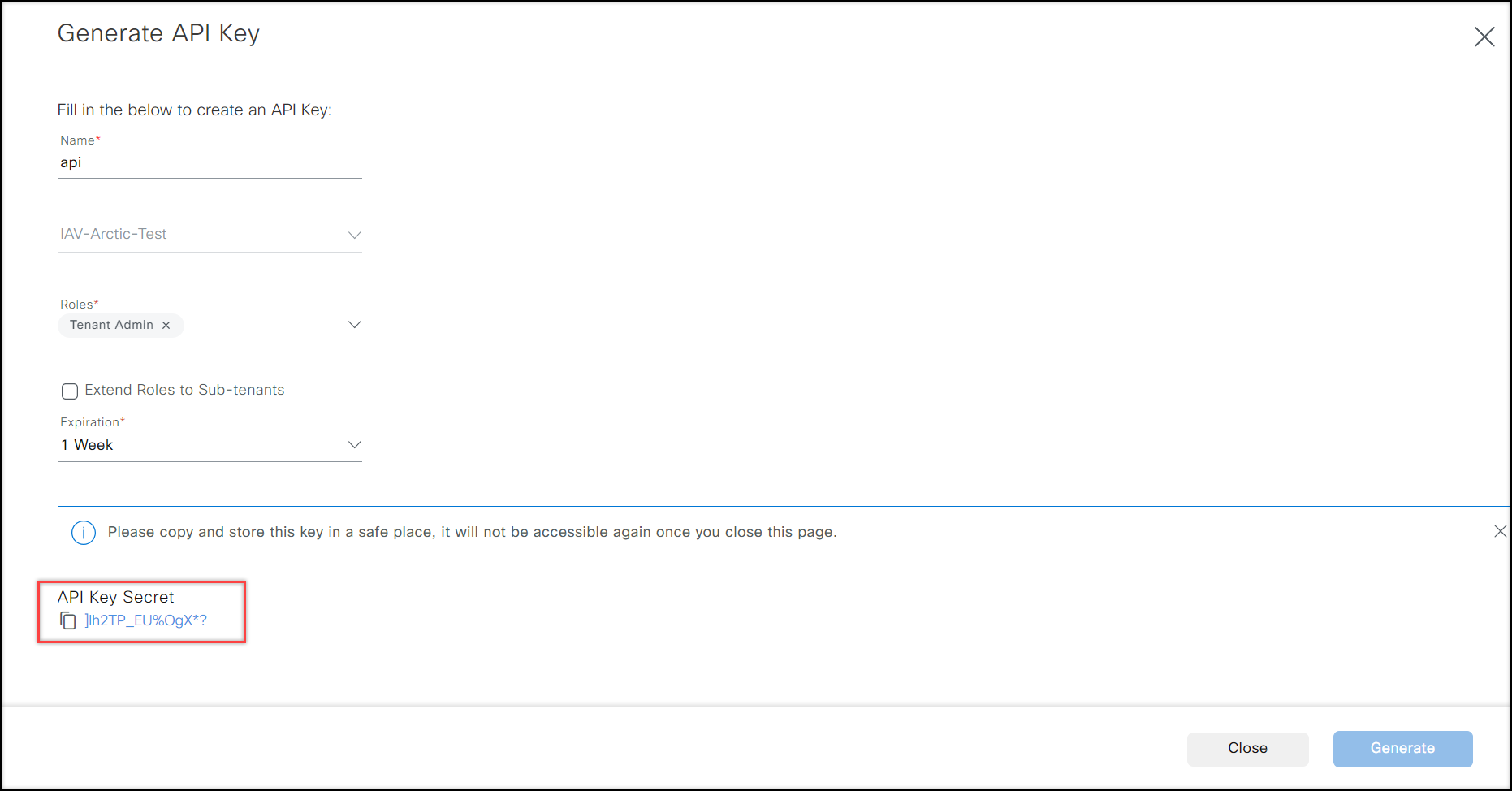

- Click API Key > Generate API Key.

- Enter the API key name, select Tenant Admin as the role, and set the expiration time or duration. Click Generate.

- Copy the API Key Secret.

Important: Save this key safely. Once you close this page, it cannot be retrieved.

- Close the page to return to the API Key page, where you'll find your API Key ID and Organization ID.

Create Data Logic

Summary

To create or modify your data logic scripts:

Open VS Code and log in to Cisco IoT.

Click Data Logic, then right-click Data Logic and select Create New to create a new script. You can also select and revise an existing script.

Enter a name and press Enter. (For example: "LogicExample")

Select the newly created script name ("LogicExample.js"). The script that transforms data is shown when you click the script name.

Click Input Asset Types and select one or more Asset Types that provide the source data.

- The EI Agent can have only one physical asset instance of each Asset type that the Data Logic expects.

- A default variable name is created for each selected Asset type for use in your script (for example nhrserial.read_value). Right-click the name and select Rename Variable Name to change it.

- Right-click the name and select View Data Model to see the Data Model of Asset Type.

Click Runtime Options and select when the script will be run.

- Invoke on New Data: The script is called when data changes. This option is always enabled.

- Invoke Periodically (ms): Enter a time, in ms. For example, if you enter 5, the Data Logic script will be called every 5 ms. Enable this option if needed.

- Cloud to Device Command: This function is called when you receive a command from the cloud.

(Optional) Click Output Logic Data Model and enter the format of the telemetry message the script will output.

- Modify the default Output Logic Data Model, or delete the entry and specify the output model in the script (in JSON).

- See "Data Model" below for more information.

Click the script name and create your logic to transform data.

- A default script is created when you create a Data Logic.

- To modify the Data Logic script see the Scripting Engine Tutorial and Additional Examples below.

Be sure to include any console print statements you need to test the data logic, using the built-in function "logger.info()". You can print anything, such as transformed variables or incoming data. In the following example, the log output is printed in the Output panel drop-down of VS Code:

output.value = input.value;

logger.info("debug statement");

Input Asset Types

The Input Asset Types provide the source data (and, for a local action, the target whose values are set) for your script. Currently, to deploy a Data Logic policy successfully, exactly one physical asset instance of each Asset Type that the Data Logic expects must be mapped to the EI Agent.

For example, a Data Logic script expects three Asset Types:

- TempSensor (MQTT)

- PressureSensor (MQTT)

- RotationsPerMinute (OPCUA)

When the Data Logic is deployed on an EI Agent, the EI Agent should have exactly one asset instance for each Asset Type mapped to that particular EI Agent. For example:

- TempSensor: example "TempSensor-asset-1"

- PressureSensor: example "PressureSensor-asset-1"

- RotationsPerMinute: example "RotationsPerMinute-asset-1"

If more than one asset of any Asset Type is mapped to the same EI Agent, the deployment will fail.
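The one-instance-per-type rule can be checked before you deploy. The following is a minimal sketch, not part of the EI API: the helper name findMappingProblems and the name/assetType fields of the asset list are hypothetical and only illustrate the rule described above.

```javascript
// Hypothetical pre-deployment check; EI does not expose this helper.
// requiredTypes: Asset Type names the Data Logic expects.
// mappedAssets: assets mapped to one EI Agent (illustrative shape).
function findMappingProblems(requiredTypes, mappedAssets) {
  var problems = [];
  for (var i = 0; i < requiredTypes.length; i++) {
    var type = requiredTypes[i];
    var count = 0;
    for (var j = 0; j < mappedAssets.length; j++) {
      if (mappedAssets[j].assetType === type) {
        count++;
      }
    }
    // Zero or more than one instance would make the deployment fail.
    if (count !== 1) {
      problems.push(type + ": expected exactly 1 asset, found " + count);
    }
  }
  return problems;
}

var required = ["TempSensor", "PressureSensor", "RotationsPerMinute"];
var mapped = [
  { name: "TempSensor-asset-1", assetType: "TempSensor" },
  { name: "PressureSensor-asset-1", assetType: "PressureSensor" },
  { name: "RotationsPerMinute-asset-1", assetType: "RotationsPerMinute" }
];

console.log(findMappingProblems(required, mapped));
```

An empty result means every expected Asset Type has exactly one mapped instance on that agent.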


Output Logic Data Model

There are 2 options for the Output Logic Data Model:

- The "Output Logic Data Model" is specified.

- A default model is created when you create an EI script. This can be modified as described below.

- Raw Mode: The JSON data that will be published to the destination is created within the Data Logic script.

- In order to use the Raw Mode, the default Data Model that was generated when the script was created must be deleted or an error will occur.

- The JSON structure is not verified by EI against the destination's requirements. So, it is up to the script writer to ensure that the final JSON structure meets the destination requirements.

"Output Logic Data Model" is specified (default)

An output JSON data model is automatically created when a Data Logic script is created. You can revise this default output model as needed.

The output model for a Data Logic script is defined as a JSON array: one entry in the JSON array corresponds to one key in the output JSON generated by the script.

To change the output model:

- Under your Data Logic entry, click Output Logic Data Model.

- Modify the model JSON file by adding or removing array entries using the following JSON keys.

- "key": The output data label. The supported characters for the "key" attribute are:

  - [_A-Za-z] for the first character

  - [_0-9A-Za-z] for the remaining characters

- "type": The output data type. The supported values are:

  - INT

  - BOOLEAN

  - DOUBLE

  - STRING

- "category": The category of the JSON key in the output message. The supported values are:

  - "TELEMETRY": The JSON key appears in the "metrics" section of the output message (with or without nesting according to what was specified in the concrete destination for telemetry values).

  - "ATTRIBUTE": The JSON key appears in the "attributes" section of the output message (with or without nesting according to what was specified in the concrete destination for telemetry values).

- Save the output model JSON.
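Before saving, you may want to check a model against the rules listed above. The sketch below is an illustration only: validateOutputModel is a hypothetical helper, not an EI function, and it checks just the key pattern, the supported types, and the supported categories.

```javascript
// Hypothetical validator for Output Logic Data Model entries; not part of EI.
var KEY_PATTERN = /^[_A-Za-z][_0-9A-Za-z]*$/;
var ALLOWED_TYPES = ["INT", "BOOLEAN", "DOUBLE", "STRING"];
var ALLOWED_CATEGORIES = ["TELEMETRY", "ATTRIBUTE"];

function validateOutputModel(model) {
  if (!Array.isArray(model)) {
    return ["model must be a JSON array"];
  }
  var errors = [];
  model.forEach(function (entry, index) {
    if (typeof entry.key !== "string" || !KEY_PATTERN.test(entry.key)) {
      errors.push("entry " + index + ": invalid key");
    }
    if (ALLOWED_TYPES.indexOf(entry.type) === -1) {
      errors.push("entry " + index + ": unsupported type");
    }
    if (ALLOWED_CATEGORIES.indexOf(entry.category) === -1) {
      errors.push("entry " + index + ": unsupported category");
    }
  });
  return errors;
}

var model = [
  { "key": "avg_analog_input_1", "type": "DOUBLE", "category": "TELEMETRY" },
  { "key": "1st_reading", "type": "FLOAT", "category": "TELEMETRY" }
];

// The second entry breaks two rules: the key starts with a digit
// and FLOAT is not a supported type.
console.log(validateOutputModel(model));
```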

To specify the concrete values that should be emitted by a Data Logic, do the following inside your script code:

- Specify the values for the individual keys that should be emitted on "output.publish()" by assigning them to the corresponding key of the output object. Example:

output.avg_analog_input_1 = 2.34;

output.count_digital_input_1 = 22;

- Use the publish function to emit a JSON with the configured structure (after you have updated all the properties of the output object). Example:

output.publish();

Examples:

Output Logic Data Model JSON:

[

{"key": "avg_analog_input_1", "type": "DOUBLE", "category": "TELEMETRY"},

{"key": "avg_analog_input_2", "type": "DOUBLE", "category": "TELEMETRY"},

{"key": "count_digital_input_1", "type": "INT", "category": "TELEMETRY"},

{"key": "count_updates", "type": "INT", "category": "TELEMETRY"},

{"key": "location", "type": "STRING", "category": "ATTRIBUTE"},

{"key": "manufacturer", "type": "STRING", "category": "ATTRIBUTE"}

]

Sample output (to the destination application) in JSON format, assuming that the destination is configured with "machine_data" as the JSON container for TELEMETRY properties and no JSON container (flat) for ATTRIBUTE properties:

{

"location": "Lab",

"manufacturer": "ACME Corp",

"machine_data": {

"avg_analog_input_1": 2.34,

"avg_analog_input_2": null,

"count_digital_input_1": 22,

"count_updates": 20

}

}
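To make the relationship between the model, the assigned values, and the destination container concrete, here is a small sketch that reproduces the sample message. It is an illustration only: buildMessage is a hypothetical function, and emitting null for keys that were never assigned is an assumption based on the sample output, not a documented guarantee.

```javascript
// Illustration only: mimics the layout of the sample message.
// telemetryContainer is the JSON container configured on the destination
// for TELEMETRY keys; ATTRIBUTE keys are assumed to be flat here.
function buildMessage(model, values, telemetryContainer) {
  var message = {};
  var telemetry = {};
  model.forEach(function (entry) {
    // Assumption: keys never assigned by the script are emitted as null.
    var value = values.hasOwnProperty(entry.key) ? values[entry.key] : null;
    if (entry.category === "ATTRIBUTE") {
      message[entry.key] = value;
    } else {
      telemetry[entry.key] = value;
    }
  });
  if (telemetryContainer) {
    message[telemetryContainer] = telemetry;
  } else {
    Object.keys(telemetry).forEach(function (key) {
      message[key] = telemetry[key];
    });
  }
  return message;
}

var sampleModel = [
  { "key": "avg_analog_input_1", "type": "DOUBLE", "category": "TELEMETRY" },
  { "key": "avg_analog_input_2", "type": "DOUBLE", "category": "TELEMETRY" },
  { "key": "count_digital_input_1", "type": "INT", "category": "TELEMETRY" },
  { "key": "count_updates", "type": "INT", "category": "TELEMETRY" },
  { "key": "location", "type": "STRING", "category": "ATTRIBUTE" },
  { "key": "manufacturer", "type": "STRING", "category": "ATTRIBUTE" }
];

var sampleValues = {
  avg_analog_input_1: 2.34,
  count_digital_input_1: 22,
  count_updates: 20,
  location: "Lab",
  manufacturer: "ACME Corp"
};

console.log(JSON.stringify(buildMessage(sampleModel, sampleValues, "machine_data"), null, 2));
```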

RAW mode: include the JSON structure in your script

RawMode allows you to construct the emitted data structure dynamically in your script. All defined fields are published to the destination. To use RawMode, you must delete the "Output Logic Data Model" and construct the JSON data structure in your Data Logic script.

Important: You are responsible for defining the JSON structure so that it meets the destination requirements. Neither the script nor the EI software validates Data Logic policies that are configured to emit RawMode JSON.

To include a RawMode dynamic output Data Model:

- Delete the default "Output Logic Data Model" entry (click Output Logic Data Model and delete all text).

Note: if the "Output Logic Data Model" is NOT blank, an error occurs after data deployment: "Error executing Javascript: Reference Error: identifier 'publish' undefined"

- In your Data Logic script, construct the data structure that contains the output data to be published to the destination. The structure must be in the right hierarchy/format.

- Use the global "publish" function.

a. The first parameter should always be "output".

b. The second parameter is the data that will be sent to the destination.

Example:

publish("output", my_result);

Examples:

Data Logic script example:

function on_update(){

var my_result = {

"first_value": 1.23, "second_value": 11833, "desc": "two values"

};

publish("output", my_result);

}

Sample output (to destination application) in JSON format:

{

"first_value": 1.23,

"second_value": 11833,

"desc": "two values"

}
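Because RawMode leaves the structure entirely to the script, the published object can vary from one invocation to the next. The following sketch shows one way this might look. The publish function defined here is only a stand-in so the example runs outside EI (the EI runtime provides the real one), and the readings object is a hypothetical substitute for your input asset variable.

```javascript
// Stand-in for the global publish() that the EI runtime provides.
var published = [];
function publish(target, data) {
  published.push({ target: target, data: data });
}

// Hypothetical input values; in a deployed script these would come
// from the variable of your Input Asset Type.
var readings = { temperature: 21.5, pressure: null, rpm: 1200 };

function on_update() {
  var my_result = { source: "LogicExample", values: {} };
  // Build the structure dynamically: skip fields without a value.
  for (var name in readings) {
    if (readings.hasOwnProperty(name) && readings[name] !== null) {
      my_result.values[name] = readings[name];
    }
  }
  // The first parameter is always "output".
  publish("output", my_result);
}

on_update();
console.log(JSON.stringify(published[0].data));
```

Nothing verifies that this shape suits the destination, so compare it against the destination's expected format before deploying.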

Debug your script (Remote Debugging)

For Data Logic scripts that are not yet deployed or used in production, a "Debug Script" option is available in VS Code. This option deploys your script to a specific EI Agent so that you can test its functionality (for example, the output that the script produces) before making it available for broader deployment ("Ready For Production"). Use this debug mode to verify the results and revise the script, if necessary.

- In the script entry, click Debug Script to expand it. (This option is only available for scripts that are not yet used in production.)

- Click EI Agent and select an agent where the script will be deployed.

- See Enable and Manage EI Agents for more information.

Note: Select an agent to which exactly one instance of each required Asset Type is already mapped.

- Click Data Destination and select the destination where data will be sent.

- See Add Data Destinations for more information.

Note: In addition to the production destinations, a "Testing" destination is available in the drop-down. If you select "Testing", the output of the script is not sent to any real-world destination. Use the Testing destination for debugging when you do not yet want to send the script output to a real destination.

- Click Deploy Data Logic to deploy the script for debugging on the selected EI Agent. Click Yes to confirm.

Note: If the selected agent does not have the right assets mapped to it, the debug deployment fails. Make sure that exactly one asset instance of each Input Asset Type is mapped to the selected EI Agent.

- Click Start Debug.

Note: Start Debug only appears in the GUI after you deployed the script for debugging.

- Select View > Debug Console to display the console along the bottom.

- Click the Output tab.

- The drop-down menu has two options related to Cisco Edge Intelligence.

a. Select Cisco EI from the drop-down menu to view output related to the EI VS Code extension.

b. Select LogicExample (or the appropriate Data Logic name) to view the console output of the newly created script.

- The script returns data from the EI Agent installed on a router, which appears locally in the Debug Console. This allows you to see the effect of your script.

- If your script does not behave as intended and you want to change it:

a. Click Stop Debug (this stops the debug data flow from the script to your IDE).

b. Undeploy the script (this pulls the script back from the Agent; if a real-world destination was configured, this also stops the data flow to the destination).

c. Change the script inside your IDE.

d. Save the script.

Note: If you do not undeploy your script from the Agent first, you cannot save your modified script.

e. Deploy the script again for debugging.

f. Click Start Debug again.

- If your script behaves as expected:

a. Click Stop Debug and then Undeploy Data Logic to stop debugging.

b. Click Ready For Production to publish your script to EI so it can be used in production data policies (via the Cloud Web UI).

Publish your Data Logic to the EI Cloud for deployment

When your script is complete, click Ready For Production to publish your script to the EI cloud (Cisco IoT Organization) where it can be deployed to an EI Agent and used to transform data (via the Edge Intelligence Web UI).

Scripting Engine Tutorial

This section explains how to implement scripts for Cisco Edge Intelligence. This feature is based on Duktape 2.5.0 and supports the same subset as Duktape does (conforms to ES5.0/ES5.1 with semantics updated from ES2015 or later where appropriate).


High Level Flow

A data policy can be configured to use data logic (JavaScript code) to transform data. Each data logic script will be called for every change to fields in the input data model, or periodically based on the "runtime" option selected. The user code may decide to publish a value so that it can be consumed by an egress link or not.

User code may create global objects that persist across invocations, allowing it to implement stateful transformations such as a sliding average, sliding median, histograms, and so on. Further, a script can be configured to allow customization and reuse in different data policies. For example, the same script could be used to aggregate the last 30 values in one policy and the last 100 in another, with the number of values to aggregate being the configuration parameter.

Required Implementation

Each script is required to provide an on_update() function that is called whenever there is new data value from the input data model. Similarly, an on_time_trigger() function will need to be defined when the script needs to be invoked at a certain interval. The functions are not expected to return anything. Desired outputs are to be set on the global output object, but they will only be published once output.publish() is called.
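As a rough sketch of how the two entry points can work together, the script below counts data changes in on_update() and publishes the count from on_time_trigger(). The output object defined here is only a stand-in so the example runs outside EI (the runtime provides the real one), and count_updates is assumed to be a key in your Output Logic Data Model.

```javascript
// Stand-in for the EI-provided global output object.
var output = {
  published: [],
  publish: function () {
    this.published.push({ count_updates: this.count_updates });
  }
};

// Global state persists between invocations of the script functions.
var updatesSinceLastTick = 0;

// Called whenever a new value arrives from the input data model.
function on_update() {
  updatesSinceLastTick++;
}

// Called at the interval configured under "Invoke Periodically (ms)".
function on_time_trigger() {
  output.count_updates = updatesSinceLastTick;
  output.publish(); // nothing is emitted until publish() is called
  updatesSinceLastTick = 0;
}

// Simulate three data changes followed by one timer tick.
on_update();
on_update();
on_update();
on_time_trigger();
console.log(output.published);
```

Neither function returns a value; the only effect visible to the destination is what has been set on output at the moment publish() runs.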

Built-in global objects

output

The global object output shall be used to set the desired output values. The names match the output model as specified in the VSCode plugin (see Output Data Model). For example, if the output model specifies a foo field, it has to be set with output.foo = ....

Setting the output fields alone is not sufficient. To give the script implementer strict control over if and when the changed output model is published, the script must call output.publish() to explicitly publish the transformed values.

global input identifier

The global input identifier represents the input model. Each field of the input model is a field on the global input identifier (for example, nhrserial for an asset type named nhr serial). The input data model is the same data model as defined in Adding Device Models. When on_update() is called, the global input identifier contains the last known values of all fields of the input model. They can be accessed directly, for example var x = nhrserial.foo.

trigger

The global object trigger identifies the field name of the input model that has been changed. Be aware that simultaneous changes to multiple values of the input model are not possible, so each change has to be handled on its own. The trigger object can be used to access the last changed value: var x = globalThis[trigger.device_name][trigger.field_name].

The global object trigger can also be used to access the timestamp of the last changed value: timestamp = trigger.timestamp.
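The sketch below shows how trigger might be used to react to one specific field. All three globals are stand-ins so the example runs outside EI: the nhrserial fields, the trigger values (including the timestamp format), and the output keys last_value and last_changed_at are hypothetical.

```javascript
// Stand-ins for globals that the EI runtime provides (hypothetical values).
globalThis.nhrserial = { read_value: 42.5, status: "ok" };
var trigger = {
  device_name: "nhrserial",
  field_name: "read_value",
  timestamp: 1700000000000
};
var output = {
  publish: function () {
    this.wasPublished = true;
  }
};

function on_update() {
  // Look up the value that caused this invocation.
  var changed = globalThis[trigger.device_name][trigger.field_name];

  // Each change arrives on its own, so filter on the field of interest.
  if (trigger.field_name === "read_value") {
    output.last_value = changed;
    output.last_changed_at = trigger.timestamp;
    output.publish();
  }
}

on_update();
console.log(output.last_value, output.last_changed_at);
```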

Built-in global functions

Optional initialization

It is optional to provide an init() function. If present, this function will be called exactly once when the policy is instantiated, i.e. when the policy is first created and after each restart.
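A typical use of init() is to set up the state for a stateful transformation. The following sketch computes a sliding average over the last three values. It is illustrative only: the output and nhrserial objects are stand-ins so the example runs outside EI, and avg_value is assumed to be a key in your Output Logic Data Model.

```javascript
// Stand-ins for globals that the EI runtime provides.
var output = {
  publish: function () {
    this.lastPublished = this.avg_value;
  }
};
var nhrserial = { read_value: 0 };

// Global state, set up once in init() and reused on every invocation.
var windowSize;
var samples;

function init() {
  windowSize = 3;
  samples = [];
}

function on_update() {
  samples.push(nhrserial.read_value);
  if (samples.length > windowSize) {
    samples.shift(); // drop the oldest value
  }
  var sum = 0;
  for (var i = 0; i < samples.length; i++) {
    sum += samples[i];
  }
  output.avg_value = sum / samples.length;
  output.publish();
}

// Simulate policy start followed by four incoming values.
init();
[10, 20, 30, 40].forEach(function (value) {
  nhrserial.read_value = value;
  on_update();
});
console.log(output.avg_value); // 30 (average of 20, 30, 40)
```

Because init() runs again after each restart, the window starts empty after a restart and fills up as new values arrive.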

BinaryUtils Methods

The global object BinaryUtils is the representation of binary utility methods to operate on binary data. The following methods are part of the BinaryUtils object and available inside the Scripting Engine:

Binary Mask String

- The mask must always start with the prefix 0b or 0B.

- The allowed characters in a Binary Mask String are 0 and 1.

- The character _ is also allowed as a separator to improve readability, and it can appear in the mask any number of times.

- The required length of the mask string depends on the API used. For example, the mask16AndShiftRight API expects a mask string that consists of 16 0 and 1 characters; otherwise the mask is invalid.

- The 1 characters must be consecutive; otherwise the mask is considered invalid.

- The mask string cannot consist of only 0 characters.

A few examples of valid and invalid binary mask strings:

- 0b_0000_1111_1111_0000 => valid mask for 16-bit APIs

- 0B_0000_0011_1111_1100_0000_0000_0000_0000 => valid mask for 32-bit APIs

- 0b00_11_11_11_ => valid mask for 8-bit APIs

- 0b_0010_0111_1110_0000 => invalid mask for 16-bit APIs because the 1 bits are not consecutive

- 0x00_1111_1111_0 => invalid mask for 16-bit APIs because of invalid length

- 0b00_11_11_1F_ => invalid mask for 8-bit APIs because of an invalid character in the mask

Hex Mask String

- The mask must always start with the prefix 0x or 0X.

- The allowed characters in a Hex Mask String are [0-9, a-f, A-F].

- The character _ is also allowed as a separator to improve readability, and it can appear in the mask any number of times.

- The required length of the mask string depends on the API used. For example, the mask32AndShiftRight API expects a mask string that consists of 8 characters in the range [0-9, a-f, A-F]; otherwise the mask is invalid.

- The mask characters must produce consecutive 1 bits; otherwise the mask is invalid.

- The mask string cannot consist of only 0 characters.

A few examples of valid and invalid hex mask strings:

- 0x_00_FF_FF_00 => valid hex string mask for 32-bit APIs

- 0x_3F_FC => valid hex string mask for 16-bit APIs

- 0x_F_8_ => valid hex string mask for 8-bit APIs

- 0x_00_F0_FF_00 => invalid hex string mask for 32-bit APIs because the 1 bits are not consecutive

- 0x_00_FF_FF => invalid hex string mask for 16-bit APIs because of invalid length

- 0xFG => invalid hex string mask for 8-bit APIs because of an invalid character in the mask
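The rules for binary and hex mask strings can be expressed as a short check. The sketch below is a hypothetical helper (isValidMask is not part of BinaryUtils) that applies the rules as described in this section; the real implementation may differ in details such as error reporting.

```javascript
// Hypothetical helper that applies the mask string rules; not the EI code.
// mask: the mask string, bits: 8, 16, or 32 depending on the API used.
function isValidMask(mask, bits) {
  var prefix = mask.slice(0, 2).toLowerCase();
  var digits = mask.slice(2).replace(/_/g, ""); // separators are ignored
  var binary;

  if (prefix === "0b") {
    if (!/^[01]+$/.test(digits)) {
      return false; // invalid character
    }
    binary = digits;
  } else if (prefix === "0x") {
    if (!/^[0-9a-fA-F]+$/.test(digits)) {
      return false; // invalid character
    }
    binary = "";
    for (var i = 0; i < digits.length; i++) {
      // Expand each hex digit to four bits.
      binary += ("0000" + parseInt(digits.charAt(i), 16).toString(2)).slice(-4);
    }
  } else {
    return false; // missing prefix
  }

  if (binary.length !== bits) {
    return false; // invalid length
  }

  // Strip zeros on both sides; what remains must be one run of 1s.
  // An all-zero mask leaves an empty string and is rejected.
  var core = binary.replace(/^0+/, "").replace(/0+$/, "");
  return /^1+$/.test(core);
}

console.log(isValidMask("0b_0000_1111_1111_0000", 16)); // true
console.log(isValidMask("0x_00_F0_FF_00", 32));         // false
```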

Function Definitions


/**

* @fn mask8()

* @brief gets the number of bit value from the input byte position as according to mask

* @param mask -> String (Binary or Hex Mask string. Mask should always have 8 bits/1byte)

* @param byte_value -> Uint8 (Input Byte Value)

* @return 8 bit integer -> masked bits from byte_value

*/

BinaryUtils.mask8(mask, byte_value);

/**

* @fn mask16()

* @brief gets the number of bit value from the input buffer index as according to Mask

* @param mask -> String (Binary or Hex Mask string. Mask should always have 16

* bits/2bytes)

* @param buffer -> Uint8Array[] (Input buffer)

* @param buffer_index -> Integer (Start buffer index)

* @return Integer -> masked bits from input buffer

*/

BinaryUtils.mask16(mask, buffer, buffer_index);

/**

* @fn mask32()

* @brief gets the number of bit value from the input buffer index as according to Mask

* @param mask -> String (Binary or Hex Mask string. Mask should always have 32

* bits/4bytes)

* @param buffer -> Uint8Array[] (Input buffer)

* @param buffer_index -> Integer (Start buffer index)

* @return Integer -> masked bits from input buffer

*/

BinaryUtils.mask32(mask, buffer, buffer_index);

/**

* @fn mask8AndShiftRight()

* @brief gets the number of bit value from the input byte position as according to Mask and right

* shift the value for all zero bits

* @param mask -> String (Binary or Hex Mask string. Mask should always have 8 bits/1byte)

* @param byte_value -> Uint8 (Input Byte Value)

* @return 8 bit integer -> masked bits from byte_value after right shift

*/

BinaryUtils.mask8AndShiftRight(mask, byte_value);

/**

* @fn mask16AndShiftRight()

* @brief gets the number of bit value from the input buffer index as according to Mask and right

* shift the value for all zero bits

* @param mask -> String (Binary or Hex Mask string. Mask should always have 16

* bits/2bytes)

* @param buffer -> Uint8Array[] (Input buffer)

* @param buffer_index -> Integer (Start buffer index)

* @return Integer -> masked bits from input buffer after right shift

*/

BinaryUtils.mask16AndShiftRight(mask, buffer, buffer_index);

/**

* @fn mask32AndShiftRight()

* @brief gets the number of bit value from the input buffer index as according to Mask and right

* shift the value for all zero bits

* @param mask -> String (Binary or Hex Mask string. Mask should always have 32 bits/4bytes)

* @param buffer -> Uint8Array[] (Input buffer)

* @param buffer_index -> Integer (Start buffer index)

* @return Integer -> masked bits from input buffer after right shift

*/

BinaryUtils.mask32AndShiftRight(mask, buffer, buffer_index);

/**

* @fn mask8AndSet()

* @brief returns the byte_value after setting the set_value into the input byte as according to

* bits mentioned in the mask

* @param mask -> String (Binary or Hex Mask string. Mask should always have 8 bits/1byte)

* @param byte_value -> Uint8 (Input Byte Value)

* @param set_value -> Integer (value to be set)

* @return 8 bit integer -> The byte_value after setting the set value into it.

*/

BinaryUtils.mask8AndSet(mask, byte_value, set_value);

/**

* @fn mask16AndSet()

* @brief sets the number of bits value into the input buffer from buffer index as according to

* bits mentioned in the mask

* @param mask -> String (Binary or Hex Mask string. Mask should always have 16

* bits/2bytes)

* @param buffer -> Uint8Array[] (Input buffer)

* @param buffer_index -> Integer (Start buffer index)

* @param set_value -> Integer (value to be set)

* @return updated input buffer

*/

BinaryUtils.mask16AndSet(mask, buffer, buffer_index, set_value);

/**

* @fn mask32AndSet()

* @brief sets the number of bits value into the input buffer from buffer index as according to

* bits mentioned in the mask

* @param mask -> String (Binary or Hex Mask string. Mask should always have 32

* bits/4bytes)

* @param buffer -> Uint8Array[] (Input buffer)

* @param buffer_index -> Integer (Start buffer index)

* @param set_value -> Integer (value to be set)

* @return updated input buffer

*/

BinaryUtils.mask32AndSet(mask, buffer, buffer_index, set_value);

/**

* @fn crc16()

* @brief calculates the crc16 checksum on the input byte array

* @param algorithm -> Builtin Global Object [CRC16Algorithm.ARC, CRC16Algorithm.XMODEM, CRC16Algorithm.MODBUS, CRC16Algorithm.USB, CRC16Algorithm.CMS]

* @param buffer -> Uint8Array (Input buffer)

* @param start_index -> Integer [OPTIONAL] (Start index of input buffer)

* @param length -> Integer [OPTIONAL] (length of input buffer from start index)

* @return 16 bit integer -> crc16 checksum

*/

BinaryUtils.crc16(algorithm, buffer, start_index, length)

/**

* @fn crc32()

* @brief calculates the crc32 checksum on the input byte array

* @param algorithm -> Builtin Global Object [CRC32Algorithm.AIXM]

* @param buffer -> Uint8Array (Input buffer)

* @param start_index -> Integer [OPTIONAL] (Start index of input buffer)

* @param length -> Integer [OPTIONAL] (length of input buffer from start index)

* @return 32 bit integer -> crc32 checksum

*/

BinaryUtils.crc32(algorithm, buffer, start_index, length)

/**

* @fn base64_encode()

* @brief base64 encode the input byte array

* @param buffer -> Uint8Array (Input buffer)

* @return String -> base64 encoded string

*/

BinaryUtils.base64_encode(buffer);

/**

* @fn base64_decode()

* @brief decode the base64 encoded string

* @param encoded_data -> String (Input string)

* @return Uint8Array -> decoded array buffer

*/

BinaryUtils.base64_decode(encoded_data);
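To clarify what "mask", "mask and shift right", and "mask and set" mean, here is a plain JavaScript sketch of the 8-bit operations and one 16-bit operation. It is not the EI implementation: the function names are illustrative, masks are numeric rather than mask strings for brevity, and big-endian byte order in the buffer is an assumption (it is consistent with the documented outputs of the set-bit example in this document).

```javascript
// Illustration of the masking semantics only; not the BinaryUtils code.

// Number of zero bits to the right of the lowest 1 bit in the mask.
function trailingZeros(mask) {
  if (mask === 0) {
    throw new Error("mask must contain at least one 1 bit");
  }
  var n = 0;
  while (((mask >>> n) & 1) === 0) {
    n++;
  }
  return n;
}

// Keep only the bits selected by the mask.
function mask8(mask, byteValue) {
  return byteValue & mask & 0xFF;
}

// Keep the selected bits, then shift them down to bit 0.
function mask8AndShiftRight(mask, byteValue) {
  return mask8(mask, byteValue) >>> trailingZeros(mask);
}

// Write setValue into the bit positions selected by the mask.
function mask8AndSet(mask, byteValue, setValue) {
  var shifted = (setValue << trailingZeros(mask)) & mask;
  return ((byteValue & ~mask) | shifted) & 0xFF;
}

// 16-bit variant on a buffer, assuming big-endian byte order.
function mask16AndSet(mask, buffer, index, setValue) {
  var word = (buffer[index] << 8) | buffer[index + 1];
  var shifted = (setValue << trailingZeros(mask)) & mask;
  word = ((word & ~mask) | shifted) & 0xFFFF;
  buffer[index] = word >>> 8;
  buffer[index + 1] = word & 0xFF;
  return buffer;
}

console.log(mask8(0xF0, 0x4E));              // 64 (0x40)
console.log(mask8AndShiftRight(0xF0, 0x4E)); // 4
console.log(mask8AndSet(0xF0, 0x41, 7));     // 113 (0x71)
```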

Examples

Applying CRC & Base64 Encoding/Decoding on Uint8Array using BinaryUtils object


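// Buffer Input (Uint8Array) -> 0x41 0x42 0x43 0x44 0x45 0x46 0x47 0x48 0x49 0x4A ("ABCDEFGHIJ")

var buffer = new Uint8Array([0x41, 0x42, 0x43, 0x44, 0x45, 0x46, 0x47, 0x48, 0x49, 0x4A]);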

function on_update() {

// encoded_message -> QUJDREVGR0hJSg==

output.encoded_message = BinaryUtils.base64_encode(buffer);

// decoded_message -> "Cisco - Edge Intelligence"

var decoded_buf = BinaryUtils.base64_decode("Q2lzY28gLSBFZGdlIEludGVsbGlnZW5jZQ==");

output.decoded_message = String.fromCharCode.apply(null, new Uint8Array(decoded_buf));

// crc16_checksum -> 3230

output.crc16_checksum = BinaryUtils.crc16(CRC16Algorithm.ARC, buffer);

// crc32_checksum -> 840854789

output.crc32_checksum = BinaryUtils.crc32(CRC32Algorithm.AIXM, buffer);

output.publish();

}
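If you want to cross-check a checksum outside the EI Agent, a plain JavaScript CRC-16 can help. The sketch below implements the reflected CRC-16 with polynomial 0xA001. It rests on an assumption that the source does not confirm: that CRC16Algorithm.ARC and CRC16Algorithm.MODBUS use the standard CRC-16/ARC (initial value 0x0000) and CRC-16/MODBUS (initial value 0xFFFF) parameters. The function names are illustrative.

```javascript
// Reflected CRC-16 with polynomial 0xA001 (bit-reversed 0x8005).
// Assumption: init 0x0000 matches CRC-16/ARC, init 0xFFFF matches CRC-16/MODBUS.
function crc16Reflected(bytes, init) {
  var crc = init;
  for (var i = 0; i < bytes.length; i++) {
    crc ^= bytes[i];
    for (var bit = 0; bit < 8; bit++) {
      crc = (crc & 1) ? (crc >>> 1) ^ 0xA001 : crc >>> 1;
    }
  }
  return crc & 0xFFFF;
}

// Convert an ASCII string to a byte array.
function asciiBytes(text) {
  var bytes = new Uint8Array(text.length);
  for (var i = 0; i < text.length; i++) {
    bytes[i] = text.charCodeAt(i);
  }
  return bytes;
}

// "123456789" is the conventional check input for CRC algorithms.
var checkInput = asciiBytes("123456789");
console.log(crc16Reflected(checkInput, 0x0000).toString(16)); // bb3d
console.log(crc16Reflected(checkInput, 0xFFFF).toString(16)); // 4b37
```

If the values computed on the agent differ from this sketch, the agent most likely uses different parameters than assumed here.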

Applying Bit Manipulation get bit functions on Uint8Array using BinaryUtils object


function string_to_byte_array(str) {

var strLen=str.length;

var buf = new Uint8Array(strLen);

for (var i=0; i < strLen; i++) {

buf[i] = str.charCodeAt(i);

}

return buf;

}

// Buffer Input (Uint8Array) -> 0x4E 0x62 0x63 0x64 0x65 0x66

function on_update() {

var get_buf = string_to_byte_array(input.getbit_array_data);

//function 1. mask8

output.getbit_mask8 = BinaryUtils.mask8("0b1111_0000", get_buf[0]);

//function 2. mask8AndShiftRight

output.getbit_mask8AndShiftRight = BinaryUtils.mask8AndShiftRight(

"0b11110000",

get_buf[0]

);

//function 3. mask16

output.getbit_mask16 = BinaryUtils.mask16(

"0b0000_1111_1111_0000",

get_buf,

0

);

//function 4. mask16AndShiftRight

output.getbit_mask16AndShiftRight = BinaryUtils.mask16AndShiftRight(

"0b0000_1111_1111_0000",

get_buf,

0

);

//function 5. mask32

output.getbit_mask32 = BinaryUtils.mask32(

"0b0000_1111_1111_1111_1111_1111_1111_0000",

get_buf,

0

);

//function 6. mask32AndShiftRight

output.getbit_mask32AndShiftRight = BinaryUtils.mask32AndShiftRight(

"0b0000_1111_1111_1111_1111_1111_1111_0000",

get_buf,

0

);

output.publish();

}

Applying Bit Manipulation set bit functions on Uint8Array using BinaryUtils object


function string_to_byte_array(str) {

var strLen=str.length;

var buf = new Uint8Array(strLen);

for (var i=0; i < strLen; i++) {

buf[i] = str.charCodeAt(i);

}

return buf;

}

// Buffer Input (Uint8Array) -> 0x41 0x42 0x43 0x44 0x45 0x46 ("ABCDEF")

function on_update() {

var set_buf = string_to_byte_array(input.setbit_array_data);

var dup_set_buf = set_buf;

//function 1. mask8AndSet ,output: 0x71 = 113

output.setbit_mask8AndSet = BinaryUtils.mask8AndSet("0xF0", set_buf[0], 7);

//function 2. mask16AndSet ,output: "aBCDEF"

set_buf = dup_set_buf;

BinaryUtils.mask16AndSet("0xFF00", set_buf, 0, 0x61);

output.setbit_mask16AndSet = String.fromCharCode.apply(

null,

new Uint8Array(set_buf)

);

//function 3. mask32AndSet ,output: "bCDDEF"

set_buf = dup_set_buf;

BinaryUtils.mask32AndSet("0x0F_FF_FF_F0", set_buf, 0, 0x243444);

output.setbit_mask32AndSet = String.fromCharCode.apply(

null,

new Uint8Array(set_buf)

);

output.publish();

}

(Optional) Transfer files

Use the transfer file function to download a file to an EI agent from a remote or connected device and then upload it to a remote destination that is not pre-configured in EI.

It is recommended to read the target URL (and, optionally, the credentials and other connection-related parameters) from a custom attribute of the asset. This way, the script can read and use these values but cannot modify them, which ensures that the script does not do something unintended.

Note: Custom attributes are defined in the Asset Type and values are assigned when Assets are defined. See Asset Types for more information.

The function allows you to optionally specify SSL and Authentication parameters for the source and target servers.

HTTPS and authentication

This function supports HTTPS and authentication if required by the server, and accepts 5 arguments:

- Source URL: (Required) The URL from where the file will be downloaded. This can be a string defined in the script or an attribute configured in your Cisco IoT organization.

- Asset Type Name: (Required string) The input asset type name (variable name) which has the custom attribute corresponding to Target URL defined.

- Target URL: (Required string) The name of the custom attribute defined in the asset type in your Cisco IoT organization. This provides strict control over what is uploaded from the agent. If the "Target URL" attribute value ends with a slash ('/'), the filename from the source URL will be appended to the configured "Target URL" attribute value to generate the final URL that will be used. Otherwise, the configured attribute value will be used "as-is" to upload the file. The upload is triggered using an HTTP POST request.

For example:

- If the HTTP server does not care about the original source file name, and wants all the POST requests to be posted at the same URL, then the "Target URL" attribute should not end in "/" (e.g. https://{hostname}/upload/logfile). In this case, the name of the source file is not sent to the HTTP server and the URL handler should decide how to handle the contents of each request. For example, it can save each file with a dynamically generated unique name.

- If the HTTP server needs to know the original source file name, then the "Target URL" attribute should end in "/" (e.g. https://{hostname}/dir1/ ). In this case, the EI Agent extracts the source file name from the source URL, appends it to the given "Target URL" attribute, and then issues the POST request to this constructed URL. For example, if the source URL was "http://myasset/log-2020-17-11-12-00-00.txt" and the target URL attribute was "http://myremoteserver/logs/", then the EI Agent sends the POST request to the URL "http://myremoteserver/logs/log-2020-17-11-12-00-00.txt".

- Source_options: (Optional) JavaScript object containing SSL and Authentication options needed for file download. If no options are required, "{}" must be passed.

- Target_options: (Optional) JavaScript object containing SSL and Authentication options needed for file upload. If not required, "{}" must be passed.
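The Target URL rule described above can be sketched in plain JavaScript. This is only an illustration of the documented behaviour, not the agent's implementation: resolveTargetUrl is a hypothetical helper name, and taking the file name as everything after the last "/" of the source URL is an assumption.

```javascript
// Hypothetical helper (not part of the EI API): mirrors the documented
// Target URL rule so the expected upload URL can be worked out by hand.
function resolveTargetUrl(sourceUrl, targetUrl) {
  // No trailing slash: the configured attribute value is used as-is.
  if (targetUrl.charAt(targetUrl.length - 1) !== "/") {
    return targetUrl;
  }
  // Trailing slash: append the file name taken from the source URL.
  // Assumption: the file name is everything after the last "/".
  var fileName = sourceUrl.substring(sourceUrl.lastIndexOf("/") + 1);
  return targetUrl + fileName;
}

var uploadUrl = resolveTargetUrl(
  "http://myasset/log-2020-17-11-12-00-00.txt",
  "http://myremoteserver/logs/"
);
```

With the values from the example above, uploadUrl holds the constructed URL ending in the source file name, while a target such as "https://{hostname}/upload/logfile" would be returned unchanged.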

SSL options

SSL options may contain the following options:

- self_signed_tls_certificate_allowed: Boolean option that specifies whether self-signed certificates are allowed.

- certs_path: String specifying the path to the certificates.

- ca_file: String specifying the location of the Certificate Authority (CA) certificates file.

- cipher_list: String specifying the cipher list.

- verify_peer: Boolean option that specifies whether the certificate of the peer should be verified.

- verify_hostname: Boolean option that specifies whether the hostname in the certificate of the peer should be verified. Requires verify_peer to be true.
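The option types and the dependency between verify_hostname and verify_peer can be checked before the options object is passed on. The following is a minimal sketch under assumptions: checkSslOptions is a hypothetical helper, not an EI function, and the key names are copied from the list above, so confirm the exact spelling your agent version expects.

```javascript
// Hypothetical pre-flight check (not part of the EI API).
// Returns a list of problems; an empty list means the options look consistent.
function checkSslOptions(options) {
  var problems = [];
  var booleanKeys = ["self_signed_tls_certificate_allowed", "verify_peer", "verify_hostname"];
  var stringKeys = ["certs_path", "ca_file", "cipher_list"];

  booleanKeys.forEach(function (key) {
    if (key in options && typeof options[key] !== "boolean") {
      problems.push(key + " must be a boolean");
    }
  });
  stringKeys.forEach(function (key) {
    if (key in options && typeof options[key] !== "string") {
      problems.push(key + " must be a string");
    }
  });
  // Documented dependency: verify_hostname requires verify_peer to be true.
  if (options.verify_hostname === true && options.verify_peer !== true) {
    problems.push("verify_hostname requires verify_peer to be true");
  }
  return problems;
}

var sslProblems = checkSslOptions({ verify_hostname: true });
```

Here sslProblems contains one entry because verify_hostname is set without verify_peer.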

Authentication options

Authentication options include:

- Username: Username associated with the user for the download/upload server.

- Password: Password for the username.

Input Data Model Example

This is configured in your Cisco IoT organization.

In this example, we have an Asset Type "my_input_asset_model" created in the EI cloud with the following attribute and custom attribute.

Source_url attribute

| Title | Value |

|---|---|

| Attribute Name | source_file_url |

| Label | SourceFileUrl |

| Data Type | String |

| Topic | source_file_url |

Target_url custom attribute

| Title | Value |

|---|---|

| Attribute Name | target_url |

| Label | TargetUrl |

| Data Type | String |

| Field Validation | Optional |

The Output Model

[{"key":"message", "type":"STRING", "category": "TELEMETRY"}]

File transfer Data Logic script example

function on_update() {

var sourceOptions = new Object();

sourceOptions.ssl_verify_peer = false;

sourceOptions.username = "username"; //Authentication options for download

sourceOptions.password = "password"; //Authentication options for download

var targetOptions = new Object();

targetOptions.ssl_verify_peer = false;

targetOptions.username = "username"; //Authentication options for upload

targetOptions.password = "password"; //Authentication options for upload

transfer_file(my_input_asset_model.source_file_url, "my_input_asset_model", "target_url", sourceOptions, targetOptions);

output.message = "Transferred file " + my_input_asset_model.source_file_url;

output.publish();

}

(Optional) Publish data to an asset (Local Action)

Some Asset Types, such as Serial, Modbus, NTCIP, and RSU, support writable attributes. You can set the values of these writable attributes in your JavaScript scripts.

The current setting for all writable attributes can then be published to the asset. This is achieved in the script by calling the function "publish()" on the asset type variable. Note that the actual write to the asset does not happen when the variable is assigned a value, but will happen only when the publish() function is called.

Local action example:

function on_update(){

if (oil_pump.pressure > oil_pump.max_pressure) {

oil_pump.engine_speed *= 0.95;

oil_pump.publish();

} else if (oil_pump.pressure < oil_pump.min_pressure) {

oil_pump.engine_speed *= 1.05;

oil_pump.publish();

}

}

Additional Examples

The following section contains two examples – the first example is a simple Degree Celsius to Degree Fahrenheit conversion, and the second is a more detailed sliding average example.

Example:

- Temperature Conversion

- Sliding average on two fields

Temperature conversion from Celsius to Fahrenheit

Input Data Model Example

This example is an OPC-UA variable from a simulator.

{

"apiVersion": 1.0,

"connectionType": "OPC_UA",

"metrics": {

"temperature": {

"label": "Temp in Degree Celsius",

"nodeId": {

"type": "numeric",

"namespaceUri": "http://cisco.com/ns/test",

"identifier": "4711"

},

"samplingInterval": 500.0,

"datatype": "Float"

}

}

}

The Datalogic Script

function on_update() {

var temp_celsius = opcua_var.temperature;

var temp_fahrenheit = temp_celsius * (9/5) + 32;

output.temperature = temp_fahrenheit;

output.publish();

}

The Output Model

[{"key":"temperature", "type":"DOUBLE", "category": "TELEMETRY"}]
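The conversion formula used in the script can be checked on its own, outside the agent. The sketch below isolates it in a standalone function; celsiusToFahrenheit is an illustrative name that does not appear in the original script.

```javascript
// Standalone version of the formula used in on_update() above.
function celsiusToFahrenheit(tempCelsius) {
  return tempCelsius * (9 / 5) + 32;
}

var freezing = celsiusToFahrenheit(0);   // freezing point of water
var boiling = celsiusToFahrenheit(100);  // boiling point of water
var crossover = celsiusToFahrenheit(-40); // the point where both scales agree
```

Well-known reference points such as these make it easy to confirm that the operator order in the formula is correct before the script is deployed.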

Sliding average on two fields

The code assumes an input model with two fields, temp and vibration. Both fields are also expected to be present in the output model. The expected output is the sliding average of each input variable over a configurable window size.

Note: To check whether the script is working as expected, you can deploy the current version using a policy and monitor its health status on the health monitoring page.

The input model (two inputs, “temp” and “vibration”):

{

"apiVersion": 1.0,

"connectionType": "OPC_UA",

"metrics": {

"temp": {

"label": "Current Temperature",

"nodeId": {

"type": "numeric",

"namespaceUri": "http://cisco.com/ns/test",

"identifier": "4711"

},

"samplingInterval": 500.0,

"datatype": "Float"

},

"vibration": {

"label": "Current Vibration Amplitude",

"nodeId": {

"type": "numeric",

"namespaceUri": "http://cisco.com/ns/test",

"identifier": "4715"

},

"samplingInterval": 500.0,

"datatype": "Float"

}

}

}

The Datalogic Script

function FastSlidingAvg(window_size) {

this.size = window_size;

this.values = [];

this.sum = 0;

this.update = function(value) {

if(this.values.length == this.size) {

this.sum -= this.values[0];

this.values.shift();

}

this.sum += value;

this.values.push(value);

}

this.avg = function() {

return this.sum / this.values.length;

}

}

var acc = {};

function init() {

acc["temp"] = new FastSlidingAvg(100);

acc["vibration"] = new FastSlidingAvg(100);

output.temp = 0;

output.vibration = 0;

}

function on_update() {

var field_name = trigger.field_name;

var value = globalThis[trigger.device_name][field_name];

acc[field_name].update(value);

output[field_name] = acc[field_name].avg();

output.publish();

}

The Output Model

[

{"key":"temp", "type":"DOUBLE", "category": "TELEMETRY"},

{"key":"vibration", "type":"DOUBLE", "category": "TELEMETRY"}

]
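To see how the window behaves, the same technique can be exercised outside the agent with a small window. The sketch below restates the accumulator in standalone form; the constructor name SlidingAvg, the window size of 3, and the sample values are illustrative only.

```javascript
// Standalone sliding-window average, same idea as FastSlidingAvg above:
// keep a running sum and drop the oldest value once the window is full.
function SlidingAvg(windowSize) {
  this.size = windowSize;
  this.values = [];
  this.sum = 0;
}

// Adds a value and returns the average over the current window.
SlidingAvg.prototype.update = function (value) {
  if (this.values.length === this.size) {
    this.sum -= this.values.shift(); // remove the oldest value from the sum
  }
  this.sum += value;
  this.values.push(value);
  return this.sum / this.values.length;
};

var window3 = new SlidingAvg(3);
var averages = [1, 2, 3, 4].map(function (v) {
  return window3.update(v);
});
// averages is [1, 1.5, 2, 3]: the last entry covers only 2, 3 and 4.
```

Until the window is full, the average is taken over the values received so far, which is why the first results are not divided by the full window size.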

Data Logic Example for Multi Asset Selection

In the following example, the temperature fields of two OPC-UA assets are read and their average is published.

Input Data Model 1 (Variable name: 'temperature_input1'):

{

"apiVersion": 1,

"connectionType": "OPC_UA",

"fields": {

"temperature": {

"label": "temperature",

"description": "temperature",

"datatype": "Float",

"nodeId": {

"namespaceUri": "http://cisco.com/ns/temp",

"identifier": "12",

"type": "numeric"

},

"samplingInterval": 2000,

"category": "TELEMETRY"

}

}

}

Input Data Model 2 (Variable name: 'temperature_input2'):

{

"apiVersion": 1,

"connectionType": "OPC_UA",

"fields": {

"temperature": {

"label": "Temperature",

"description": "temperature",

"datatype": "Float",

"nodeId": {

"type": "numeric",

"namespaceUri": "http://cisco.com/ns/test",

"identifier": "4711"

},

"samplingInterval": 100,

"category": "TELEMETRY"

}

}

}

The Data Logic script

var temp1 = 0;

var temp2 = 0;

function on_update() {

temp1 = temperature_input1.temperature?temperature_input1.temperature:0;

temp2 = temperature_input2.temperature?temperature_input2.temperature:0;

output.averageTemp = (temp1 + temp2) / 2;

output.publish();

}

The Output Model

[{"key":"averageTemp","type":"DOUBLE","category":"TELEMETRY"}]
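The script above substitutes 0 for a reading that is not yet available, which pulls the published average toward zero. One possible alternative, shown here as a sketch rather than a recommended approach, averages only the readings that are present. averageAvailable is a hypothetical helper and not part of the EI API.

```javascript
// Hypothetical helper: average only the readings that are finite numbers.
// Returns null when no reading is available.
function averageAvailable(readings) {
  var sum = 0;
  var count = 0;
  for (var i = 0; i < readings.length; i++) {
    var reading = readings[i];
    if (typeof reading === "number" && isFinite(reading)) {
      sum += reading;
      count++;
    }
  }
  return count > 0 ? sum / count : null;
}

// Only the first asset has reported a value so far.
var partialAverage = averageAvailable([20, undefined]);
// Both assets have reported.
var fullAverage = averageAvailable([20, 30]);
```

In a Data Logic script the helper could be called with temperature_input1.temperature and temperature_input2.temperature, skipping output.publish() while the result is null.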

Data Logic example for Configurable Data Payload

Input Model (Variable name: 'opcua_var'):

{

"apiVersion": 1,

"connectionType": "OPC_UA",

"fields": {

"temperature": {

"label": "temperature",

"description": "",

"datatype": "Float",

"nodeId": {

"namespaceUri": "http://cisco.com/ns/temp",

"identifier": "12",

"type": "numeric"

},

"samplingInterval": 2000,

"category": "TELEMETRY"

}

}

}

The Data Logic Script

var seq = 0;

function on_update() {

seq++;

var temp_celsius = opcua_var.temperature;

var temp_fahrenheit = temp_celsius * (9 / 5) + 32;

var outputDataPayload = {

timestamp: Date.now(),

metrics: [

{

name: 'temperature',

alias: 1,

timestamp: Date.now(),

dataType: 'Integer',

value: temp_fahrenheit,

}

],

seq: seq,

};

publish('output', outputDataPayload);

}

Output Model: Blank

Data Logic Example for Local Action

Input Asset Type 1 - temperature_sensor (Variable name: 'temperature_sensor')

{

"apiVersion": 1,

"connectionType": "MODBUS_TCP",

"fields": {

"temperature": {

"label": "temperature",

"datatype": "Double",

"description": "",

"required": false,

"category": "ATTRIBUTE"

},

"max_temperature": {

"label": "max_temperature",

"datatype": "Double",

"description": "",

"required": false,

"category": "ATTRIBUTE"

}

}

}

Input Asset Type 2 - led_board (Variable name: 'led_board')

{

"apiVersion": 1,

"connectionType": "MODBUS_TCP",

"fields": {

"text_color": {

"label": "text_color",

"datatype": "String",

"description": "",

"rawType": "VARCHARSTRING",

"type": "COIL",

"pollingInterval": 200,

"offset": 0,

"category": "TELEMETRY",

"access": "ReadWrite"

}

}

}

Data Logic Script

const RED = "RED";

const GREEN = "GREEN";

function on_update() {

if (temperature_sensor.temperature >= temperature_sensor.max_temperature) {

led_board.text_color = RED;

} else {

led_board.text_color = GREEN;

}

output.temperature = temperature_sensor.temperature;

output.text_color = led_board.text_color;

led_board.publish();

output.publish();

}

Output Data Model

[{"key": "temperature", "type": "DOUBLE", "category": "TELEMETRY"},

{"key": "text_color", "type": "STRING", "category": "TELEMETRY"}]

Data Logic Example for HTTP File Transfer

Input Data Model (Variable name: 'assetData'):

{

"apiVersion": 1,

"connectionType": "MQTT",

"fields": {

"target_url": {

"label": "TargetUrl",

"description": "",

"datatype": "String",

"required": false,

"category": "ATTRIBUTE"

},

"source_file_url": {

"label": "SourceFileUrl",

"description": "",

"datatype": "String",

"topic": "source_file_url",

"category": "TELEMETRY"

},

"is_file_updated":{

"label": "isFileUpdated",

"description": "",

"datatype": "String",

"topic": "source_file_url",

"category": "TELEMETRY"

}

}

}

The Data Logic script

function on_update() {

if(assetData.is_file_updated === 'true'){

transfer_file(assetData.source_file_url, 'assetData', 'target_url', {}, {});

output.transferStatus = 'SUCCESS';

}

output.publish();

}

The Output Model

[{"key": "transferStatus", "type": "STRING", "category": "TELEMETRY"}]

DSRC Conversion APIs

- API: dsrc2016_encode : This function encodes data into the J2735 message format for both 2016 and 2020 standards, producing a uint8 array of bytes as output.

- API: dsrc2016_decode : This function decodes a uint8 array of bytes containing encoded data into the J2735 message format for the 2016 and 2020 standards, returning the result in JSON format.

The year specified in the API names refers to the J2735 standard. To maintain backward compatibility with older data logics that continue to run in production environments, the API retains the "2016" label, even though it fully supports encoding and decoding for both 2016 and 2020 message formats.

function on_update() {

// The following variables represent the DSRC message in different formats

var dsrc_uint8array = new Uint8Array([0x00, 0x12, 0x82, 0x6c, 0x38,...]); // DSRC message as a Uint8Array

var dsrc_hex_string = '0012826c38093000204e2825...'; // DSRC message as a hex string

var dsrc_json_string = '{"messageId":18,"value":{"MapData":{"intersections":[{"id":{...'; // DSRC message as a JSON string

// Conversion guidelines

// 1. Convert a DSRC JSON string to a Uint8Array

dsrc2016_encode(dsrc_json_string);

// 2. Convert a Uint8Array to a DSRC JSON string

dsrc2016_decode(dsrc_uint8array);

// 3. Convert a hex string to a DSRC JSON string

dsrc2016_decode(Duktape.dec('hex', dsrc_hex_string));

// 4. Convert a DSRC JSON string to a hex string

Duktape.enc('hex', dsrc2016_encode(dsrc_json_string));

}
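Duktape.enc('hex', ...) and Duktape.dec('hex', ...) exist only in the Duktape runtime. When test data is prepared outside the agent, equivalent conversions can be sketched in portable JavaScript. hexToBytes and bytesToHex are hypothetical helper names, and the sample input is just the first five bytes shown in the example above.

```javascript
// Portable stand-ins for the Duktape hex helpers (hypothetical names).
function hexToBytes(hex) {
  if (hex.length % 2 !== 0) {
    throw new Error("hex string must have an even length");
  }
  var bytes = new Uint8Array(hex.length / 2);
  for (var i = 0; i < bytes.length; i++) {
    // Each pair of hex digits becomes one byte.
    bytes[i] = parseInt(hex.substring(i * 2, i * 2 + 2), 16);
  }
  return bytes;
}

function bytesToHex(bytes) {
  var hex = "";
  for (var i = 0; i < bytes.length; i++) {
    // Pad single-digit values so every byte yields two characters.
    hex += (bytes[i] < 16 ? "0" : "") + bytes[i].toString(16);
  }
  return hex;
}

var sampleBytes = hexToBytes("0012826c38");
var sampleHex = bytesToHex(sampleBytes);
```

The helpers do not validate that the input contains only hex digits, so they are suitable for preparing known test vectors rather than for handling untrusted input.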

Protobuf Guide

This guide will refer to the protofile below.

Protofile:

package test;

syntax = "proto3";

message TestMsg {

int32 temperature = 1;

string pressure = 2;

}

Step-1 Initialisation:

To work with protobuf messages in a Data Logic script:

- Convert the above protofile to a base64 string and initialise the protobuf context.

- Create a helper variable, pb.TestMsg, which helps with the creation, encoding, and decoding of protobuf messages of type test.TestMsg.

Example:

var pb = new Object();

function init() {

var protobuf = require("protobuf");

// Load protofile

var base64_encoded_protofile = "cGFja2FnZSB0ZXN0OwpzeW50YXggPSAicHJvdG8zIjsKbWVzc2FnZSBUZXN0TXNnIHsKICAgIGludDMyIHRlbXBlcmF0dXJlID0gMTsKICAgIHN0cmluZyBwcmVzc3VyZSA9IDI7Cn0=";

var root = protobuf.init([base64_encoded_protofile]);

// Create a helper object for creating, encoding, and decoding protobuf messages

pb.TestMsg = root.lookup("test.TestMsg");

}
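The base64 string used in init() can be produced on a workstation before it is pasted into the script. The sketch below assumes Node.js, whose Buffer class is not available inside the Data Logic runtime; the variable names are illustrative. The protofile text is written with four-space indentation and no trailing newline, which is what the base64 string above decodes to.

```javascript
// Run with Node.js on a workstation, not inside the Data Logic script.
var protoText = [
  'package test;',
  'syntax = "proto3";',
  'message TestMsg {',
  '    int32 temperature = 1;',
  '    string pressure = 2;',
  '}'
].join("\n");

// Encode the protofile text as base64 for use in protobuf.init([...]).
var encodedProtofile = Buffer.from(protoText, "utf8").toString("base64");

// Decoding the string again is a quick way to inspect an existing value.
var decodedProtofile = Buffer.from(encodedProtofile, "base64").toString("utf8");
```

Because whitespace is part of the encoded bytes, a protofile with different indentation or line endings yields a different base64 string even though it describes the same message.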

Step-2 Create, encode, and decode protobuf messages

Create and Encode:

- This example receives temperature updates from a sensor and publishes them to an MQTT destination as a protobuf message.

- pb.TestMsg.create() - To create protobuf messages

- pb.TestMsg.encode() - To encode protobuf message

- Every protobuf field's assignment is similar to a JSON assignment.

Example:

var pb = new Object();

function init() {

var protobuf = require("protobuf");

var base64_encoded_protofile = "cGFja2FnZSB0ZXN0OwpzeW50YXggPSAicHJvdG8zIjsKbWVzc2FnZSBUZXN0TXNnIHsKICAgIGludDMyIHRlbXBlcmF0dXJlID0gMTsKICAgIHN0cmluZyBwcmVzc3VyZSA9IDI7Cn0=";

var root = protobuf.init([base64_encoded_protofile]);

pb.TestMsg = root.lookup("test.TestMsg");

}

function on_update() {

// Create a protobuf message by populating sensor readings

var message = pb.TestMsg.create({

temperature: sensor.temperature,

pressure: "30.4" // pressure is declared as a string in the protofile

});

// Serialise the message to a protobuf binary format

var buffer = pb.TestMsg.encode(message).finish();

// Send to MQTT destination

publish("output", buffer);

}

Decode:

- Decoding protobuf message: This example receives protobuf messages from the MQTT destination (Cloud2Device feature) and decodes them.

- pb.TestMsg.decode() - To decode protobuf message

- Every protobuf field's access is similar to a JSON attribute.

Example:

var pb = new Object();

function init() {

var protobuf = require("protobuf");

var base64_encoded_protofile = "cGFja2FnZSB0ZXN0OwpzeW50YXggPSAicHJvdG8zIjsKbWVzc2FnZSBUZXN0TXNnIHsKICAgIGludDMyIHRlbXBlcmF0dXJlID0gMTsKICAgIHN0cmluZyBwcmVzc3VyZSA9IDI7Cn0=";

var root = protobuf.init([base64_encoded_protofile]);

pb.TestMsg = root.lookup("test.TestMsg");

}

function on_cloud_command() {

logger.info("Received message on topic: ", output.command_properties["topic"]); // Output: Received message on topic: test-topic/A

// Decode the serialised protobuf message from Cloud

var message = pb.TestMsg.decode(output.command);

logger.info("Received pressure update from Cloud: ", message.pressure); // Output: Received pressure update from Cloud: 30.4

// Do something else

}

Support for publishing on multiple topics with MQTT as a destination

We can use Datalogic Script to publish telemetry messages on many topics with MQTT as the destination.

Below is an example of publishing the sensor reading to the destination on two different topics, test/topic1 and test/topic2.

Example:

function on_update() {

var message = sensor.temperature;

var property1 = {"topic":"test/topic1"}; // If QoS is not specified, the default from the IoT OD destination configuration is used

var property2 = {"topic":"test/topic2","qos":1};

// Publish to destination

publish("output", message, property1);

publish("output", message, property2);

}
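When the same message goes to several topics, the repeated publish() calls can be wrapped in a loop. The following is a minimal sketch: publishToTopics is a hypothetical helper, and fakePublish is a stand-in that records calls so the sketch runs outside the agent. Inside a Data Logic script, the agent's own publish() function would be passed instead.

```javascript
// Hypothetical helper: publish one message to each topic in a list.
// publishFn is expected to accept (target, message, properties),
// matching the publish() calls shown above.
function publishToTopics(publishFn, message, topics, qos) {
  for (var i = 0; i < topics.length; i++) {
    var properties = { topic: topics[i] };
    if (qos !== undefined) {
      properties.qos = qos; // omit qos to fall back to the destination default
    }
    publishFn("output", message, properties);
  }
}

// Stand-in for the agent's publish() so the sketch can run anywhere.
var recorded = [];
function fakePublish(target, message, properties) {
  recorded.push({ target: target, message: message, properties: properties });
}

publishToTopics(fakePublish, 21.5, ["test/topic1", "test/topic2"], 1);
```

After the call, recorded holds one entry per topic, each with the same message and QoS.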

Multi-topic subscription

We need to use the mqtt_add_subscription() method in the init() function to subscribe to multiple topics.

function init() {

mqtt_add_subscription("test_sub_a"); // calling api without QoS level

mqtt_add_subscription("test_sub_b", 1); //calling api with Topic and QoS level

mqtt_add_subscription("test_sub/#"); //calling api with wildcard topic

mqtt_add_subscription("test_sub/C");

}

function on_update() {}

function on_cloud_command() {

logger.info("received command: " + output.command);

logger.info("received command on topic: " + output.command_properties['topic']);

}
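With several subscriptions active, on_cloud_command() typically needs to decide which subscription a message belongs to. The sketch below matches a received topic against a filter using the standard MQTT wildcard rules ("#" for the remaining levels, "+" for exactly one level). topicMatches is a hypothetical helper, not an EI function, and it ignores special cases such as topics beginning with "$".

```javascript
// Hypothetical helper: returns true if an MQTT topic matches a topic filter.
function topicMatches(filter, topic) {
  var filterLevels = filter.split("/");
  var topicLevels = topic.split("/");
  for (var i = 0; i < filterLevels.length; i++) {
    if (filterLevels[i] === "#") {
      return true; // multi-level wildcard matches everything that remains
    }
    if (i >= topicLevels.length) {
      return false; // the filter is longer than the topic
    }
    if (filterLevels[i] !== "+" && filterLevels[i] !== topicLevels[i]) {
      return false; // literal level does not match
    }
  }
  // Without "#", the filter and topic must have the same number of levels.
  return filterLevels.length === topicLevels.length;
}

var matchesWildcard = topicMatches("test_sub/#", "test_sub/C");
var matchesOther = topicMatches("test_sub_a", "test_sub_b");
```

Inside on_cloud_command(), the second argument would be output.command_properties['topic'], so that each subscription can be handled by its own branch.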

MQTT Cloud to Edge Messaging/Commands

This feature enables you to send instructions or commands from the northbound application, through the MQTT server, to the Data Logic script running on an EI Agent. It is currently available only for MQTT data destinations. To handle commands coming from the data destination, the Data Logic script needs additional functionality. In the VS Code plugin, for a given Data Logic, click Enable Cloud to Device Command in the Runtime Options. A new callback function, on_cloud_command(), is inserted into the script to handle commands from the data destination. This callback is called whenever a command is published from the MQTT cloud. You can access the command through the output.command variable.

To send a response for a command, call send_command_response(request_id, payload) with the request ID of that command. This function can be called from any function in the script.

The response is sent on the response topic from the Data Logic back to the command sender. For example, send_command_response(trigger.request_id).

Data Logic Example for MQTT Cloud to Edge Messaging/Commands

var target_temp = 4;

var cached_request_id = null;

function on_cloud_command()

{

// Make sure we always send a response for a previous command in flight

if (cached_request_id !== null) {

send_command_response(cached_request_id, "Overwritten");

}

// write back to SB connectors

asset1.target_temp = getTargetTemp(output.command); // custom utility function to extract specific value from the incoming string

asset2.target_temp = getTargetTemp(output.command); // custom utility function to extract specific value from the incoming string

asset1.publish();

asset2.publish();

// store request id to send a response later

cached_request_id = trigger.request_id;

// possible future extension ideas:

// topic = trigger.command_topic

// trigger.command_originator_identifier

// store target temp to check the state of our SB values on updates

target_temp = getTargetTemp(output.command);

}

function on_update()

{

// check if command was fulfilled

if (cached_request_id !== null &&

trigger.field_name == "temperature" &&

asset1.temperature == target_temp &&

asset2.temperature == target_temp) {

// send response for the last command

send_command_response(cached_request_id, "Ok");

// clear cached request id

cached_request_id = null;

}

output.publish();

}

function on_time_trigger()

{

// ...

}
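The example calls getTargetTemp(), which is described only as a custom utility function. A minimal sketch of such a helper follows, assuming that the command arrives as a JSON string with a numeric target_temp property. Both the payload shape and the property name are assumptions made for illustration.

```javascript
// Hypothetical implementation of the custom utility used above.
// Assumed command format: '{"target_temp": 6}'
// Returns the number, or null if the command cannot be interpreted.
function getTargetTemp(command) {
  try {
    var parsed = JSON.parse(command);
    if (parsed !== null && typeof parsed.target_temp === "number") {
      return parsed.target_temp;
    }
  } catch (e) {
    // Not valid JSON: fall through and report "no value".
  }
  return null;
}

var requestedTemp = getTargetTemp('{"target_temp": 6}');
var invalidTemp = getTargetTemp("set temperature please");
```

Because the helper returns null for commands it cannot interpret, a script using it would normally check the result before writing it to an asset attribute.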

New features for on_cloud_command

output.command_properties["topic"] contains the topic on which the command from the cloud was received. If protobuf is enabled, output.command now returns a Uint8Array; otherwise, it returns ASCII text.

Example:

function on_cloud_command() {

logger.info("received command:" + output.command);

logger.info("received command_prop:" + output.command_properties['topic']);

}
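Because output.command can be either text or a Uint8Array, logging it by string concatenation may produce unreadable output for binary commands. The sketch below shows one way to handle both cases; formatCommandForLog is a hypothetical helper name, and the "<N bytes>" notation is an arbitrary choice.

```javascript
// Hypothetical helper: produce a log-friendly description of a command.
function formatCommandForLog(command) {
  if (typeof command === "string") {
    return command; // ASCII text commands are logged as-is
  }
  if (command instanceof Uint8Array) {
    return "<" + command.length + " bytes>"; // binary (for example protobuf) payload
  }
  return String(command); // anything unexpected is stringified
}

var textLog = formatCommandForLog("set_temp=4");
var binaryLog = formatCommandForLog(new Uint8Array([0x08, 0x04, 0x12]));
```

In on_cloud_command(), the result could be passed to logger.info() in place of output.command.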

Data Logic Example for Timestamp

function on_update() {

var device = trigger.device_name; // Represents an asset

var attribute = trigger.field_name; // Represents an asset attribute

var value = input[attribute]; // Current attribute value

var timestamp = trigger.timestamp; // Represents an asset attribute timestamp

// logging only

logger.info("device name: " + device);

logger.info("attribute name: " + attribute);

logger.info("attribute value: " + value);

logger.info("attribute value timestamp: " + timestamp);

output.value = value + "---" + timestamp;

output.publish();

}

Output

[{"key":"value","type":"STRING","category":"TELEMETRY"}]